Batching

BATCHING

Throughout this book I make reference to the technique of Batching. So what is it, which characteristics does it exhibit, and how does it influence businesses?

Simply put, Batching is the aggregation of multiple units (of work) into a single (larger) parent. This parent unit is constructed and then transported downstream, to subsequent processing workstations (or teams), until finally reaching its intended recipient. Consequently, a relationship is formed not only between the parent (batch) and child (lower-level unit), but also between those children [1].

TOOL

To elaborate some of these principles I created a software application. You can find it here: https://batching.assuredsynergy.com

Let's now discuss the challenges with Batching.

MINDSET & SYSTEM-LEVEL THINKING

Batching may (rightly) be a sensible recourse in certain industries [2], such as when manufacturing many physical products that require a high degree of uniformity. In the creative software industry, though, I often find it synthetic, unnatural, and deeply entrenched in the psyche, and it's unnecessary for most digital products, which don’t need to be (re)manufactured or batched.

But it can also be a mindset pollutant. A single team that thinks and works in Isolation, typically with good intentions (such as dealing with its own capacity or efficiency concerns), can effectively force everyone down the same path, to the detriment of Systems Level Thinking and Flow. It works with batches, forcing other teams to work in the same fashion.

DELIVERY PRACTICE BATCHING

Conway [3] described how the architecture tends to mirror the organization's communication paths. This is also true of delivery practices. A team that produces or consumes batches influences the immediately preceding and successive teams to also work in this fashion, who subsequently influence their dependents. The outcome is a batch-oriented mindset, adopted, inculcated, and entrenched across an entire business that's difficult to supplant.

To highlight how batching affects and influences others, consider the following dialogue based on a real case.

Partner: “We'll need all of those specifications defined and finalized in the next three weeks.”

Bob (internally): Steady there! Those specifications are a long way off being finished. And what's this new deadline that requires everything?

Bob: “Perhaps we could give you one specification at a time, as we finish them. Then we can finalize it, and you could deliver it incrementally?”

Partner: “You'd think that [sic: even they think this is wrong], but unfortunately we don't work like that. Plus it's more efficient for us to deliver it all in one go.”

Bob: “But you told us you worked in an Agile manner. That means working and delivering in an Agile manner. More importantly, you've just forced a significant amount of work upon us, in a short time-frame, simply because you're working in batches. That doesn't seem very fair. It'll force us to rush the work, so we'll likely miss important information. Feedback will also be slow, so putting it right will be intrusive and expensive for everyone.”

Partner: Shrugs shoulders. “Sorry, but that's how we work.”

This is myopic: one party is so focused on itself that it has neglected to consider the broader system-level effects (and, indeed, the effect on what should be a supportive, collaborative partnership).

WASTE

Fundamentally Batching is affiliated with working in isolation (e.g. Silos), which generates Waste (The Seven Wastes). This can be demonstrated in part by using the software tool (link provided earlier).

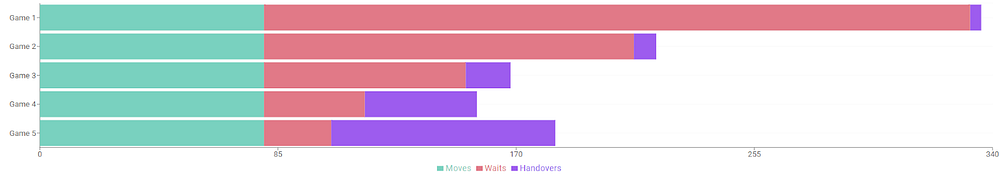

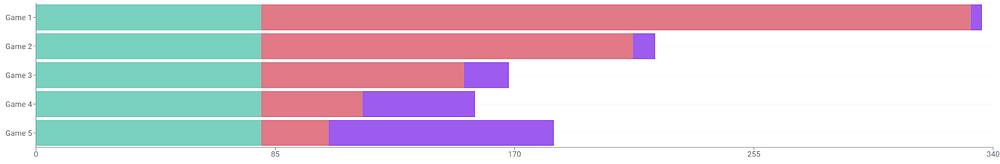

Play Scenario 2 - 5 Standard Games, which is five games of: twenty coins, four players, and the following batch configurations: 20, 10, 5, 2, 1 - and compare the results. This is what I get.

Or as a bar chart (the colors indicate the type of activity being undertaken - green is plays, red is waits, and purple is transportation).

There are a few interesting aspects here. First, look at the Total Plays for each game (within the doughnuts). This indicates how many moves in total (i.e. every move of every player) it takes each game to release all value to the customer. Typically, the longer it takes to deliver value, the more undesirable it is.

Notice how much time is spent waiting when larger batch sizes are used (e.g. game 1). This is representative of workers in a business' Value Stream who are blocked (doing nothing) whilst awaiting work. But there's also the handoff waste (technically a Transportation waste): the non-value-adding effort of transporting work to the next player. The general pattern here is that the lower the batch size, the greater the amount of transportation waste (game 5 has lots, game 1 has very little). This is described further in the Transportational Waste section later.

Purely from a waste perspective, Game 1 is the clear loser (of a total of 336 plays, there are 80 value-adding moves, 252 waits, and 4 handovers). That equates to ~75% waste (or ~25% useful work). Whilst games 2, 3, and 4 all have a similar total number of plays, their quantities of waste (waiting and transportation) are quite different. Game 3 has more waiting than 4 or 5, but fewer handovers. Game 5 has less waiting, but more handovers. If we were solely interested in the lowest number of total plays and the least waste, which we're not, we'd do well to consider game 4’s configuration.
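The game 1 figures can be reproduced with a small model. The sketch below is one reading of the game's rules rather than the tool's actual implementation: it assumes each player processes one item per move, spends one move handing a completed batch downstream, and otherwise waits. The names `GameResult` and `simulate_game` are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class GameResult:
    duration: int   # moves until the last player hands over the last batch
    plays: int      # value-adding moves, summed over all players
    handovers: int  # transportation moves, summed over all players
    waits: int      # idle moves, summed over all players

    @property
    def total_moves(self) -> int:
        return self.plays + self.handovers + self.waits


def simulate_game(items: int = 20, players: int = 4, batch_size: int = 20) -> GameResult:
    # Split the items into batches; the last batch may be smaller.
    sizes = [min(batch_size, items - start) for start in range(0, items, batch_size)]
    # upstream[b] = move on which the previous player handed over batch b.
    upstream = [0] * len(sizes)
    for _ in range(players):
        finish = []
        free_at = 0  # move on which this player finished its previous batch
        for b, size in enumerate(sizes):
            start = max(free_at, upstream[b])
            free_at = start + size + 1  # `size` plays plus one handover move
            finish.append(free_at)
        upstream = finish
    duration = upstream[-1]
    plays = items * players
    handovers = len(sizes) * players
    # Every player is counted on every move, so whatever is left is waiting.
    waits = duration * players - plays - handovers
    return GameResult(duration, plays, handovers, waits)


for size in (20, 10, 5, 2, 1):
    result = simulate_game(batch_size=size)
    print(size, result.total_moves, result.plays, result.waits, result.handovers)
```

Under these assumptions a batch size of 20 yields 336 total moves, made up of 80 plays, 252 waits, and 4 handovers, matching the figures quoted for game 1. Treat the other batch sizes as indicative only, since the tool may count some moves differently.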

SIDE HUSTLES

Of course in the real world, businesses don't expect staff to sit around waiting for work to arrive, which is where Efficiency Bias kicks in. Rather than wait, they're given side hustles, to keep them occupied whilst the main activities are being processed. This increases Work-In-Progress (WIP), Expediting, and cognitive overhead, and produces Transportation waste (mainly in the form of Context Switching). Teams engaged in these side hustles can become invested in them, and lose sight of the business goal. See the Expediting section for more.

OVERPROCESSING

Larger batches also have the potential for Overprocessing, which is just another term for Gold Plating. This waste relates to adding features, impressive designs, and various other “technical flourishes” that are completely unnecessary, aren't considered in any financial or temporal budgets, and therefore lower potential ROI. They could also increase Complexity [4]. Overprocessing in software typically occurs when work is done in extended Isolation. Individual entities - whether a person, a team, or even a business - are allowed to work in isolation for longer periods, so have more opportunities to gold plate their work [5].

DEFECTS

Defects are another common form of waste. Let's play another game. This time though, rather than working with flawless items, we'll mark one to indicate a flaw, unexpected feature, or defect, that we might encounter in a real value stream.

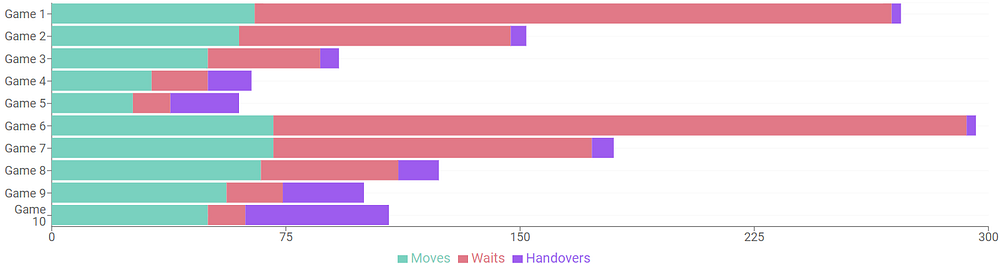

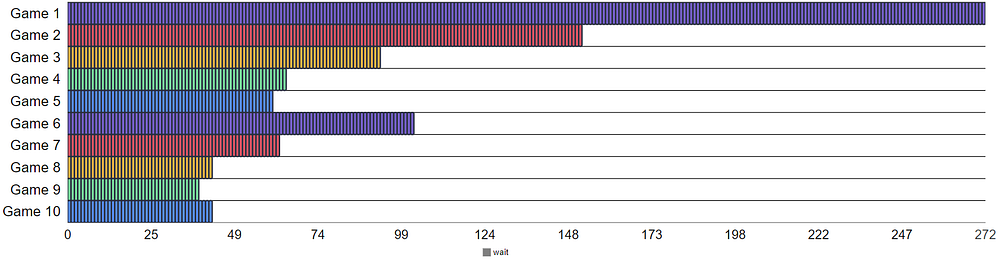

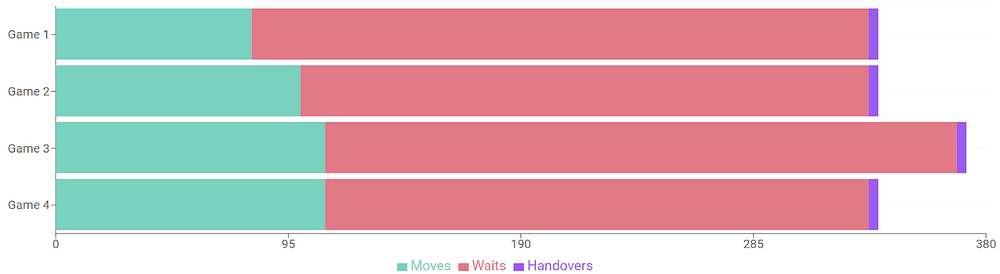

Select Sample 3 - 5 Games - Defect at 5 in the tool. In this case, a defect exists in item 5 (from a total of 20 items), and the defective item is only verified by the customer (the last player), who quality checks it before accepting or rejecting the batch. Note that, when the defect is identified, all work (i.e. all players) must stop. Here are the waste and cashflow results.

Note the spiky cashflows for some (games 4 and 5), and a downward linear path for others. Here are the defect feedback results.

Note how the large batch (game 1) has a significantly longer feedback time (it takes 272 moves to find it), and amount of activity, than the smaller batches (e.g. game 3). The cashflow (line chart) for game 1 is also terrible; indeed only games 4 and 5 have any return. Not only does a defect in a large batch present a greater opportunity to pollute than a defect in a small batch, but it also leads to considerably slower feedback, and lowers the return potential.

Should we move the defective item to later on (e.g. position 11), we get even worse results. Select Sample 7 - Compare Defect On At Different Positions and play it through. This has ten games in total, five games (1 to 5) where the defect is at position 5 and five games (6 to 10) where the defect is at position 11. Once again, it's player 4 who verifies in each case. See below.

The waste view paints a similar picture; see below.

Ok, so a later defect is worse, but it's not (yet) drastically different. The timeline before the defect is identified is extended (bad), which slows feedback and potentially increases the number of defects in the system before they're found.

Let's push it a bit further by looking at the effect of shifting the verification stage earlier. Play through Scenario 8 - Compare Verify At Different Players. In this scenario, the defect is always in item 5. Importantly though, in games 1-5 we verify at player 4, and in games 6-10 we verify at player 2. Here are the waste results.

Compare game 1 with 6 (largest batch) and game 5 with 10 (smallest batch). We're seeing 2-3 times as much waste in the large batch when the verification step is left to the end, rather than done at player 2. There's also a noticeable, but lesser, effect in the small batches (5 and 10). Here are the defect feedback results.

Again, and possibly unsurprisingly, we're seeing significant disruption with the large batches and a noticeable (but lesser) impact with the smaller batches. What does this tell us? Defect identification is significantly shortened if (a) you verify earlier (you shift left), and (b) you work with smaller batches.
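The effect of batch size and verification position on defect feedback can be approximated with a little arithmetic. This is a minimal sketch under assumed rules, not the tool's own logic: each player processes one item per move, each batch costs one extra handover move, a defect is found on the move where the verifying player processes the flawed item, and every player is counted on every move up to that point. The function names are hypothetical.

```python
def defect_found_at_move(defect_item: int, verifier: int, batch_size: int) -> int:
    """Move on which the verifying player (1-based) plays the defective item (1-based)."""
    batch_index = (defect_item - 1) // batch_size     # which batch holds the defect
    offset = (defect_item - 1) % batch_size + 1       # position of the defect in its batch
    # Each upstream player, and each earlier batch, delays the verifier
    # by batch_size plays plus one handover move.
    start = (verifier - 1 + batch_index) * (batch_size + 1)
    return start + offset


def moves_until_found(defect_item: int, verifier: int, batch_size: int, players: int = 4) -> int:
    # All players stop when the defect is found, so every player has
    # spent the same number of moves (playing, waiting, or handing over).
    return defect_found_at_move(defect_item, verifier, batch_size) * players


for batch_size in (20, 1):
    late = moves_until_found(defect_item=5, verifier=4, batch_size=batch_size)
    early = moves_until_found(defect_item=5, verifier=2, batch_size=batch_size)
    print(batch_size, late, early, round(late / early, 1))
```

With a batch of 20 and verification at player 4, this gives 272 moves, which agrees with the figure quoted earlier for game 1. Moving verification to player 2 shortens the large batch's feedback time far more than it does the single-item batch's, which is the same shape of result the tool shows.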

SHIFT-LEFT AT WORK

Shift Left produces positive outcomes by shifting activities to earlier, thereby tackling problems sooner. In our case, we shift the verification stage to earlier to identify defects (and resolve them) sooner and thereby more cheaply.

FAST FEEDBACK (FIRST VALUE)

The speed at which your customers receive value (any value) is another important factor. Not only does it provide the customer with usable solutions (even if only partially), but it also promotes the flow of cash within your business.

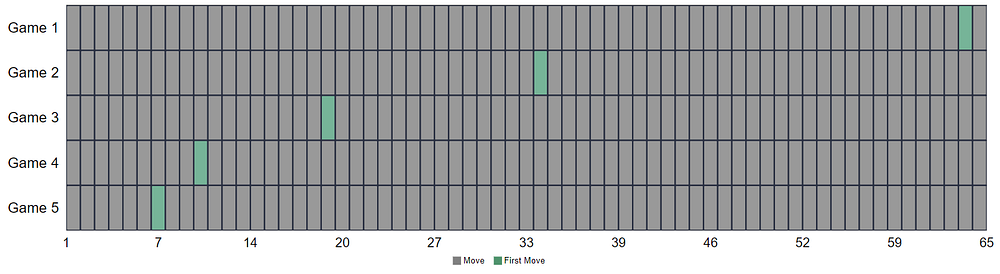

Run Sample 2 - 5 Standard Games in the tool. You’ll see the following.

In this scenario the last player (player 4) acts as the customer holding the purse strings. You don't receive any money until they get something from you. Your job isn't solely to give customers useful features, it's also to get a profitable investment return as soon as possible, by delivering sooner.

Looking at the Feedback section in the tool, you'll see something like this.

The green rectangles represent when the customer (last player) first receives some value (the output of all your hard work). Compare games 1 and 5. The customer in game 1 (largest batch) doesn't receive any value until move 64, whilst the customer in game 5 (smallest batch) receives some value very quickly (move 7). That's a significant difference. Why? Imagine that you were building out an important new feature but you don't know how it will be received. Game 1 (large batch) requires you to build all of it up-front, and not deliver anything to the customer until very, very late. If customers hate it, what do you do? You've just wasted a lot of time and money on a flop that would have been better spent elsewhere. The return is terrible.
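The first-value figures follow from simple arithmetic. The sketch below assumes (this is not confirmed to be how the tool works) that every upstream player needs one move per item plus one handover move before the next player can begin; `first_value_move` is an illustrative name.

```python
def first_value_move(batch_size: int, players: int = 4) -> int:
    """Move on which the last player (the customer) first receives an item."""
    upstream_players = players - 1
    # Each upstream player spends batch_size plays plus one handover
    # on the first batch before passing it on.
    return upstream_players * (batch_size + 1) + 1


for batch_size in (20, 10, 5, 2, 1):
    print(batch_size, first_value_move(batch_size))
```

For a batch of 20 this gives move 64, and for a batch of 1 it gives move 7, in line with games 1 and 5 above. The delay grows linearly with both the batch size and the number of upstream stages.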

FEEDBACK

Delivering value to customers early (and often) helps you glean valuable insights before you spend lots of time on potentially unimportant features (Feature Development is a Form of Gambling). It's a way of testing that your assumptions are right and the thing you're building is valuable. In itself it's a valuable cashflow protection policy.

The advantages of smaller batches are clear. If you've delivered incrementally using small batches, but customers don't like it, so what? You haven't made a heavy investment, so it's easy to adjust, or even kill the feature for a better alternative.

TRANSPORTATIONAL WASTE

I touched on transportational waste in an earlier section, but let's now return to it. Every product or service is a composition of many discrete parts, activities, or stages. In Karl Marx's example, it was a tailored jacket; for Adam Smith, a pin; a biscuit is composed from many ingredients and four key stages; a Rolls-Royce aircraft engine is composed of over 30,000 individual components [6].

Regardless of the product, every stage in a Value Stream must receive that product to complete their own value-add activities, which occurs through transportation. Transportation may be a necessity (it's required to deliver value to customers), yet it's also non-value-adding (and why it's a waste), since it doesn't improve the product.

Let's return to our sample games. Comparing games 1 and 5 in Sample 2 - 5 Standard Games, game 5 clearly contains far more transportational waste. See below.

We’ve ended up replacing one form of waste (Waiting), with another (Transportational). At first glance this seems unappealing. For instance, transportational waste requires someone (or something) to do something (to transport something to somewhere else for further processing), whereas waiting doesn't require a person to do anything. And you can't expedite transportational waste like you can with waiting. There are, however, a few interesting qualities.

Firstly, note that large batches have a much larger transportational waste than what’s shown here; i.e. you're still incurring transportation costs, even if they’re less visible. At some point, the items within the large batch must be split out into smaller units of work and tackled (often sequentially), which consequently has a transportation cost (consider a testing team with limited capacity). I didn't represent this cost in the game (how could I?), but it still exists.

CONSTRAINTS & BATCHING

The Theory of Constraints (ToC) states the constraint is the limiting factor in a Value Stream's overall throughput. Thus, when the size of a batch exceeds the capacity of the constraint, you must slow down - so does batching really help? Fundamentally, this is the same problem you face when a pipe (or chimney) is blocked in your home. You need to unblock it - using a brush, a plunger, or chemicals - to get it flowing again. You achieve this by decomposing the offending item into smaller units that eventually “fit” down the pipe.

When you reach a constraint (blockage) in a value stream, you must do the same thing - you must (virtually) decompose the batch into smaller units, processing each in a sequential fashion, until it’s all done [7].

Processing batches in this manner therefore leads to Waiting, but there's also the Transportation costs of cognitive load, coordination, decomposition, and sequencing that are rarely considered.

Secondly, it's often assumed that there's an inefficiency associated with this approach, since people are transporting things, but why does it need to be a person? If activities can be broken down into small enough units, and given a level of standardization (Uniformity), then why can't automation do the heavy lifting via techniques like Deployment Pipelines and fine-grained User Stories?

PRE-MODERN ASSEMBLY LINES

In the days prior to modern (moving) assembly lines it was quite typical for manufacturing plants to physically transport items from one department to another, sometimes over relatively long distances. This is inefficient. Henry Ford saw this problem, and became an early adopter of the moving assembly line, which became a critical enabler for Ford to dominate the market for many years.

Decades later, the McDonald brothers (yes, that McDonald's) also realized that the efficiency implications of transportation would affect the quality of customer service that they sought. In an act of great insight, they drew up their new restaurant plans (in chalk) on a tennis court and simulated the delivery experience, seeking the most efficient restaurant configuration, and moving the “chalk stations” when encountering flow and transportation problems [8].

Today, of course, many assembly lines are both automated and moving. Work is automatically transported on to the next stage in the assembly process without the need for someone to manually move (transport) it. Transportation costs still occur, but they’re contained within the cost of building and running the assembly line rather than being a regular and repeated cost.

This concept hasn’t yet seen universal adoption. For instance, there are still organizations delivering software without Deployment Pipelines (software’s equivalent of the moving assembly line), thereby incurring the associated transportation costs.

CASHFLOW

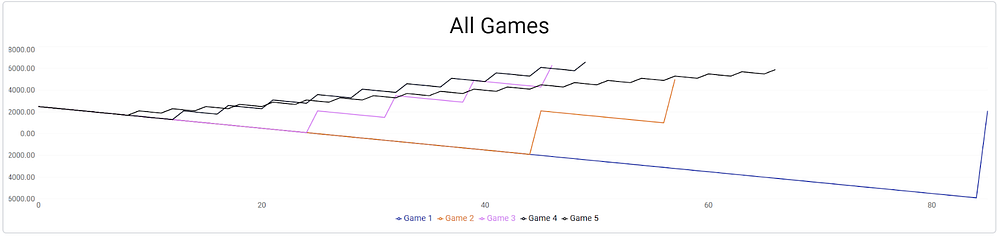

Let's now view batching through the cashflow lens. Run Sample 2 - 5 Standard Games. To keep it fair, each game is configured to start with the same cashflow position, and have identical profit and loss potential. View the Cashflow section for all games and you’ll see something like this.

Note the patterns for each cashflow line graph, which are more clearly presented below.

Each line in this graph represents a game (i.e. all player data is aggregated per move position). The first thing to note is the length of each line. Some are noticeably longer than others (for instance, compare game 1 with game 5). The length represents how long it takes to complete all work, and thus, reclaim the entire financial investment. Shorter tends to be better here.

The second thing worth noting is the pattern of each line. If you follow game 1's trajectory, it's almost exclusively downwards (down and down and down it goes) until the very last step, where it makes a very steep rise. Predominantly, it indicates lots of spend, with a final return only achieved at the very end. Compare that to game 5, which is shorter, and far more jagged. It heads down for a brief time, before quickly rising, and then repeats that same arrangement again and again. This is a repeated pattern of a relatively small spend, immediately followed by a modest return, a much healthier position.

Remember, this graph represents business cashflow and ROI. With game 1 you’re reaching deep into your pockets for more money, and receiving nothing back for a long time. In contrast, with game 5, whilst you still need to reach into your pocket, someone else is quickly filling it back up. It tells us that smaller batches have the potential to make faster returns (ROI) than larger ones. It may also clear the way to more flexible Just-In-Time budgeting options.
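The two cashflow shapes can be approximated with a toy model. Everything numeric here is an assumption for illustration, not the tool's configuration: each value-adding play costs 1 unit, waits and handovers cost nothing, and the customer pays a hypothetical 6 units per item once the last player has handed over the batch containing it.

```python
def cashflow(items: int = 20, players: int = 4, batch_size: int = 20,
             cost_per_play: int = 1, price_per_item: int = 6) -> list[int]:
    """Running balance after each move (index 0 is move 1)."""
    sizes = [min(batch_size, items - start) for start in range(0, items, batch_size)]
    upstream = [0] * len(sizes)   # move on which the previous player handed over each batch
    spend: dict[int, int] = {}    # move -> money spent on plays in that move
    income: dict[int, int] = {}   # move -> money received from the customer
    for player in range(players):
        finish = []
        free_at = 0
        for b, size in enumerate(sizes):
            start = max(free_at, upstream[b])
            for move in range(start + 1, start + size + 1):  # the play moves only
                spend[move] = spend.get(move, 0) + cost_per_play
            free_at = start + size + 1                       # plus one handover move
            if player == players - 1:
                income[free_at] = size * price_per_item      # the batch is accepted and paid for
            finish.append(free_at)
        upstream = finish
    balance, line = 0, []
    for move in range(1, upstream[-1] + 1):
        balance += income.get(move, 0) - spend.get(move, 0)
        line.append(balance)
    return line


for size in (20, 1):
    line = cashflow(batch_size=size)
    print(size, "deepest point:", min(line), "final:", line[-1], "moves:", len(line))
```

In this sketch both games end on the same final balance, but the large batch sinks far deeper before any money returns, while the single-item batches dip briefly and recover repeatedly. The absolute values depend entirely on the assumed cost and price.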

EFFICIENCY

One reason Batching sees such widespread use lies in its efficiency. If it’s done right, you know exactly what you're doing, and expectations don't change prior to delivery, you get a very efficient way of delivering value. But is it effective?

Let’s turn our attention to batching in the Waterfall Methodology. If you can gather up all of your requirements in one fell swoop, correctly design, implement, and test it, without failure, you’ll struggle to find a more efficient approach.

It's much easier to work in this manner when Uniformity is high. Many factories, for instance, produce thousands, or even millions, of the same product in short order, using the same ingredients or components, and constructed in exactly the same fashion [9]. Each item is built in the same way, and the consumer isn’t going to ask you to change its feature. Software features, however, don’t have physical characteristics (this mutability means that customers are more comfortable requesting changes). Neither are they Uniform (like a biscuit) - each one is uniquely considered, designed, built, and tested. High uniformity equates to high volume which equates to high batching potential. Low uniformity generally leads to the opposite; e.g. higher cognitive load (Transportation costs), and greater potential for Defects [10]. To summarise, you may want efficiency in software, but it’s probably not achieved via batching.

THE EFFICIENCY BIAS TRAP

Businesses producing software often fall into the batching trap because they only consider efficiency. It's a great example of Efficiency Bias at work, where System-Level Thinking is replaced by a more granular, parochial view of the efficiency of a single team. The goal isn’t (just) to be more efficient, it’s to make the overall flow of value to the customer more efficient and effective.

RISK

One of the best examples of the risk of Batching comes from the Waterfall Methodology. Recall that this methodology is a coarse-grained, highly sequential, regimented, and forward-oriented delivery methodology. You do all of the requirements gathering, then all of the design, then all of the implementation work etc. It's a great example of Isolation and low (diverse) collaboration that naturally leads to the batching of large tranches of change.

There are a number of inherent risks here. First, there's the risk of the batch being so large that it's no longer valuable when it's finally delivered. Or to rephrase, its value has been negated by its slow delivery. Then there's the risk of large batches hiding quality issues. There's also the potential for Bias (e.g. political) and Loss Aversion, both more latent in large batches, to hamper feedback and necessary rework. And finally, it also lowers delivery speed and reduces feedback opportunities (Fast Feedback), which is particularly important when we encounter defects (see Defects section). The pollutant finds it much easier to hide, due to the vast amount of noise from everything else.

BATCHING & POLLUTANTS

Generally speaking, the larger the batch, the longer it takes to uncover a problem, which could mean a large amount of additional, avoidable work is done. That's not very customer-oriented, nor is it great for the business (e.g. loss of ROI).

Several well-respected technology leaders annually publish their State of DevOps Report [11], typically with a section showing the correlation between deployment frequency and success. Elite and high performing businesses tend to be the ones that deploy very frequently; e.g. multiple times a day. By now, this shouldn't come as a surprise - I've reiterated the importance of delivery throughout this book (e.g. DORA Metrics). Batching though is directly linked to deployment frequency - the larger the batch, the longer the delay between releases, and the slower the delivery. Perversely, the longer the delay, the more likely there's batching.

RESILIENCE

Batches - by their nature - contain many items of work. You’re gathering many items of data, or work, together into a “batch” that you’re then moving (treating) as a single unit of work. Yet, in terms of processing (rather than distribution), it's rarely possible to treat a batch as a single atomic unit.

Let’s visit a biscuit factory. Each biscuit in a batch is created through four main steps: mixing, forming, baking, and cooling; i.e. for each batch run we prepare the ingredients, mix them, form them, bake them, and cool them, before packaging them and distributing them to the various shops, and thus on to our consumers. Each step is quite intensive, more complex than you might imagine [12], and immutable. Once it’s done, it’s done - there’s no undo option. Imagine then, that a pollutant is accidentally introduced during the mixing phase, and no one is aware [13]. Possibly it only affects one biscuit, or possibly the whole batch is polluted.

In one scenario, the biscuits are distributed to consumers, where the pollutant is discovered. Anyone aware of the problem immediately discards the biscuits, and raises their concerns with the relevant authorities. The whole batch is probably deemed “polluted”, must be discarded (recalled - the ultimate Waste), and the manufacturer faces reputational damage (at least). In another scenario, the pollutant is caught earlier, at the quality check stage, before it reaches consumers. This is good, in the sense that a potentially dangerous product hasn’t been released, but bad in the sense that every part of that batch is now considered polluted. A single flaw, in a single item, in a batch, may lead to the whole batch being discarded.

It’s a similar principle in software batches. For instance, when you introduce a flawed feature into a batch of changes, then you must abandon the release (but not necessarily all of the changes; they probably weren’t constructed from the same ingredients), rework the offending item(s), which takes time and causes Expediting, and then re-release a new batch. The pollutant may not ruin the entire batch, but it’s very invasive.

There’s also batch-based system-to-system communications, where a producer distributes a batch of data to a consumer to process. This approach is commonly used in file-based transfers (e.g. CSV files) between businesses. Again, it’s quite common for a single pollutant (row), intermingled with valid data, to break the entire batch, and cause the consumer to fail [14]. The solution tends to be to (manually) identify the pollutant(s), decide what to do with them, (typically) remove them, and then re-process the batch.
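To make the CSV case concrete, here is a minimal sketch contrasting an all-or-nothing consumer with one that sets polluted rows aside. The column names (`id`, `amount`), the validation rule, and the function names are all hypothetical; a real integration would have its own schema and rules.

```python
import csv
import io


def parse_row(row: dict) -> dict:
    # Hypothetical validation: every row needs an id and a numeric amount.
    if not row.get("id"):
        raise ValueError("missing id")
    return {"id": row["id"], "amount": float(row["amount"])}


def process_all_or_nothing(text: str) -> list[dict]:
    # A single bad row raises, so the whole batch is lost.
    return [parse_row(row) for row in csv.DictReader(io.StringIO(text))]


def process_with_quarantine(text: str) -> tuple[list[dict], list[tuple[int, str]]]:
    # Valid rows are accepted; polluted rows are recorded with their
    # line number (the header is line 1) so they can be reviewed later.
    accepted, rejected = [], []
    for line_no, row in enumerate(csv.DictReader(io.StringIO(text)), start=2):
        try:
            accepted.append(parse_row(row))
        except (ValueError, TypeError) as exc:
            rejected.append((line_no, str(exc)))
    return accepted, rejected


batch = "id,amount\n1,10.5\n2,oops\n3,7\n"

try:
    process_all_or_nothing(batch)
except ValueError as exc:
    print("batch failed:", exc)

accepted, rejected = process_with_quarantine(batch)
print(len(accepted), "accepted;", "rejected lines:", [line for line, _ in rejected])
```

The second function simply automates the "identify and remove" step described above. Whether it is acceptable to process the valid rows while the polluted ones are held back is a business decision, not something the code can settle.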

What I’ve demonstrated here is that Batching affects Resilience (both Change and Runtime versions). The larger the batch, the greater the potential pollutants, and the greater the impact.

ORGANIZATIONAL ISSUES

When we batch changes, we're distributing a large quantity of change at one time. These changes are coupled to the parent unit (our batch), and also, in a sense, to their siblings. This makes the reorganization (of both the batch and the affected teams) difficult and invasive when things go awry.

Batching suits Isolation, whether in terms of activities or in thinking, and isolation tends to favor centralized teams and Silos. Silos though, often have their own priorities, or multiple projects vying for their attention. They’re not always as deeply invested in business-wide initiatives as maybe they should be, in part because of their isolation. Yet the fast, effective flow of value through your Value Stream is vital. Is each siloed team truly invested in that flow, at the expense of all else, or are there still pockets of resistance working in batches? It may sound unimportant, but you only need one siloed team, working at a lower capacity and awkwardly placed within a Value Stream, to force batching upon others [15].

SYSTEM-LEVEL THINKING

Business processes require holistic, system-level thinking, which considers the effect of batching and Silos. There's little point building in efficiency everywhere else only to leave a key team siloed and working in batches. Beating the Batching Bias requires wholesale rethinking of the entire flow, not just one part.

Conway's Law describes how an architecture mirrors the business’ communication and organizational structure. This is also true of its data flow and work item delivery model. If one team only works with batches, that influences others, thereby causing further batching to occur up and downstream. See below.

ACCURACY

Some processes (most commonly with ETLs) use heavyweight batching techniques to transfer data from one system onto another [16]. Unfortunately, this often affects the accuracy and currency of the data - systems are unnecessarily inconsistent (Consistency), less reliable, and thereby lose credibility and Stakeholder Confidence.

Batching either expects the sender to wait until a particular number of items arrive, or to wait and action them at a specific time. The receiver (consumer) waits for the sender, and must then process a potentially huge batch in one go. Either way, the Waiting aspect sanctions consistency issues [17].

STREAMS

It’s no accident that modern data integration techniques use the term Streams. They allow lots of small items of data to flow down a stream, and be consumed in near real-time. These techniques allow systems to remain more accurate and consistent.

Batching can also cause informational inaccuracies between individuals and teams, since change is much larger (potentially too large to contextualize), and takes much longer to travel through the Value Stream, meaning information is lost [18].

QUALITY ISSUES

Large releases (batches) are complex, lengthy, and unwieldy beasts. A large batch requires us to process far more (physically and cognitively), to such an extent that we may be unable to comprehensively process it. Problems encountered in these circumstances typically aren’t caught early enough, indeed sometimes too late to do anything about them.

Batches also have a higher emotional investment, simply because they contain more value. To analogise, it’s far worse to drop a beautiful cake you've spent all day making than to drop one of twelve cupcakes, since the cake has far greater emotional investment. It’s the same concept with the batch. The bigger the investment (effort or money), the more people are invested, and the less likely they are to stop investing, even if they think the project will fail, or it contains considerable flaws (Loss Aversion).

Larger releases (batches) then, are better at hiding quality issues, and are also less forgiving and more limiting should a problem be encountered.

PREDICTABILITY

Predictability is an important quality for any business. The more predictable you are in your estimates, implementations, and deliveries, the more confident you - and your customers - are that you can deliver on your promises.

But it’s difficult to accurately predict any of this when large batches are used, since they contain many more influences, Assumptions, Complexity, and therefore Surprises, than a small item of work, and so take significantly greater effort (time) to verify, release, and coordinate. To further exacerbate matters, no two batches are the same (in terms of their size and complexity), meaning you can’t find comparable batches to accurately estimate against.

EXPEDITING

Expediting occurs when there's a change in business priorities and there are current activities still in-flight (i.e. it's unnecessary to expedite if there’s nothing in-flight). It's often the sign of too much Work-in-Progress (WIP) (which is both a cause and an effect), large batches of change, slow release cycles (the result of large batches), and external pressures. But it also occurs when someone wants efficiency gains that are not part of the current business flow of activities.

In our case, the expeditor’s goal is to identify waste (or efficiency potential) in others, and fill those gaps with useful activities. For example, consider the table below showing ten moves for one player.

| Time Unit | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Move Before Expedite | P | P | P | W | W | W | P | P | P | W |
| Move After Expedite | P | P | P | P | P | W | P | P | P | W |

Before expediting, the player made 6 plays (useful work), and experienced 4 waits (waste). After expediting, the player makes 8 plays (useful work) and only 2 waits (waste), thus improving efficiency.
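The arithmetic behind the table can be checked in a few lines. The move strings below are copied from the table (P for play, W for wait); the function name `summarize` is illustrative.

```python
def summarize(moves: str) -> tuple[int, int, float]:
    """Return (plays, waits, share of moves spent on useful work)."""
    plays = moves.count("P")
    waits = moves.count("W")
    return plays, waits, plays / (plays + waits)


before = "PPPWWWPPPW"
after = "PPPPPWPPPW"

print(summarize(before))  # (6, 4, 0.6)
print(summarize(after))   # (8, 2, 0.8)
```

The player's local utilization rises from 60% to 80%. Note that this measures only that one player's busyness, not the flow of value through the whole system.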

REALITY CHECK

This approach is synonymous with how some workplace managers operate. If they see their employees without work, they'll find something for them to do. Typically though, their influence only extends into their own area, meaning efficiencies are isolated, and not necessarily seen elsewhere.

Consider also why the expeditor does this. Why must they find work for someone else to do? Because they aren’t always busy? Sure. But why isn't that person / workstation always processing business-critical work? Because it isn’t being delivered to them as a constant flow. Based on what I’ve covered here, why might that be?

Let’s consider a standard, non-expedited game against ones with an expeditor. This time select Sample 6 - Mix Standard & Expedited Games for Comparison in the tool. Here are the configurations.

| Game | Type | Items | Batch Size | Players | Expedite On | Expedite Batch Size |
|---|---|---|---|---|---|---|
| 1 | STANDARD | 20 | 20 | 4 | N/A | N/A |
| 2 | EXPEDITE | 20 | 20 | 4 | Player 2 | 20 |
| 3 | EXPEDITE | 20 | 20 | 4 | Player 2 | 30 |
| 4 | EXPEDITE | 20 | 20 | 4 | Player 3 | 30 |

The expeditor has a very specific task - whenever they see a player without work, they allocate them work. This work is entirely independent of the current “batch work” already in the system, and secondary to the business' overarching goal. To simplify our example, we’ll assume that expediting occurs only once. The player must complete any expedited activities before returning to the overarching “business goal” activities.

The player results for Game 1, the standard (non-expedited) game, are shown below.

Nothing exceptional here. The same pattern repeats in a stepped fashion through the players. Now view Game 2’s player results; shown below.

They’re similar, but note that player 2 (Development) shows two sets of green blocks (separated by a red block) rather than one. The first green block of 20 items is an expedited batch, particular to player 2 only. The second green block of 20 items (starting at position 22 and lasting for 20 time units) is the expected business flow of work. Comparing the same player for games 1 and 2, there’s clearly less waiting (red) in game 2, and thus an efficiency boost achieved via expediting. To reinforce this, here are the overall game results from a waste perspective.

Note the total number of moves remains unchanged between games 1 and 2, but there's a noticeable advancement of plays (green), indicating greater efficiency. Game 3 is interesting. Its total number of moves is greater due to a clash in priorities when the player is given expedited work (30 units) greater in size than the game’s batch size (20 units). Thus, important business activities are forced to wait for expedited work, which is completely unacceptable. Critical business work should never be forced to wait for the completion of isolated activities only important to one area.

PRIORITY CLASHES

Clashes in work item priorities tend to occur when (a) the expeditor delivers a change that takes more effort than the time left before the previous player delivers more work (as we’ve just seen), which in turn depends on the batch size, and (b) the player is expected to finish the expedited item first.
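To make the clash condition concrete, here is a rough sketch of it applied to games 2 to 4 from the configuration table. It is not how the tool works internally. The `business_delay` function is hypothetical, and it assumes one item takes one time unit, each upstream player hands over the whole batch only once it's finished, handoffs are instantaneous, and the expedited work starts at time zero.

```python
def business_delay(player: int, batch_size: int, expedite_size: int) -> int:
    """Time units the business batch is forced to wait at `player`.

    Assumptions (mine, not the tool's): one item per time unit, whole-batch
    handoffs that take no time, and expedited work starting at time zero.
    """
    # The player sits idle until every upstream player has finished the batch.
    idle_window = (player - 1) * batch_size
    # Expedited work that overruns the idle window delays the business batch.
    return max(0, expedite_size - idle_window)


# game number -> (expedited player, batch size, expedite batch size)
games = {2: (2, 20, 20), 3: (2, 20, 30), 4: (3, 20, 30)}

for game, (player, batch, expedite) in games.items():
    print(game, business_delay(player, batch, expedite))
# 2 0
# 3 10
# 4 0
```

Under these assumptions only game 3 clashes: the 30-unit expedited batch overruns player 2's 20-unit idle window, so business-critical work waits for 10 units. In game 4 the same 30 units fit inside player 3's longer idle window.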

One approach could be to always use large batches and ensure localised, expedited activities are always smaller than the batch. In this model there would always be time to expedite and build in efficiencies. But consider for a moment that this amounts to you saying: “I’m ok with performing isolated activities that don’t meet my business’ goal, possibly because I can’t organize us to always deliver our business’ goal in a joined-up, coherent, and effective manner, or because I think my work is more important than what the business deems so.”

VALUE STREAM EFFECTS

When individuals or teams choose to expedite in isolation and for themselves, they’re typically choosing to enhance or improve the efficiency of their own area, which may have unexpected consequences on the entire value stream - see Theory of Constraints.

Finally, run Sample 5 - 5 Games Expedited at Player 1 in the tool. Notice that by game 5, the batch size is small enough that players don’t get much opportunity to absorb other (non-business-critical) work, indicating that most of their time is committed to the business’ goal. That sounds like a great way to deliver change.

APPLICATION ARCHITECTURES

One of the big technology talking points over the last decade is the transition away from the centralized (monolithic) architecture to a distributed one. Whilst such a move can bring runtime benefits, it’s the ability to better manage small changes (to counter Batching) that’s a clear winner for me. Centralized architectures are tightly coupled, and slow to change, thus promoting large batches. Distributed architectures are loosely coupled, (relatively) quick to change, and promote small batches.

SUMMARY

There's a common conception that Batching is simple, efficient, promotes Economies of Scale, and is thereby cost-effective. It can be, but it's also highly volatile and influenced by many variables, so often, it’s not. I regularly see delivery flow problems where a business applies unnecessary Batching techniques to deliver change or manage system interactions.

As described, Batching comes with lots of baggage. Efficiency (Efficiency Bias) isn't the only - nor the most important - consideration. For instance, what about TTM, ROI, System-Level Thinking, Fast Feedback, WIP, Expediting, Loss Aversion, cashflow, and the multiple forms of Waste it can generate?

Even so, Batching is deeply entrenched in our psyche and remains the mainstay of many organizations and industries, and too often, is something we revert to in times of trouble (a coping mechanism). Batching then, can represent a broader risk to how businesses successfully deliver value, sustainability, and competitiveness.

FURTHER CONSIDERATIONS

- [1] - We'll discover why this is a problem shortly.

- [2] - Typically ones producing the same physical item over and over, like an assembly line.

- [3] - Conway's Law (Wikipedia).

- [4] - Adding additional features increases complexity, which also adds risk.

- [5] - I'm not necessarily saying extended isolation is an issue, only that it opens the door for more opportunities to abuse it.

- [6] - https://www.rolls-royce.com/media/our-stories/discover/2023/poweroftrent-how-does-our-production-test-facility-work.aspx#:~:text=It%20takes%20our%20team%20of,parts%20to%20build%20one%20engine.

- [7] - Testing a batch of software changes is an obvious example. You need to decompose the release, test each individually, coordinate all those activities, and then do a final more holistic regression test.

- [8] - https://www.rapidstartleadership.com/refining-the-plan/

- [9] - They may also benefit from Economies of Scale.

- [10] - Efficiency, of course, can be a very bad thing when a pollutant is introduced undetected, since feedback is slow. This is why we have the Andon Cord.

- [11] - Google Cloud, 2023 State of DevOps Report.

- [12] - For instance, did you know that temperature is an important factor in the correct forming of a biscuit?

- [13] - You may think this unlikely, but try searching online for “famous food product recalls” - it’s quite eye opening. You may imagine that the samplers and quality control will catch this, but the number of product recalls suggests not.

- [14] - We can argue whether this should happen (autonomic systems), but I continue to see this problem occur.

- [15] - I've seen teams working with modern technology and release practices unable to reap their rewards, simply because a downstream team was siloed, constrained by manual practices, and excluded from regular and meaningful interactions. Any strategy to increase the speed of technical delivery (for example to gain Fast Feedback) was hamstrung, both by the lower capacity of the downstream team, and the manner in which they completed their work, and drove the technology teams back towards a batch delivery model, thus creating business risk and competitive concerns.

- [16] - It's quite common to execute an ETL only once or twice a day, meaning data is stale, or inconsistent during that period.

- [17] - The long waits being one, followed by the queueing of work in the batch.

- [18] - You can't expect people to retain information not meant for their immediate attention. Information should be Just-in-Time (JIT). There’s a temporal aspect to knowledge sharing that affects Shared Context.

- Assumptions

- Batching Bias

- Bias

- Complexity

- Consistency

- Context Switching

- DORA Metrics

- Efficiency Bias

- Expediting

- Fast Feedback

- Gold Plating

- Isolation

- Loss Aversion

- Project Bias

- (The) Seven Wastes

- Silos

- Stakeholder Confidence

- Surprises

- System-Level Thinking

- Theory of Constraints (ToC)

- Value Stream

- Waterfall Methodology

- Work-in-Progress (WIP)