SYSTEMS THINKING

Work-in-Progress...

SECTION CONTENTS

- Assumptions

- Functional Intersection

- Domain Pollution

- Real-Time Reporting

- Build v Buy

- Entropy

- (the) Many Forms of Coupling

- Technical Debt

- Separaion of Concerns

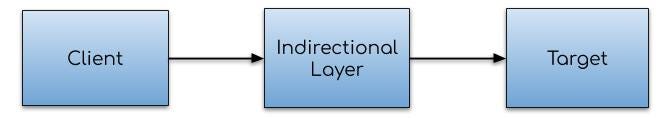

- Indirection

- Refactoring

- Feature Parity

- Technology Sprawl

- Object-Relational Mappers (ORMs)

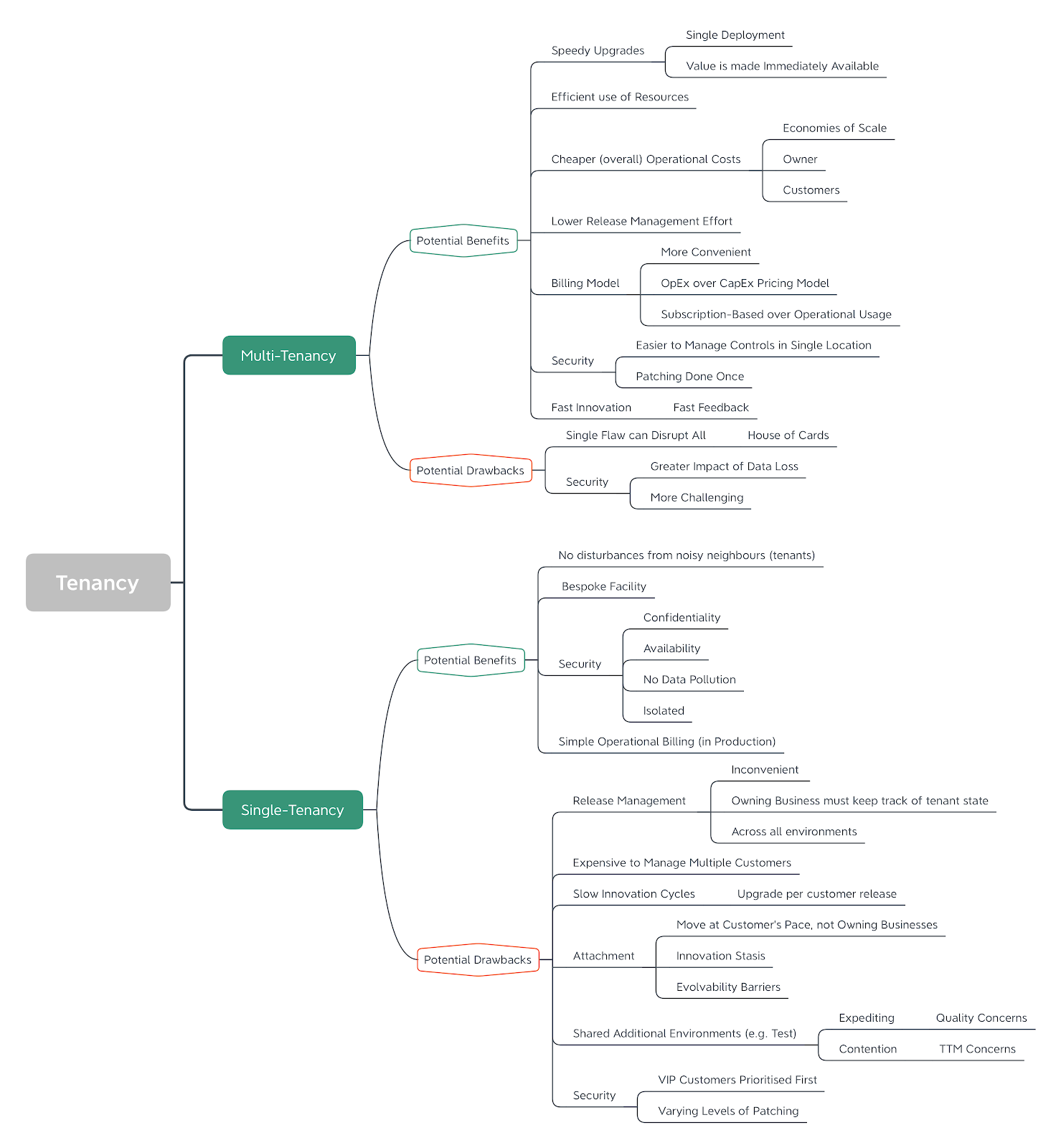

- System Tenancy

- Containers & Orchestration

- Feature Flags

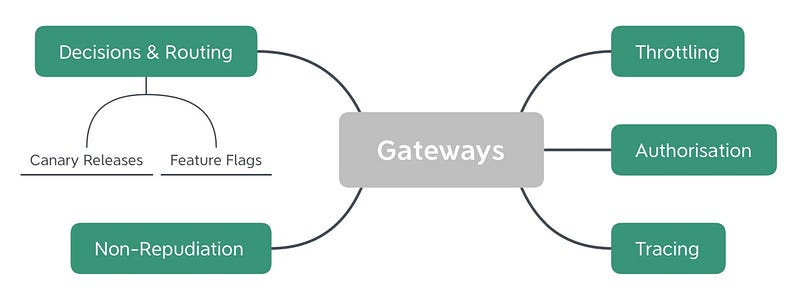

- Gateways

- PAAS (PLATFORM-AS-A-SERVICE)

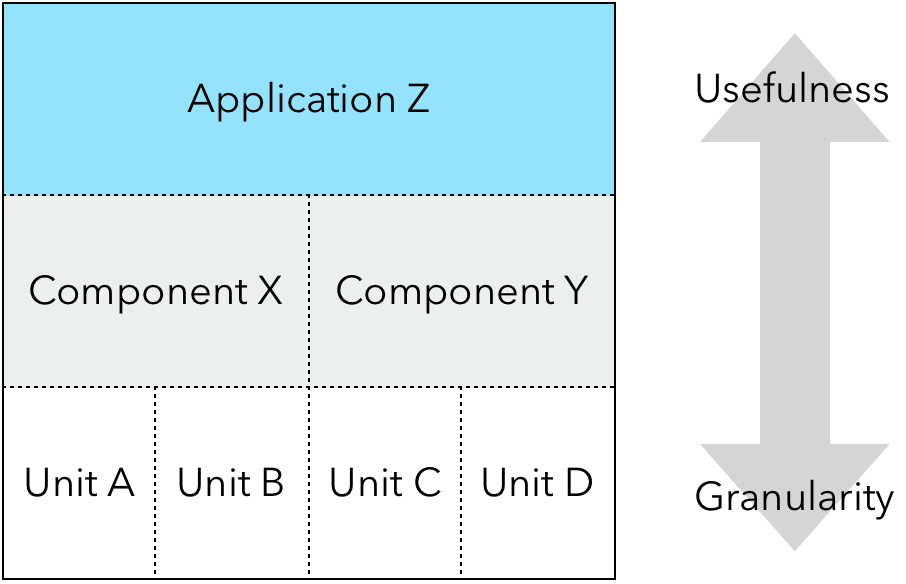

- Test Granularity

- Granularity & Aggregation

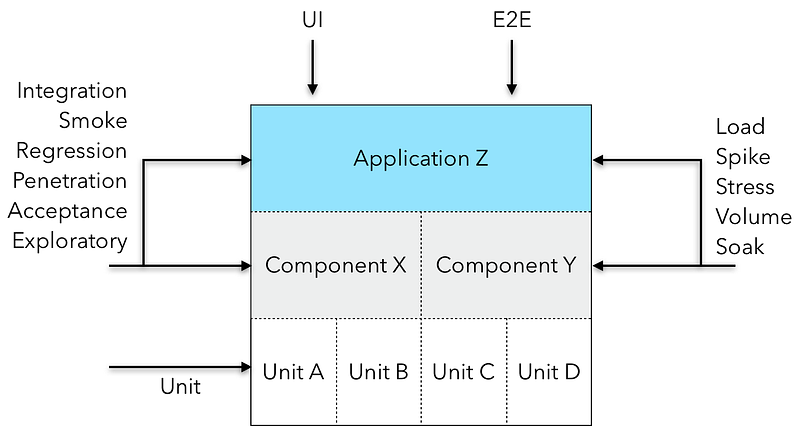

- When to Test?

- Types of Testing

- Regression Testing

- Flooding the System

- Smoke Testing

- Exploratory Testing

- Performance Testing

- Volume Testing

- Load Testing

- Stress Testing

- Endurance (Soak) Testing

- Spike Testing

- Cybercrime

- Penetration ("Pen") Testing

- Unit Testing

- End-To-End (E2E) Testing

- Integration Testing

- Acceptance Testing

- SLAS

- Synchronous v Asynchronous Interactions

- The Cloud

- Serverless

- Cloud Agnosticism

- Denial-Of-Service (DoS)

- (Distributed) Transaction Tracing

- IAAS, PAAS & SAAS Responsibilities

- Infrastructure-As-A-Service (IAAS)

- The Weakest Link is the Decisive Factor

ASSUMPTIONS

Assumptions are a root cause of Change Friction and Surprise. Assumptions are the remnants of a decision (verbal or technical), that may undermine the overall success of a feature.

At all levels of product development we make many assumptions, including:

- The level of skill and understanding of key decision-making stakeholders.

- How responsibilities are aligned within each software unit. One way to understand the level of Assumptions (potential coupling in software), is to look at its dependencies. Generally, the greater the dependencies, the more Assumptions exist about its execution environment, context, or constraints (e.g. temporal Availability).

- The assumed level of security (Perception) in a software component compared to its actual.

- The likely success of introducing a product into the market. You can do all the market research you want, but you'll never truly know until you do it.

- The types of coupling (e.g. temporal) employed at the software, or business level.

- The Availability of other systems.

- That correct (and accurate) information has been supplied, at the right time, to the right people, to successfully undertake a task (JIT Communication).

- That the customer's Perception of quality mirrors your own.

- The customer truly understands the proposed delivery plan.

- Understanding all the nuances of a new technology is seamless and quick to an uninitiated team (a common mistake).

- That a chosen technology is truly agnostic.

- 100% Test Coverage equates to zero bugs.

- Buy is always cheaper than Build.

- Connectivity assumptions - i.e. "I can connect this to that". This may not be true. For instance, a Governance or Security function may stop you from doing so.

- Scaling assumptions; i.e. "System A will scale to my needs, so I don't need to test that assumption." Possibly, but I'd label that a risk.

Individually, each assumption seems manageable, but when viewed in its entirety, we have a Complex System, exposing a myriad of minutiae that makes second-guessing impossible.

I categorise Assumptions into four areas:

- They are correct and require no remedial action. These have positive outcomes.

- They are embarrassingly wrong, but will be quickly noticed due to their inaccuracy (and thus, are relatively inexpensive to resolve).

- They are entirely wrong, yet insidious, and remain undiscovered for years. These are highly problematic as they infer the inaccuracy of a foundational decision that (a) has remained so for a long time, and (b) that remedial action may no longer be possible (e.g. due to high expense). Failing tend to be architectural decisions, such as poor security, vendor coupling, business logic in the database, overuse of a DB triggers strategy, integration by data duplication, never undertaking performance tests.

- They will seem unimportant, and thus will be ignored, but combined with other seemingly innocuous decisions, accrue over time into something more dangerous (like little fish that take many small bites from you). This might include: a bottom-up API design, no clear versioning upgrade strategy, failure to encrypt sensitive data, client-dictated upgrade timelines, Domain Pollution. Many developers tend to focus on solving immediate problems, and don't necessarily appreciate that a single, seemingly innocuous assumption can significantly impact a system, and thus, a business.

Assumptions tend to be more wrong when dealing with an unknown quantity (i.e. the opposite of Known Quantity). However, that should not dissuade us from change, else we fail to evolve!

To some extent, Assumptions can be countered with:

- Feedback cycles. By regularly testing the accuracy of an idea and feeding it back in, we can quickly identify gaps in thinking.

- Continuous practices such as Continuous Integration (CI), Continuous Delivery (CD), and Continuous Learning. It’s one reason why CI and CD are so popular; they expose Assumptions sooner.

- Agile, DevOps, and Cross-Functional Teams. All these are approaches to increase collaboration across a more diverse range of people, with varying skill-sets. Assumptions are caught sooner, often before any significant effort is undertaken.

- Safety Net. Techniques like sprint Spikes are a form of Safety Net that test the veracity of an assumption. Quality test automation reduces flawed assumptions that a change works.

- Prototypes. Placing a basic prototype in front of your customer early provides vital feedback about how something should function (and look). This reduces the likelihood of incorrect assumptions.

- A flatter employee hierarchy, where everyone contributes with valuable insight to make more accurate decisions.

FUNCTIONAL INTERSECTION

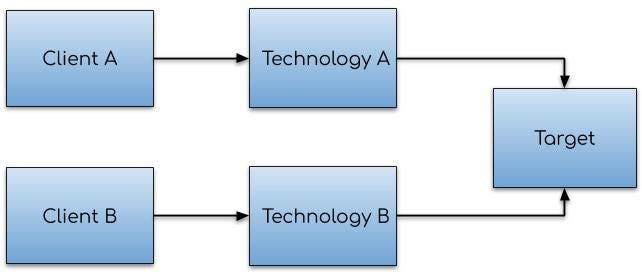

When looking at any significant systems integration (including the Build v Buy option), it’s important to consider Functional Intersection.

In the context of systems integration, Functional Intersection indicates the amount of functionality (or behaviour) that (fundamentally) represents the same concept, across two distinct systems. It is a common cause of Technology Consolidation headaches.

Consider the figure below, showing two systems being considered for an integration project (A is your existing system, whilst B is the one under inspection).

Small functional intersection between two distinct systems

In this case, we have a small intersection between System A and B.

FUNCTIONAL INTERSECTION IN RESPECT TO SYSTEM SIZE & COMPLEXITY

The size and complexity of the two systems being considered for integration is also important. Typically, the larger they are, the more complex there are, and thus, the greater the number of Assumptions they contain. This suggests an increase in the number of integration tasks required.

Why even care about the intersection, and specifically its size? Well, to generalise, a large intersection infers that each system must know a lot more about each other than a smaller intersection, and this may have unexpected and sometimes damaging results.

Functional Intersection often leads to data duplication, by duplicating of domain concepts across systems (we must synchronise the same domain entities across both systems for all changeable events; i.e. create, update, and delete operations), and thus, a duplication of integration tasks. The problem is that all this duplication has little to do with business needs, it’s simply to meet system expectations (Satisfying the System), and that leads to the Tail Wagging the Dog syndrome; e.g. technology dictates the business’ direction to travel.

INTEGRATION == COMBINING

When we discuss “integration”, we’re really describing the joining or combining of multiple systems (or subsystems) together to form (typically) a larger, more functionally-rich software solution.

Consider the following example. Let’s say both systems A and B (from the earlier figure) are e-commerce solutions. We are actively using System A, but have found it lacks some key features. The business has identified an opportunity to better engage web customers to increase spend, by providing them with a richer and more immersive product catalogue experience. We’re undertaking a due diligence to identify the right strategy (Build v Buy).

Before continuing it's worth mentioning a key oft-neglected point. We already have existing sales coming through System A‘s order processing subsystem (which our business is too tightly coupled to, to change), so we must also continue to support products in system A. In Layman terms, that means we must mirror the same product in both systems.

Now, to successfully reflect one entity in another system, we must handle all changeable events (events that have a state change on that entity). For a product (and I’m underselling this too), must support the following actions:

- Create Product.

- Update Product.

- Delete Product.

That’s three events. But remember, since both systems need the same products (one for a rich user experience, and the other to process orders), we must reflect the same changes. That’s a duplication of effort.

Thankfully, in our case, the intersection seems relatively small (a good sign), indicating a relatively clean integration. However, there’s deeper considerations around dependencies.

Let’s say the business had decided that System B’s Payments solution (it enables us to offer additional payment capture options on top of System A) was also impressive, and they’d like to incorporate it into System A and sell it. The thing is, they still want to keep aspects of System A’s Payment solution too (it’s currently being used by some significant customers).

Again, we undertake our due diligence; this time not only analysing the desired feature, but any dependent features it uses. We identify the following dependency hierarchy:

- Payments (in both system A and B)

- Carts (in both system A and B)

- Customers (in both system A and B)

-

Products (in both system A and B)

- Catalogue (in both system A and B)

- Carts (in both system A and B)

Again, we’re Satisfying the System - to incorporate one function, we must satisfy every other function dependency. For example, to use the Payments feature, we must ensure we’ve stored a Customer and managed it through a Cart, and added a Product to a sales Catalogue.

MASTER/SLAVE

The duplication of domain concepts across different systems (boundaries) also raises the notorious Master-Slave question. We get cases where system A is the master of some concepts, and system B the other. In the worst case, you may have two masters for the the same domain concept, but offering different consumer flows. Apart from the massive system complexity this causes, it may also cause cultural tension as teams lose sight of business intent and become a slave to the system.

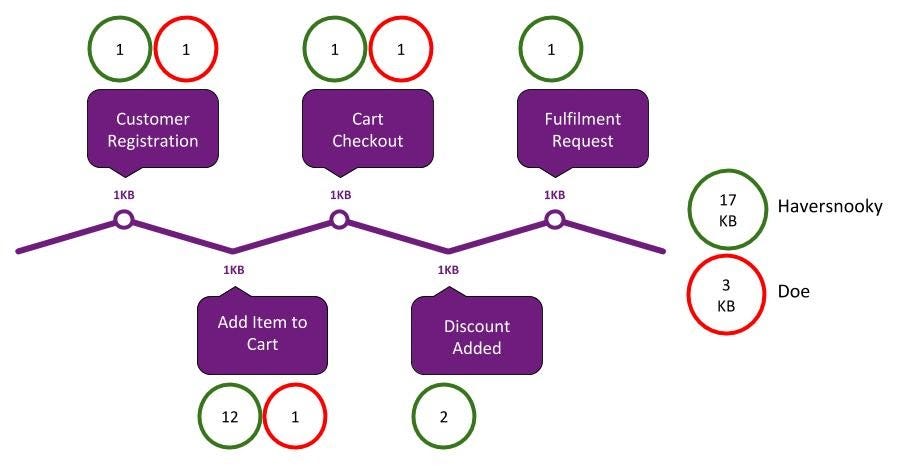

The Functional Intersection for Payments now looks very different; see the figure below.

New functional interaction

It is significantly larger, and more complex than the earlier case. It also means we must synchronise across every entity:

- Product (create, update, delete).

- Catalogue (add, move, delete).

- Cart (create, add, remove, clear, checkout).

- Customer (create, update, forget).

- Payment (create, update, refund).

I make that a rather hefty 34 integrations (17 integrations * 2 systems).

That’s far less appealing, and potentially leads to Integration Hell. What might have been a few weeks of effort has now turned into a quarter or two. Is there still a competitive advantage of undertaking such a lengthy and risky integration?

I’ve witnessed poor Functional Intersection in (at least) three different contexts:

- Attempt to introduce an open-source product into the existing stack. Was unsuccessful, and not attempted due to integration effort.

- Introduce an existing internal (legacy) system to plug the functional gap. Was done, but lead to duplication of work, throwaway work on a legacy system, increased release and deployment effort and time, scalability and resilience concerns, productivity concerns.

- Use data replication techniques to share domain concepts across multiple systems. Massive complexity, pollution of master-slave concept, Domain Pollution, cultural concerns.

I haven’t yet seem a satisfactory way to handle the integration of large, tightly-integrated systems.

FURTHER CONSIDERATIONS

DOMAIN POLLUTION

Domain Pollution is often the result of poor software development practices, where one (or more) domains assumes another’s responsibility. It's relatively common in monolithic applications, and exposes significant system failings. The figure below shows an example.

Example of Domain Pollution

This system has five distinct domains, D1 to D5. Note that all domains use D5’s data (I have only represented D5’s dataset, but it's likely the other domains also manage their own datasets too).

This breaks the Single Responsibility Principle - a fundamental software structuring principle. Based upon Single Responsibility Principle, D5 should only interact with D5’s data. However, D1, D2, D3, and D4 have also assumed a responsibility of D5 and thus, have hoodwinked it. Domain Pollution has hampered D5’s evolution, further causing innovation, security, and scaling challenges.

SCALABILITY

"Just join domainA and domainB's tables, and you've saved yourself a database trip."

Beware! This argument suggests that you'll improve scalability by joining (coupling) domains together, since only a single database interaction is required (database interactions are generally expensive).

This is true, and false. You probably will improve scale... but only to a point. This advice advocates a vertical scalability strategy over a horizontal scalability strategy (the stronger of the two); thus, opting for the weaker alternative for the sake of productivity.

The join argument makes a dangerous usage Assumption (i.e. coupling). One domain has assumed another domain:

- Is always accessible, and available.

- That data will always reside within the same partition.

- That the same technology/vendor is suitable and will be used for both domains.

The SQL joins have:

- Tightly-coupled the domains to a database type (e.g. relational).

- Tightly-coupled the domain to a specific vendor (e.g. Oracle, Postgres).

- Made an assumption about where the data resides (i.e. on the same instance/machine).

EVOLVABILITY

Evolvability is a key, oft-forgotten, architectural quality. It indicates the ease with which a system (or part of a system) may evolve. In a scenario where Domain Pollution has taken over, we are severely hampered in our ability to modernise. To make any change, not only must we identify every area of change, we must also:

- Collaborate with all the domain experts to understand (and contain) the Blast Radius.

- Plan the capacity for the change.

- Fix/replace broken unit tests.

- Restructure (potentially) large swathes of code in the affected areas (e.g. passing an id through a set of layers to the persistence mechanism).

- Recompile and redeploy the domain.

- Regression test a large area of the system, and any of those area’s dependents.

- Load test it.

This is Change Friction. I’ve seen cases where only an individual (a “Brent” in The Phoenix Project) can resolve this friction, and that individual is unavailable for the next two months. This is a terrible situation. It affects your Agility and (potentially) Brand Reputation, and can leave your customers in a precarious position. The business may face a dilemma; do they let their customers down and lose their custom (possibly also suffering reputational damage), or do they choose to hack further (proprietary) changes into the solution and exacerbate evolutionary issues?

FORMS OF DOMAIN POLLUTION

Domain Pollution tends to be caused by the introduction of incorrect Assumptions in software. Several forms of domain pollution exist:

- Assuming another’s responsibility. One domain embeds a responsibility belonging elsewhere. I often see this anti-pattern applied in unexpected places; e.g. a developer copies some logic from one domain, and pastes it into another domain, and now there’s two polluted domains and a duplication of logic (a code smell).

- Assuming another’s data. Foreign key relationships actually tightly couple two domains together (e.g. the cross-domain SQL pollution I described above), incentivises developers to introduce Domain Pollution into code, and may hamper Evolution, Scalability, and Security.

- Exposing consumers to internal details. In this article on API design, I described the importance of the "Don't Expose your Privates" practice (Don’t Expose your Privates). In this case, an API exposes unnecessary details to external consumers, who become tightly coupled to those details. This adds (avoidable) complexity to an API integration, and also reduces Evolvability.

REAL-WORLD EXAMPLE

Many developers tend to focus on solving immediate problems, and don't necessarily appreciate that a single, seemingly innocuous, assumption can significantly impact a system, and thus, a business.

“Clean” domains can protect Business Agility and Brand Reputation, with flexible, evolvable, scalable, and secure software. Pollution can do the opposite.

I witnessed the ultimate form of Domain Pollution whilst analysing potential replacements to a large, monolithic application. The common approach to solving this problem is to break the monolith into smaller units (typically microservices), by identifying the seams and strangling (the Strangler pattern) each, one at a time.

However, I found this approach ineffectual due to significant domain pollution. Domain Pollution hindered evolution, and (to me) was the key technical factor for the product's demise. Change Friction dictated our direction, and resulted in the construction of a new (costly) product.

Some good approaches to counter Domain Pollution include:

- Encapsulating the data store, so others can’t access it.

- Always use an interface (e.g. REST API), and never undermine it through the circumvention of the data owner.

- Where practical, force the issue. For instance, by intentionally selecting to use a different data storage technology per domain.

- Employ user privileges (Principle of Least Privilege). Note that this approach has a positive side-effect as it also protects each domain's data from “polluted domain injection attacks”.

- Drive API design from the top-down (i.e. Consumer-Driven APIs), not the bottom-up. Let consumers drive flows, and models (if possible). Consider the need, name, and purpose of every data field before exposing it.

FURTHER CONSIDERATIONS

- See: https://medium.com/@nmckinnonblog/api-integration-manifesto-8814dbc7261a

REAL-TIME REPORTING

I've worked at several organisations that reported directly off the transactional database. Whilst it meant information was highly accurate, it also presented the business with some significant problems; namely:

- Scalability - labour-intensive reports reduced the system’s ability to scale for important customer interactions (including on-boarding and payment processing). Whilst reports are important, they are a side plot to the main story - to make a profit from custom. When less important processes dictates the capabilities of higher priority processes, we have a problem (Tail Wagging the Dog).

- Availability - running heavy reports can (I’ve seen it a few times) cause the transactional system to fail (Self-Inflicted Denial of Service), preventing access to legitimate users.

- Evolvability - one of my biggest bugbears. When the reporting scripts are tightly coupled to transactions data structures (and specific technologies), it becomes almost impossible to evolve (Domain Pollution).

- Security - in order for a report user to be effective, they’re often given higher access privileges, sometimes to the entire production database. This goes against a fundamental security practice (Principle of Least Privilege), and - if the account is compromised - could lead to a highly effective SQL injection attack.

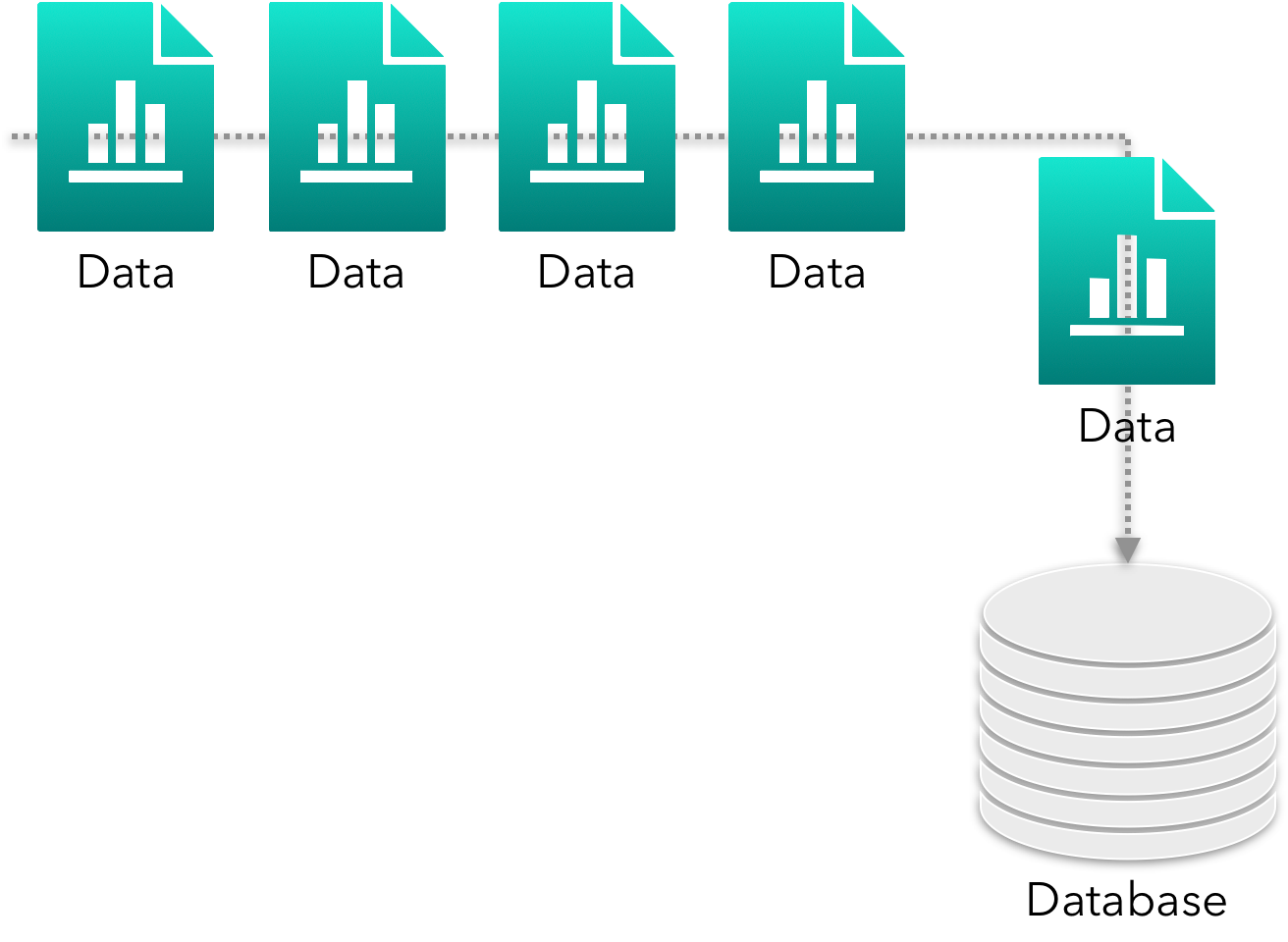

Most modern business systems I’m aware of don’t report off the transactional database (although I suspect there’s sometimes a case for it). Several approaches are available, but most revolve around extracting (or pushing) and duplicating data to another data store (such as a data warehouse or data lake), and pointing reporting tools at it. This approach makes minimal contact with the transactional database, enabling us to respond effectively to heavy transactional user loads, whilst business-oriented reports and metrics are processed elsewhere. Typical push mechanisms include an ETL (extract a delta and push elsewhere), and event-based pushes (my preference), using technologies like Queues & Streams.

Whilst transferring reporting work off the transactional data store is (generally) a good approach, it does add some complications. ETLs may still cause negative performance effects on a transactional data store if not carefully controlled; for instance, by the delta extract. Event-based pushes tend to happen at the business tier (but not always; e.g. some NoSQL databases support event-based pushes; relational databases have triggers), that may still negatively impact the production system. These approaches lessen the impact, they do not mean no impact.

There’s also the question of accuracy and consistency. None of these duplication approaches quite gives us real-time accuracy, and (depending upon the context) may occasionally see a disparity of results. Generally, though, it’s sufficient for most. It also brings complexity into play, as generating the data to report on is more challenging; we must handle all changeable events (inserts, updates, and deletes). Of course, running reports directly off the transactional data store doesn’t require this step as the data is already available.

REPORTING IN A DISTRIBUTED WORLD

It’s worth noting that reporting on data across a distributed (potentially Microservice-based) system is very different to the well-worn Monolith path, for two reasons:

- Each Microservice controls access to its own domain data, and doesn’t allow anything other than itself to directly access it. Remember, to prevent Domain Pollution and support Evolvability, we need all interactions to be controlled from the microservice’s (REST) interface.

- Technology Choice per Microservice. If we allow each Microservice to select the appropriate data store technology for its needs (i.e. the right tool for the job), the end result may be a sprawl of disparate data storage technologies for one product. This makes a pull model (ETL or direct reporting access) impractical; you’d spend all your time finding the data, then integrating, rather than reporting. This approach strongly hints at an event push model.

FURTHER CONSIDERATIONS

BUILD V BUY

There’s always some friction between Build and the various forms of Buy, along with a common misconception that Buy is always financially superior to Build.

Consider the following example. You are employed to deliver a software system for a lucrative new customer. Let’s say you already have part of the solution, built out by an internal team, that meets sixty percent of the customer's functional needs.

It’s your job to decide how best to satisfy the remainder. The following options are on the table:

- Build out the remaining functionality using the internal team.

- Buy/Partner with a supplier to provide the remainder, giving them a cut of any profits.

- Reuse a legacy system.

- Use an open-source solution(s).

There’s many nuances to consider, and in the end, only you and your team have sufficient appreciation of your context to decide (i.e. external entities should guide you to the right decision), but I'd suggest the following considerations are a good place to start:

- The five business tenets (TTM, ROI, Agility, Sellability, and Brand Reputation), described earlier. Which ones are pertinent to this decision?

- How are these decisions being made? Are they made to satisfy immediate or long term needs.

- Capacity and appetite. Does your business have the capacity and appetite to undertake the work yourself? Is the work pivotal to your business, or just a sideline/subplot to the main story?

- You’re looking at the entire product lifecycle, not just an initial integration.

- Control. How much (and what type of control) do you require over the solution? Is the entire solution built, or does it require regular changes?

- Delivery speed. Can you, or the partner, deliver at the speed (and reliability) required by your customers?

- Reputation. Will a vendor relationship affect your reputation in the eyes of your customers (Perception)?

- Integration effort (particularly around Functional Intersection). What is the real integration effort, not the perceived effort? How often must you reintegrate, and what’s the cost of each?

- Who dictates when a change affects you? What sort of pre-warning do you get?

- Service Level Agreements (SLAs). What agreements around performance, scale, availability, security, and bug fixes are in place? Do they meet your needs?

- Consistency. If you provide either a UI or APIs facility for your customers to access your services, can you still provide a consistent experience if you buy it in? If the vendor supplies APIs, would you wrap them? What integration effort is required to do that? If the vendor supports a UI, cannot be rebranded?

- Expertise. What level of domain expertise do you have? Is it a highly complex domain, or one that can be quickly learned (e.g. would you really want to build a tax solution without having a complete understanding of the domain)? Does your business want to have that expertise?

- Evolvability. Is the repeated modernisation of the product important to you and your customers? What promises does the vendor make on this? For instance, if the software isn’t regularly modernised, it may lead to security vulnerabilities creeping in (and thus Branding Issues).

- Regression and verification. Who manages the regression testing of any changes? Are they automated?

- Test sandbox. I’ve seen numerous cases where test integrations are executed on a production system because no test environment is available. Testing using a production system may add significant risk and cleanup costs.

- Multi-tenancy. Although the standard Multi-Tenancy approach has many merits, particularly for the solution owner, it also has some difficulties, particularly around (a) single point of failure, and (b) noisy-neighbour interference; where other customers interfere with your solution, potentially causing slowdown or Availability failings. The converse is that you get new features quickly.

- Charge model. If Buy, what charge model is being used? One-off, free, licensed, per transaction, per subscription?

- Is the new system a direct competitor and replacement for yours? This raises product capability questions that may lead to political infighting. Is that important from a Sellability perspective?

Let's look at the options.

BUILD

Why would you opt for the Build option?:

- You have, or want, the expertise.

- There is no suitable alternative.

- You can’t hide a Buy decision. Potential customers may request information on all your suppliers and vet them prior to contractual agreement.

- You have (or can source) the requisite capacity to perform the work.

- There is value (i.e. profit) to be made from building it.

- It is expected by a customer, or any agreement to use another vendor is too challenging to explore.

- Control is important to you. Whilst Buy might work initially, you know each subsequent change is not guaranteed.

- You can fit the integration nicely with the remainder of the system.

ADVANTAGES

- You control your product’s direction.

- You can manage costs internally.

- It becomes a sellable feature.

- You gain expertise in it.

- You can make it fit, and Consolidation is no issue.

- Delivery, and change speed, are within your control.

- Operational costs are within your control.

- You may be able to claim tax credits for it.

- You can control the evolution of the software; e.g. manage security.

- You can control quality.

- You can control feedback loops for continuous improvement.

DISADVANTAGES

- Requires an initial expense.

- There is risk if the feature is not wanted.

- It may further blur the lines of the business’ specialism.

- You must manage (deploy, scale, and secure) the software yourself.

- May require domain learning.

- Availability of resources.

- It diverts your resources from other (potentially profitable) activities.

BUY/PARTNERSHIP

Why would you opt for the Buy option?:

- You are comfortable with handing control over to another.

- You have a strong relationship with the solution owner.

- You are comfortable with the solution’s quality.

- You don’t have, or don’t want the expertise.

- You don’t have the capacity to build it out (although this has some caveats).

ADVANTAGES

- If they are a specialist, they should be capable (at least from a domain perspective).

- The functionality (in the main) already exists.

- You minimise the financial risk if the solution is unappetising to your customers.

- Change, distribution, and evolution becomes another’s problem.

DISADVANTAGES

- You may sacrifice Control for features.

- The vendor’s feature-set may provoke arguments over ownership of value within your own organisation.

- The vendor may rank you lower in the pecking order than other competing customers.

- Consistency. It may not be possible (or practical) to present a consistent experience to your customers.

- Some integration effort still exists (see Functional Intersection). You may also be forced to wrap all interactions to support Evolvability.

- You may need to broach the subject with existing/potential customers.

- Ownership. Where does it stop? It’s not just development, it’s testing too.

- Reporting and business metrics. You may not have the detail you can get from internal business systems.

- GDPR - you’re now depending upon another system to manage sensitive information. You may also be required to indicate the partner system as part of the customer onboarding journey.

SAAS BUY IS MORE THAN MANAGEMENT COSTS

It’s easy to imagine the only costs of a Buy option - such as SAAS - only being the monthly management costs, but that's rarely the case. If you're integrating two systems together, you must pay for the integration work.

If there is a Functional Intersection, the only way to consolidate with an external partner is to reduce your own feature set; something most business owners would be concerned over. So, we end up with a non-consolidated mess.

REUSE LEGACY SYSTEM

Some businesses have several product iterations (if they’ve failed to Consolidate), which are often richer in functionally than their modern counterparts. It may be possible to reuse one of these products.

Why would you opt for the legacy option?:

- The functionality already exists.

ADVANTAGES

- Increased ROI on that legacy system.

- It’s a Known Quantity (whilst a new vendor/partner system may not be).

- It may be a better business fit than a vendor/partner solution.

DISADVANTAGES

Whilst this approach seems quite appetising (I’ve seen it used many times before), it has some fundamental flaws:

- Legacy system (probably) means Monolith, and that increases the likelihood of a Functional Intersection.

- There's no practical way to consolidate (Consolidation) the systems; the legacy is too old to be worth decoupling, the newer system can't lose features in favour of the legacy system.

- The legacy system has, in all likelihood, been built using technologies which are now viewed as antiquated and no longer supported; any changes to the legacy to support the new system translates into poor TTM (productivity, contention finding skilled resources, poor practices, long release cycles, unfriendly to the cloud), poor ROI (fine if your just using it as is, less so if you must change it), and Brand Reputation concerns (e.g. security vulnerabilities). You’re unlikely to want to invest in rectifying problems in a legacy system.

- Joining large systems together often results in increased complexity, which (due to Complex Systems), equated to reduced reliability and increased risk to Brand Reputation.

- Security vulnerabilities related to the legacy nature of the system; e.g. it's sitting on technology no longer supported and patched.

- Hybrid stack. This one's quite a challenge. You must manage two disparate technology stacks, treating it as one entity. Any attempt to use modern practices (e.g. continuous delivery) may be hampered by the legacy's lifecycle (months?). The lack of Uniformity also hinders understanding and causes Change Friction.

- More business coordination required. You're managing two disparate systems (and possibly teams), requiring a more formalised (heavyweight) project management approach.

- It revives the Legacy Mindset (e.g. Monolith, quarterly releases), and may also lead to others thinking this is acceptable behaviour when it should be discouraged (will it pollute the culture you've tried so hard to change?). We forget why we labelled it legacy in the first instance.

OPEN-SOURCE

The open-source solution has both similarities and differences to the other models. Unlike (for instance) a SAAS Buy model, an open-source solution is typically taken freely (including the source code) and deployed internally.

Why would you opt for the open-source option?:

- The requisite functionality exists, or most of it exists.

ADVANTAGES

- It's (generally) free.

- It can be modified to suit your needs.

- It's often been worked on by numerous persons, who vetted it (although there's still questions around this).

DISADVANTAGES

- The design and implementation technology are dictated to you; i.e. you might not have the skillset to understand (let alone modify) the source code.

- You're generally managing the software yourself, leading to the hybrid stack issues I described earlier.

- Many open-source solutions are no longer maintained. Whilst this shouldn't prevent you using the solution, it should raise questions about its suitability. Security vulnerabilities are the most obvious concern.

- Reliability and trust. Can/should you rely upon a solution that you may not trust? I suspect governmental bodies holding sensitive information shy away from software that they cannot guarantee the veracity for.

- What about licensing? Whilst most open-source solutions I've seen have royalty-free licensing, that's not always the case. You don't want to tightly couple your business to a solution only to later find you're liable for royalties.

- It may reopen the Consolidation question, along with product capabilities questions (i.e. if both systems do the same thing, which one do you keep?). This may lead to political infighting and cultural issues.

SUMMARY

Only you and your team have the context to truly understand the best solution for your needs. Consultants can offer guidance and advice, but in the end they haven’t lived and breathed in how your business (and it’s systems - both technology and practices) functions, and may not appreciate all of its nuances. As you see, the Build v Buy question is far more involved than outsiders may initially realise (we, as humans, tend to simplify things).

BUILD, BUY, DOMAIN SPECIALISTS, & CONSULTANCY FIRMS

I admit it, I'm a bit of a skeptic (and should watch out for Bias). But I think have good reason for it...

Repeatedly throughout my career I've seen work handed over to (supposed) specialists/experts, employed to provide a feature or level of service that there was no internal commercial appetite or capacity for.

Whilst it’s true that experts can offer good value for money, particularly when the domain is complex (managing tax springs to mind), or where they already have extensive experience and a stable product, I'm more skeptical of some big consultancy firms who take the work away then magically reappear months later with a (supposedly) highly scalable, secure, robust, testable, and maintainable (i.e. quality) solution.

Extensive experience does not necessarily correspond to a good, well-rounded solution (e.g. I’ve seen several cases where all the consultancy does is farm the work out to another unproven group of workers and expect the same result as if they were domain and technology experts; it doesn’t work like that I’m afraid) , and forms both positive and negative Bias and Perception.

FURTHER CONSIDERATIONS

- Functional Intersection.

- Consolidation.

- Integration Hell.

- Control.

- Antiquated Monolith.

ENTROPY

Everything suffers from Entropy. People (die), cars (malfunction), houses (fall into disrepair), the value of the words in this book (deteriorate, and lose context and meaning), empires (crumble), (supernovas destroy) the heavenly bodies, and software (degrades). Entropy even affects us if we do nothing.

Entropy in software can cause:

- Every change to be challenged (Change Friction).

- Evolvability and Maintainability challenges.

- Business slowdown (TTM, Agility), stagnation, and increased expense (ROI).

Entropy can’t be beaten, but its negative effects can be slowed; i.e. whilst death still comes, you have some control over the date of its arrival.

How may Entropy occur in software?:

- Waiting in Manufacturing Purgatory.

- Waves of new technology.

- Change.

- Architectural style.

- Failing upgrade paths.

- Doing nothing.

- ... any many others.

Let’s visit some of them.

MANUFACTURING PURGATORY

Remember, everything deteriorates over time. Not only is money lost the longer a work item sits in Manufacturing Purgatory (it’s unused, so there’s no ROI on it), but its value also deteriorates.

How can that be? I have two reasons to offer:

- Technology evolves extremely quickly. Something sitting unused, even for a few months, is potentially outdated with a more modern approach.

- Any important learnings about the value of a feature is impossible to glean because customers aren’t using it.

WAVES OF NEW TECHNOLOGY

(Some) New technologies make existing solutions seem mediocre by comparison. Cloud technologies are one example of this. Failing to embrace new technology may put your business behind the curve of your competition.

Consider the following. I once heard an executive opine that the technology team should “make a decision and stick with it”.

Whilst, in some sense, that executive’s reasoning was right; frustration was causing them to provoke a decision ahead of a vital deadline. At some point you must place a bet.

Yet there’s two sides to a coin, and Entropy is a strong influencer. Forcing everyone to live with a (unproven) decision for the foreseeable future, regardless of the other opportunities that appear over the horizon is demotivating, has a whiff of tactics rather than strategy, and may hamper Evolvability, Agility, and eventually TTM, ROI, and Brand Reputation.

CHANGE

Almost any form of software extension or maintainance (without a countering Refactoring phase) can increase brittleness and Assumptions, reduce reuse, and exacerbate Entropy.

When additions are made to an existing code base, we tend to add responsibilities. Even if we adhere to Single Responsibility, we invariably increase the assumptions that a unit encapsulates, lowering its potential reuse, and therefore introducing Entropy (e.g. if we can’t reuse it, we’ll find another solution, or worse, build another to perform a similar job, and thus, we’ve stumbled into a vicious circle of Entropy).

ARCHITECTURAL STYLE

Some architectural styles are more prone to Entropy than others. For instance, I’ve witnessed Entropy set in within an Antiquated Monolith, where Domain Pollution had taken center stage. The tight-coupling caused by an over-polluted domain made it practically impossible to make any form of change in a safe or efficient manner, causing poor Stakeholder Confidence, which lead to Entropy, and finally the product’s demise.

UPGRADE PATHS

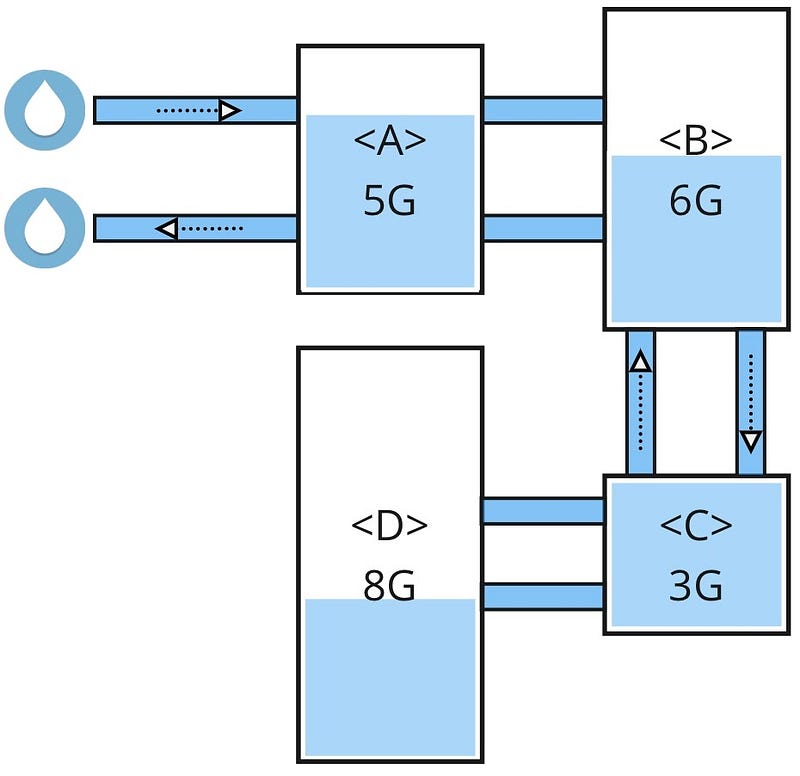

Failing to undertake regular platform upgrades also leads to degradation and Entropy. The software applications we construct are built upon layer after layer of dependency strata; from the libraries and runtime engines we directly rely upon, which sit upon platform engines (like the Java Virtual Machine), which rest upon Operating System libraries, which communicate with lower-level device drivers onto the device/hardware, and eventually are pushed onto a network (if network based). See the figure below.

Typicaly Dependecy Hierarchy

Any one of these dependencies has a shelf-life, and may require upgrading (e.g. to support better performance, interoperability, security), or even replacement, during the application’s lifetime.

Fail to upgrade, and you (may) risk technical qualities such as Evolvability, Security, Productivity, and Scalability, as support dries up, leading to an inability to support the five business pillars I focus on in this book.

DOING NOTHING

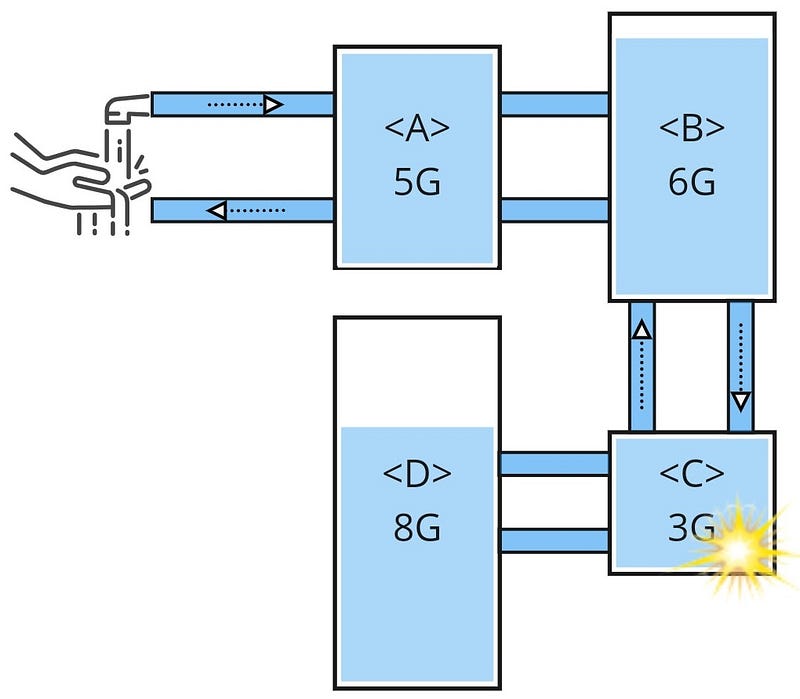

At the start of this section I identified the “do nothing” scenario as another form of Entropy. To help contextualise this, I’ve drawn up the following analogy.

Imagine you are sitting before a grand piano. Upon the piano rests a musical piece for you to play. It’s a beautiful melody that, when played well, can take your audiences breath away.

You begin to play it, but something keeps distracting you, and now and again you strike a few wrong notes, or miss-time it. You hear a few polite coughs from the audience indicating some are aware of your error. The distractions continue, and it becomes increasingly difficult to keep time, to the point where the music suffers and the whole situation is unrecoverable. You stop, turn around, and watch the last member of the audience leave, shaking their head in muted disappointment.

Returning to the technology context, playing the musical score represents the product’s lifetime; whilst new technology, technology upgrades, or security patches, represent the key notes. The audience represents your customers. You’re the (supposed) maestro. See the figure below.

Doing Nothing

Note that these are examples of technologies/techniques that may represent significant events in your product's lifecycle; it doesn't mean they're appropriate to your context; that's for you to decide.

If you miss one note (or miss-time it), things seem askew, but only a highly knowledgeable audience (i.e. the informed customers) will notice it. However, miss (or miss-time) enough notes, and the music will sound so awful, that no-one wants to hear it (i.e. your customers will source an alternate service), and the situation is unrecoverable (at least for that product). There’s no way to catch up, Entropy has set in, and the product is visited by the Grim Reaper.

APPROACHES TO REDUCING ENTROPY

We may reduce software Entropy by:

- Refactoring (a technology term for regular servicing, as you might with a car).

- Following continuous practices (i.e. immediate feedback and pivots).

- Regular technology upgrades.

- Regular (re)assessments of current and future states.

- Sound Encapsulation techniques.

- Application of appropriate Granularity and Separation of Concerns practices.

FURTHER CONSIDERATIONS

- Manufacturing Purgatory.

- Refactoring.

- Assumptions.

- Change Friction.

- Domain Pollution.

- Antiquated Monolith.

- Stakeholder Confidence.

(the) MANY FORMS OF COUPLING

There are many forms of coupling. The one thing they all have in common is their ability to impede change.

Almost everything in our world depends upon others to perform responsibilities we cannot (or should not) undertake ourselves. This is particularly true within Complex Systems, which most software systems are. Coupling relates to how (and why) one entity makes use of (or is aware of) another.

A good understanding of the forms of Coupling supports engineers to make better integration decisions, increase ROI (e.g. by building longer-lasting systems), and protects Brand Reputation (e.g. increased reliability).

COUPLING & ASSUMPTIONS

Coupling comes in many forms (described later). At a more fundamental level, each form represents an (possibly incorrect) assumption (Assumptions) about the operating environment a system functions within. Coupling is a common cause of Change Friction.

In common parlance, Coupling is typically described as being either:

- Tight (coupling) - a strong relationship or bond, where impacting one party likely affects the other. This generally has negative connotations.

- Loose (coupling) - a weak(er) (or less permanent) relationship, where impacting one party doesn’t necessarily impact the other. This generally has positive connotations.

LOSS AVERSION

Tight coupling may occur implicitly or explicitly. The explicit form (where you assess risk and make a judgement call) has one advantage - the thinking described in Loss Aversion can invite discussions around loss, where we can suggest suitable forms (and levels) of Coupling under those circumstances, or deploy countermeasures; i.e. it’s proactive and extends Optionality, whilst the implicit form tends to be more reactive, and may limit Optionality. This Optionality can have considerable benefit when the sky is falling down and you must look to alternate survival tactics.

COUPLING CONSIDERATIONS

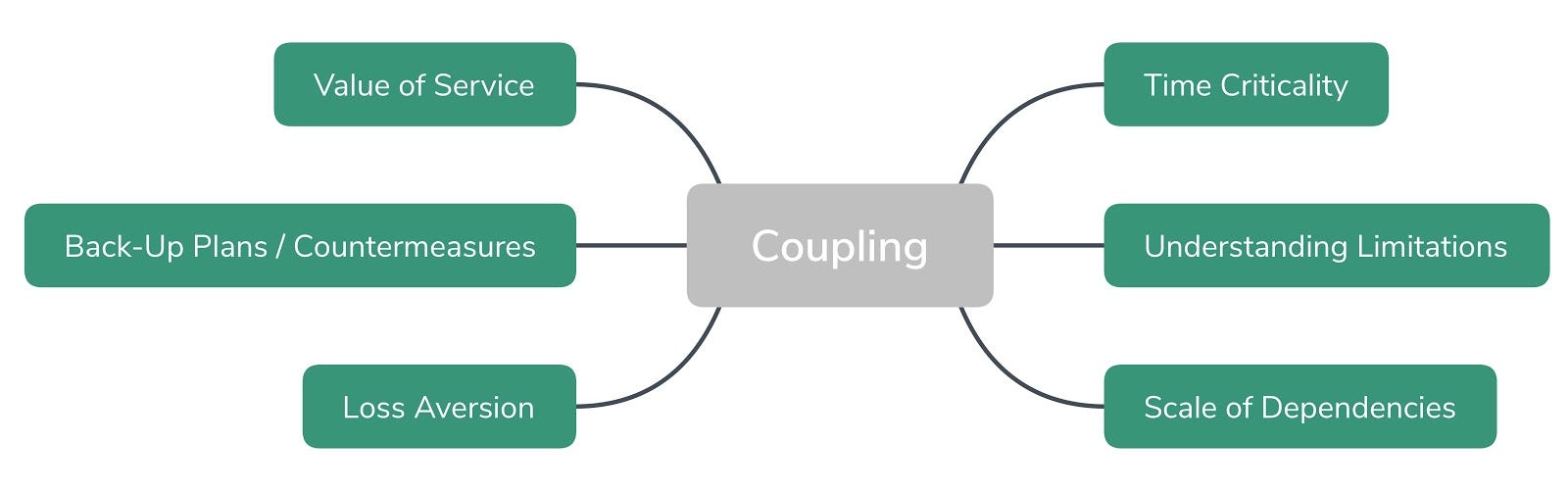

The figure below shows some considerations around Coupling.

Note how several factors may affect any coupling decision, including:

- Service Criticality (or Value). If customers are unable to access any part of the service due to a downstream failure, what effect will it have on your customers, and business? Will customers notice, care, complain, and/or seek recompense? Where does it fit in the MoSCoW scale? For instance, Netflix has the ability to present static recommendation content if the personalised customer response isn’t reachable; therefore it has less criticality than some other functions, and falls within the MoSCoW camp.

- Time Criticality. Does your system depend upon the availability of another system during a critical period? Or is the service only offered ephemerally?

- Loss Aversion.

- Scale of Dependencies. Is the entity being used already heavily relied upon by many others? Change coordination across a single dependent can be very different to managing (say) ten.

- Understanding Limitations. By understanding a dependent’s limitations, you can (b) increase awareness, potentially provoking the means to resolve that limitation, even in solutions owned by another party, (b) counter those limitations by providing an alternative back-up plan/countermeasures (see next point).

- Back-Up Plans/Countermeasures. Once you understand limitations (see earlier point), you can anticipate the perceived impact of tight coupling on qualities such as Reliability. This allows you to deploy countermeasures to potentially harmful situations, and protect Reputation.

ACE UP YOUR SLEEVE

An ace up your sleeve, in times of trouble, is a great asset.

The ability to flip over (route) to an alternative system, or utilise a different approach (e.g. providing static content is better than no content personalisation at all) at the first sign of danger both increases Stakeholder Confidence and provides both you and your customers with a Safety Net.

I’ve witnessed several good demonstrations of the strength of this approach, and several examples where a potentially lucrative deal was soured by the lack of one.

A back-up system need not be polished, or feature-rich. That’s not it’s purpose. It’s there to provide Optionality, enabling a business to continue functioning whilst other parts of a system are crumbling.

We might use a back-up system in several circumstances, including:

- Inability to scale to high demand. Say your business is built to function from immediate feedback (i.e. the synchronous model). Reports, metrics, customer feedback etc all expect immediate feedback. That may be your default, all-things-are-going-well scenario, but your alternative plan may be to switch over to an asynchronous model in times of trouble (you might argue that’s how the business should always operate, but we might be dealing with established systems, processes, and cultures). Whilst this approach may not suit every business stakeholder, or customer, I suspect most would see its benefits when things really do go awry (and you can always switch back to immediate feedback once the spike is over).

- Insufficient testing. Cases where insufficient testing (particularly environment) can cause reputational harm (e.g. introducing a security flaw into a new system with no back-out plan).

- Replacement of a unreliable/unproven downstream partner system. If you depend upon a partner, but they are a cause for concern, then you may deploy a countermeasure and route traffic there if things don’t go well.

TYPICAL FORMS OF COUPLING

Typical forms of Coupling include:

- Technology.

- Static Parentage.

- Temporal.

- Internal Structure.

- Honeytrap Coupling (e.g. by feature or service).

- API Coupling.

- Coupling through Reuse.

- Version.

- Environmental.

- Legacy.

- Operating System and Hardware.

- Single Point of Human Failure.

- Working Style (e.g. Waterfall).

- Locational coupling.

Let’s look at some types of Coupling in more depth.

TECHNOLOGY COUPLING

Software that is tightly-coupled to a specific technology faces Entropy and Change Friction. This is problematic when new, improved technologies are released.

Technology Coupling has several subcategories.

FRAMEWORK/PLATFORM COUPLING

Some objects become inextricably tied to their overarching management framework. In some cases this places evolutionary challenges upon one of a business’ most important assets; their business logic (i.e. how the business runs, what makes it unique in the marketplace).

For instance, embedding both the business logic and the API-related (integration/transformation/routing) logic within the same software unit introduces a framework dependency (i.e. an Assumption) every time the business logic is needed, reducing its potential reuse, and (more importantly) hampering evolution to more modern (generally, better) frameworks.

DECOUPLING TECHNOLOGIES WITH DESIGN PATTERNS

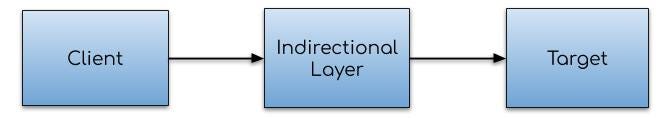

Design Patterns such as Remote/Service/Session Facade and Business Delegate exist for good reason - they separate responsibilities or concerns (through layers/tiers, and Indirection), enabling the independent evolution of unrelated/competing concerns.

Tightly coupling objects to a specific technology increases the effort involved in replacing that technology, reducing technical flexibility (Evolvability).

COMPONENT/SERVICES COUPLING

Tightly-coupled software components cause similar issues to object coupling; i.e. it’s harder to change a component with many responsibilities/dependencies, due to Change Friction. Domain Pollution offers a good example, when components (domain concepts) become polluted by others, they suffer from tight-coupling, hampering change.

A similar strategy for decoupling objects can also be used to decouple higher-level components from one another; i.e. Single Responsibility Principle.

STATIC PARENTAGE COUPLING

This form of coupling is based upon static dependencies on a particular type in a hierarchy; i.e. inheritance in the Object-Oriented world (the child inherits all of its parent’s traits).

To my mind, compile-time coupling offers many benefits. Compilers identify syntactical correctness (using static type-checking), and warn developers of these problems in advance of program execution (a form of Fail Fast). However, it can also lead to inflexibility. For instance, Inheritance in strongly-typed languages (e.g. Java) creates a static dependency from child class to parent. Being a compile-time feature, we may find this tight-coupling hampers change, as we must force a single change through the entire software development life-cycle, even when there are alternative approaches (although we may find this less of a challenge with modern practices such as Continuous Delivery).

STATIC COUPLING & FLEXIBILITY

I can think of three levels of flexibility, typically around static, deploy-time, and runtime dependencies.

Static dependencies are less flexible (and reusable?) as they require full-lifecycle changes. Deploy-time dependencies are typically more flexible than the static counterpart because the source code remains inviolate in alternative contexts, only the configuration changes. Runtime dependencies offer the most flexibility/reuse, as environmental Assumptions are made late on.

The cost of increased Flexibility and Reuse is Maintainability. “Extreme configuration” can sometimes make code difficult to understand, or debug, and configuration that typically resides outwith the component using it may decentralise cohesive domain responsibilities.

Increased Flexibility is one reason to favour Composition over Inheritance.

TEMPORAL COUPLING

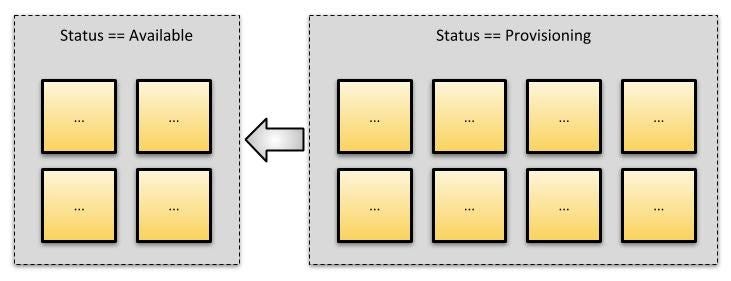

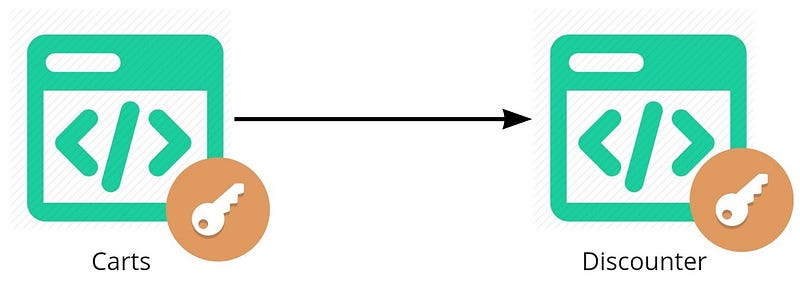

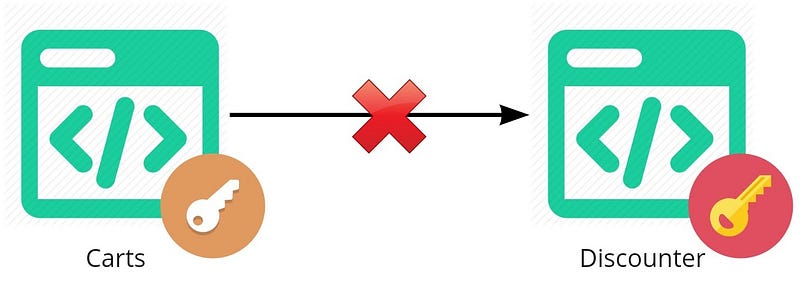

Systems and software units can be (and often are) tightly coupled by an assumption that the downstream dependent is immediately available and stable; i.e. there is a synchronisation expectation that both systems are online at exactly the same moment. This is temporal coupling; i.e. units are coupled by time.

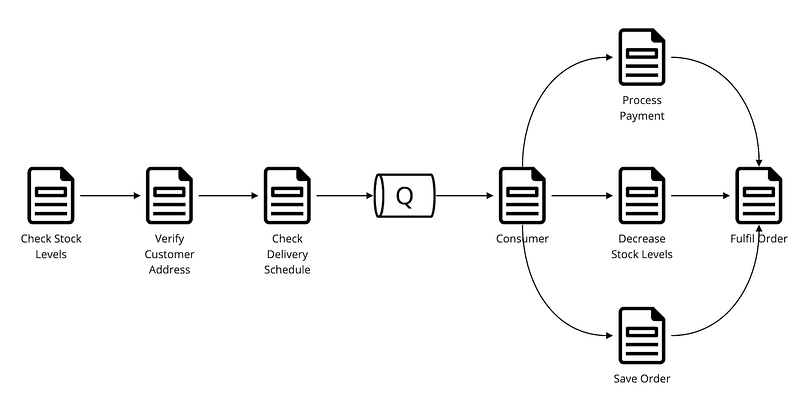

Synchronous Communication is a well-trodden, and relatively simple integration style; yet it isn’t appropriate in every scenario (such as for long-running transactions, where asynchronous communication is favoured, or when the end user need not wait for a response; e.g. bidding on an item). Asynchronous integrations are a key mechanism to break temporal coupling.

BREAKING THE TEMPORAL BARRIER

Several key indirectional technologies/approaches are on hand to help break Temporal Coupling, including Queues & Streams (and even Shared Databases; although I don’t necessarily advocate that!).

Not only do these technologies promote Availability, Reliability and Scalability, they do so by decoupling one system from the imposition of a time constraint by another.

It’s also uncomfortably easy for a business to also couple themselves temporally. For instance, if the business offers its customers live events (e.g. streaming golf), they tightly couple their business model (and its success), to their ability to provide/service content to their customers within a short time window. Failure to do so risks Brand Reputation.

TEMPORAL COUPLING AT THE SYSTEM LEVEL

Imagine you’re building a software solution to - on a monthly basis - credit a customer account to allow them to redeem it on any item in the catalogue (e.g. a book, or DVD). Off the back of the original event, the system may perform a number of additional actions; e.g. if the item is of type stock, then mail it out. It’s entirely possible that each of these actions is coded to only complete after checking the validity of a date.

Let’s look at how we might wrap the logic in a code:

1. if(nextCreditDueDate after LocalDateTime.now()) { 2. creditAccount(accountId, 1); 3. }Line 1 is key. It compares a domain-specific date-time value (nextCreditDueDate) with the system’s current date-time (often representing the geographical region the machine is in). It is a form of Assumption; the code is coupled by Temporal Coupling to the present (e.g. LocalDateTime.now()), making testing extremely challenging.

Why? There’s no easy way to (system) test this path at runtime. For instance, what do you do if:

- You need to test what will happen to a specific customer’s account six months from now, when an offer expires?

- You must replicate a bug that occurred 78 days ago at 10.04 a.m? Accuracy with date/time may be critical to your business model; e.g. what happens to accounts with the 29th as the credit day, on Feb 29th of a leap year?

- You need to understand how the entire system should/will behave when the customer is due credit for three months (due to some other internal failure) and the logic above is executed? Will they get one credit, three credits, or will it fail with some peculiar error? Is that what should happen?

It’s unfair to expect a tester to set up test data to reflect the current date/time every time it’s needed.

Of course, it’s probably worse than that! A real system modelling a domain such as this, is probably strewn with logic of this sort. The impracticalities of building robust test data introduces the need for a time machine, enabling us to easily move time back and forwards to test out different scenarios. An alternative approach might be:

1. if(nextCreditDueDate after timeMachine.now()) { 2. creditAccount(accountId, 1); 3. }In this case, the date-time validation is managed by the timeMachine; a service that wraps identification of the current date/time, enabling it to be manipulated by a tester. This approach can then be reused across the system.

The end result is that we have removed our dependence on Temporal Coupling.

HONEYPOT COUPLING

The Venus Flytrap is a plant renowned for its ability to entice prey to it, before trapping and slowly digesting them. Whilst that’s a rather melodramatic example of my next form of coupling, Honeypot Coupling, maybe it serves its purpose.

HONEYPOTS IN INFORMATIONS SECURITY

A Honeypot is something seemingly of value that is presented in such a light as to entice the unwary (much like the Venus Flytrap). It’s used in the security industry to entice hackers, either to entrap them, or to learn from them (to harden internal services).

To me, vendor lock-in is a form of Honeypot Coupling. The concept is simple, yet very powerful, and has existed far longer than I’ve been in the industry. Draw your customers in by incentivising them with a service/solution/price they can’t get elsewhere, and then promote their tight-coupling to it. Once tightly-coupled, Loss Aversion (in this case, the pain of migrating to another) kicks in, so the customer must remain (whilst it’s possible that this approach increases technical Agility, it may hamper business Agility).

Some vendors are veterans of this approach. In the database arena, we might see an explosion of new features, or bespoke solutions, that (theoretically) simplify your life. From a consultancy perspective, you may find some consultants display a certain Bias towards their own products. There be dragons.

The danger comes not from a single tight-coupling, but from the repeated use of this pattern, in many places, over an extended period. For instance, embedding proprietary database mechanisms within a large (monolithic) codebase, limits Evolvability, due to an inability to migrate vendor (I’ve seen it). Injudicious, tactical, use may tie in the customer, and reduce their options (Optionality) to a binary outcome - continue using the technology/approach (cognisant that it further exacerbates things), or rewrite the entire stack (also unpalatable, particularly to the investors).

CAUTION, HIGH VOLTAGE

Whilst I may be being unfair to many vendors (customers can cause a self-inflicted Honeypot Coupling), I’ve seen sufficient examples to be cautious of some of their advice.

And now for something highly contentious; and I suspect many of you will disagree with me... but are we not witnessing a revival of Honeypot Coupling with the Cloud? As each provider vies for position, their offerings diverge, to the point that they truly become bespoke services that sacrifice Portability for ease-of-use etc. Even the same high-level service offerings (e.g. serverless, managed NoSQL databases) are sufficiently different to present problems.

Of course the services being offered have great merit (that’s why they’re so popular and hard to resist); for example, in their seamless integration with other vendor-supported cloud services, their relative cheapness, and a seemingly endless ability to scale etc.

But I return to my point on balance; with good, there’s also bad (and Tools Do Not Replace Thinking). My concern isn’t about whether the cloud is good or bad, but about the level of coupling some customers are (unintentionally) committing to. Going “all-in” is fine if you know what your getting. The simplicity with which new services can be provisioned/used/integrated may breed complacency - might we find the practice so effortless and convenient that we it spreads without really considering its ramifications?

They say that if you write for long enough you eventually contradict yourself... Whilst it seems like some Cloud providers desire a certain degree of coupling, the cause to a large extent lies elsewhere. Our industry is driven primarily by the forces of TTM, speed of change, and innovation. And the forces of Standardisation and Innovation often compete. Whilst Standardisation may protect you from Portability (and potentially Evolvability) concerns, achieving it is often protracted, hard-fought, requires significant cooperation, and is never guaranteed, which begets Innovation and fast turnaround.

As the saying goes, you can’t have your cake, and eat it.

HARDWARE & OPERATING SYSTEMS (OS) COUPLING

Whilst this book aims to look into the future, it’s important not to forget the past.

One of the first products I worked with when I entered the technology industry was a billing solution, built in C++, and supported across several operating systems (Windows, and several UNIX variants).

Each platform had its own linking requirements (C Headers), and a set of make files (a well-established build and dependency management), used to make a specific platform release (a set of executable code specifically for that Operating System). The lack of Operating System (OS) agnosticism added complexity (i.e. time and money) to the product’s development but was, in those days, a necessary, but Regretful Spend.

WRITE ONCE, RUN ANYWHERE

I still remember Java as a fledgling platform. One of its oft-touted key strengths was its (supposed) OS agnosticism (”write once, run anywhere”). Whilst, nowadays this is taken for granted, it was an important advancement.

Fact. Many established businesses still have (and support) legacy systems (Supporting the Legacy). Often, these legacy systems are tightly coupled, not only to a specific Operating System (and version), but sometimes to specific (antiquated) hardware - both may be discontinued and difficult to source/provision. This type of coupling may impede Evolvability, through technology stasis.

VERSION COUPLING

Becoming coupled to a specific version of a dependency is also a common problem. Examples include a specific technology (e.g. Java 6), an API version (e.g. v1.3 of the Customers API), and an Operating System (although this is less of a problem nowadays), application servers (e.g. JBoss). For instance, it’s common for Antiquated Monoliths to become tied to a specific version of an implementation technology, and due to Big-Bang Change Friction, it becomes impractical to undertake any form of technology migration, thus causing evolutionary challenges.

TYPE COUPLING

Poor assumptions about a type can also hamper change. For instance, consider the following example.

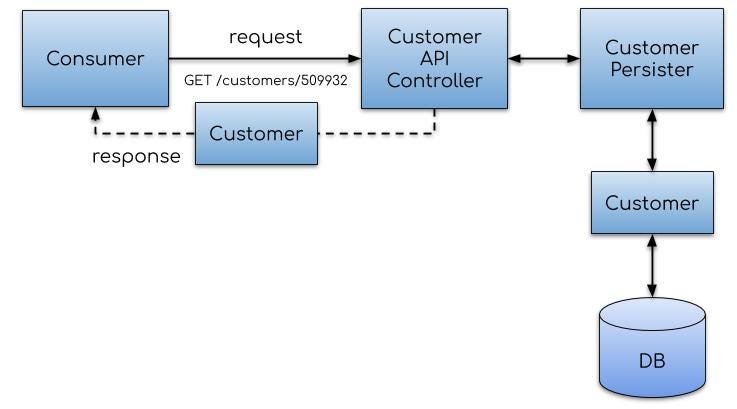

I was once involved in building a framework where someone had made the assumption that the return type for any resource identifier would always be a Long type (it fitted well with the primary key in the relational database world). For instance, a resource lookup might be:

https://services.acme.com/customers/23655This works (assuming you ignore the potential anti-pattern of leaking business intelligence data to the outside world; e.g. you now know how many customers the business has) if the underlying entity id is also a long. But this isn’t necessarily true in all databases; many NoSQL solutions, for instance, tend to use a UUID. It also hampers your ability to encrypt/decrypt this id as one form of securing data (around guessable values).

If we’d proceeded with the Long type, it would have hampered our ability to migrate to a NoSQL data storage, or use this type in the URL.

https://services.acme.com/customers/4995-3426-9085-5327However, by considering future evolutionary needs, we opted for a string type (which I still consider to be the right choice), allowing us to evolve when the time comes.

INTERNAL STRUCTURE COUPLING

Exposing internal structures creates a tight-coupling between the consumer and the internal structure, and may hamper Evolvability.

TABLE STRUCTURE COUPLING

As an increasing number of consumers become aware of an internal (table) structure, we find it increasingly difficult to make structural changes without affecting them. This may cause the following issues:

- Evolvability & Change Friction. You might want to modernise the database technology but you have tightly-coupled dependents.

- Performance & Scalability degradation. Direct coupling has limited our potential to introduce an intermediate caching facility. We might also serve to create overly-chatty consumers.

- Poor Reuse; workflow logic is duplicated in the consumers rather than encapsulated in some indirectional layer (e.g. facade), leading to rework, confusion around change, and additional coordination demands.

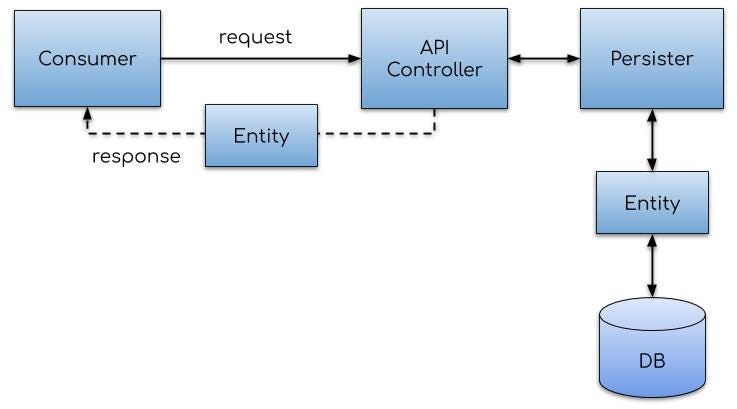

APIs can also become tightly coupled to internal structures (Consumer-Driven APIs).

INAPPROPRIATE STRUCTURE REUSE

This relates to using the same thing for multiple distinct responsibilities, and is a form of coupling through reuse. The Separation of Concerns section describes this in more depth.

COUPLING THROUGH REUSE

A pure Microservices architecture represents truly independent software units that aren’t affected by the lifecycle changes of other externalised units.

Quite a sweeping statement isn’t it? What it means is that - excepting any downstream dependencies on other services - a single change should not cause a large blast radius across a suite of microservices.

Unfortunately, reality and pragmatism also crowd these waters. Common/shared libraries represents the most obvious choice. For instance, most software units (such as a microservice) need other units to undertake a task, and often make heavy use of shared libraries.

The problem is that - like everything else - these libraries can change, thus (eventually) affecting all microservices reliant upon it (and breaking the microservice model of fierce independence); and your software may have a rogue dependency. Sometimes this rogue element can be alleviated through piecemeal deployments (one service at a time), but sometimes (e.g. when a critical security vulnerability is identified) it requires a Big-Bang/Atomic Release; i.e. we return to large, monolithic-style releases.

TO REUSE, OR NOT TO REUSE. THAT IS THE QUESTION

I’ve used the common library reuse approach to - for instance - hold a set of abstract software units (e.g. DTOs, uniform exception handling) that all microservices could use/extend. It enabled us to build Uniformity and consistency into a suite of microservices that (a) simplified integration, and (b) reduced TTM, due to the Low Representational Gap (LRG) stemming from a consistent approach.

However, I’ve also seen the impact of a breaking change (where every service needs updated very quickly). It isn’t some isolated development activity, but one that also involves testing, deployment and release, and all of the coordination typical of a Big-Bang Release cycle.

There are alternatives. For instance, you can duplicate the logic within each microservice, which tends to be the preferred stance for many; but this too seems impractical, breaks cohesion, and goes against (what I’ve always been taught as the best practice of) the Don’t Repeat Yourself (DRY) principle. You might also consider a shared service, which is consumed by multiple microservices, but that also raises concerns, particularly around latency and reliability. And it doesn’t always make sense depending upon what problem is being solved.

I’m not entirely sold on any approach here. They all have failings, and I suggest you apply common sense when choosing the reuse model.

NAMING/DATA/CONVENTION COUPLING

Consumers may also become tightly-coupled to a volatile variable name, key, or convention. For instance, consumers may make assumptions about:

- A global variable; e.g. it exists and contains certain values.

- A key stored in memory; e.g. a HTTP session variable.

- A convention; such as all input values being in lowercase, or all uploaded image files being of .png type.

The more consumers using that particular variable/convention, the more Change Friction occurs.

EXAMPLE - CONVENTION COUPLING

I once worked on a UI proof-of-concept that used JavaScript to display viewable assets to the end user.

The user could navigate through a catalogue, be presented with the name, description, image, and price of a selected asset, and then purchase it.

Deadlines were tight, so to allow us to focus on more business critical issues, the team embedded (what was considered) a harmless rule into the code, creating a coupling between the asset title and the image file name to look up (Assumptions). For example, an asset title of “Resilience is Futile” should always have a corresponding image named “resilienceisfutile.jpg” (note the other assumption mandating the file type to always be a jpeg?). The image would not be presented if either rule failed.

At one stage - immediately prior to a big demo in the next few days - all of the images were cropped, a new banner added, and then the files were renamed and converted to the .png image type. The work was undertaken by someone outwith the team, who was never informed of this assumptive “rule”, subsequently committed the files. Unfortunately, we were left with only two equally unsavoury options:

We chose the latter, and it cost us a few very long days of diverted effort. It just goes to show how a seemingly insignificant form of coupling can have a significant downstream impact.

- Rename and change the type of every single image file to marry up with the expected title, even though we now understood how damaging our assumption had been.

- Remove the (recently discovered) poor assumptive rule, and introduce some flexibility, enabling us to link an asset to an image in a more manageable way.

CONSUMER BUSINESS PROCESS COUPLING

In the section entitled Internal Structure Coupling I described how consumers can become coupled to the internal structures of a system, causing Change Friction.

However, it’s also worth describing that consumers may also become coupled to a specific business flow(s). For example, in an order-processing domain, consumers may build software that expects a specific flow and sequence to how it is processed; e.g. you must interact with X, before Y, but after Z. How sales tax is calculated within different countries offers us another example. Whilst UK citizens tend to get a more static form of goods tax applied, US citizens may have several tiers involved in a tax calculation, suggesting either that more information needs captured up-front, or that tax calculations must be performed Just-In-Time (immediately prior to the sale). This may well affect a customer on-boarding flow, and thus, we should consider how/what we couple to it.

The problem with this approach is in its potential brittleness. If you wish to change the sequence in which those actions are performed - possibly because you need to add some additional up-front validation - then you must coordinate change across every consumer. Not only might this be a significant coordination task, but not everyone will undertake the work.

FACADE

Facade is a good example of a Design Pattern that hides workflow complexity, and may aggregate and transform data into a form suitable for consumption, with a highly reusable mechanism that promotes the Don’t Repeat Yourself (DRY) principle.

When consumers (e.g. UI) become overly familiar with a business workflow, Evolution may suffer. This lack of flow Consolidation can lead to:

- Duplicated workflow across multiple clients, increasing the likelihood of bugs.

- Rework, when those bugs are found.

- Latency issues. We expect (remote) consumers to interrogate APIs to aggregate data, rather than executing that operation closer to the APIs/data source(s).

- Limited caching opportunities; e.g. we might use more caching techniques nearer the source, but we can’t.

- Data validation is promoted within the consumer, rather than in both consumer and the APIs; I’ve heard this argument numerous times but protecting against XSS and injection threats solely inside the consumer is not a secure practice.

- Reputational harm (if external parties have bound themselves to your flows and they must change).

LEGACY COUPLING

Legacy Coupling is a common problem for established businesses embarking on a major system replacement. A common problem is how you successfully manage existing customers on existing (legacy) systems, whilst also supporting the construction and use of its modern replacement in parallel? Obtaining functional parity in the new solution (i.e. replicating the same level of functionality in the new system as is currently supported in the legacy system) may take years. Thus, the temptation to couple from new to legacy, like so:

This coupling has some benefits (e.g. functional reuse), and many potential pitfalls, mainly by being constrained by the legacy system; e.g.:

- Scalability. Many modern systems require “web scale”; e.g. millions of requests per hour. Legacy systems don’t tend to offer this capability (it wasn’t needed in the pre-web days), so how can you satisfy that need whilst coupled to this legacy system?

- Availability, Resilience, and Reliability. Most legacy systems weren’t built on modern Cloud Native principles (the cattle, not pets mantra), so may not suit modern expectations.

- Security is only as good as the weakest link, and a legacy system’s security may be impeded by a lack of patching/support.

- Releasability. A legacy system often (but not always) equates to an Antiquated Monolith architecture, and that suggests Lengthy Release Cycles. Tying a modern system and practices to one with Lengthy Release Cycles suggests slower TTM and poorer ROI.

See the Working with the Legacy section for more on this subject.

SINGLE POINT OF HUMAN FAILURE

“If not controlled, work will flow to the competent man until he submerges.” Charles Boyle, Former U.S. Congressional Liaison for NASA.

The notion of coupling important skills and knowledge onto one individual (or even a single team, in some circumstances) is a dangerous practice. The Phoenix Project summed it up well with Brent - an individual with so thorough an understanding of the domain that it forced almost every significant change (alongside problems and decisions) through him; i.e. these individuals become the “constraint” within the system.

TEAM/PARTNER COUPLING

I’ve also witnessed team coupling; where one team(s) makes assumptions about the availability of a service or feature being built by another team (such as basing your own delivery dates upon another team’s delivery), only to later find that the dependent team’s focus has shifted, and the feature is either no longer available, or is significantly delayed.

The same situation can occur with partnerships - relying on a partner to deliver features on schedule may harm your own reputation in the eyes of others (Perception) if they fail to deliver.

Let’s say we have a small start-up organisation, consisting of Alice, Mo, Stacy, Etienne, and Patrick. I’ve used their avatars to demonstrate how the interaction might work. See the figure below.

Stacy is key here. She is by far the most knowledgeable and experienced, understanding the ins-and-outs of the system. She also makes all major decisions. Naturally, any significant work should be undertaken by her then? Not necessarily.

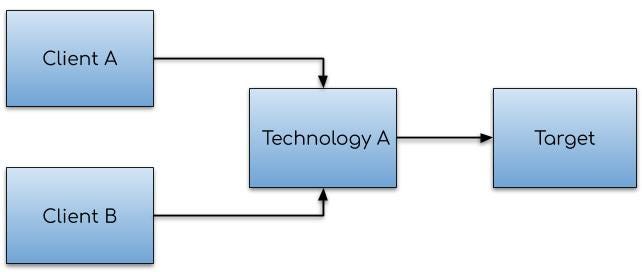

HUB-AND-SPOKE ARCHITECTURE

The Hub-and-Spoke integration architecture follows the same model that is described in this section (a centralised single entity) that all others interact with. It also suffers from the same problems; the hub becomes highly coupled and overloaded.

When one individual (Stacy in this case) is the sole communications and decisions hub that the entire business must pass through, to undertake any significant piece of work (e.g. domain expertise, technology expertise, decision-making on spend), then we subordinate, undermine, and enslave the entire system (the business system, not the technical system) to the capacity (and availability) of that individual, creating a tight-coupling from the business to that individual.

RELIANCE