PRINCIPLES & PRACTICES

Work-In-Progress...

SECTION CONTENTS

- What is Software?

- The Atomic Deployment Strategy

- Lengthy Release Cycles

- Tools & Technologies Do Not Replace Thinking

- Loss Aversion

- Lowest Common Denominator

- Table Stakes

- Internal & External Flow

- Drum, Buffer, Rope

- Value Identification

- Value Contextualisation

- The Principle of Status Quo

- Circle of Influence

- Unit-Level Productivity v Business-Level Scale

- Efficiency & Throughput

- Change v Stability

- Circumvention

- Feasibility v Practicality

- Learn Fast

- Gold-Plating

- Goldilocks Granularity

- "Shift-Left"

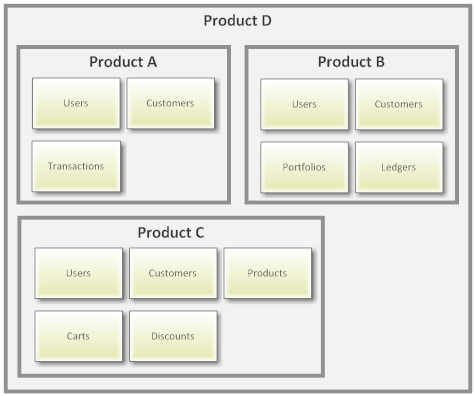

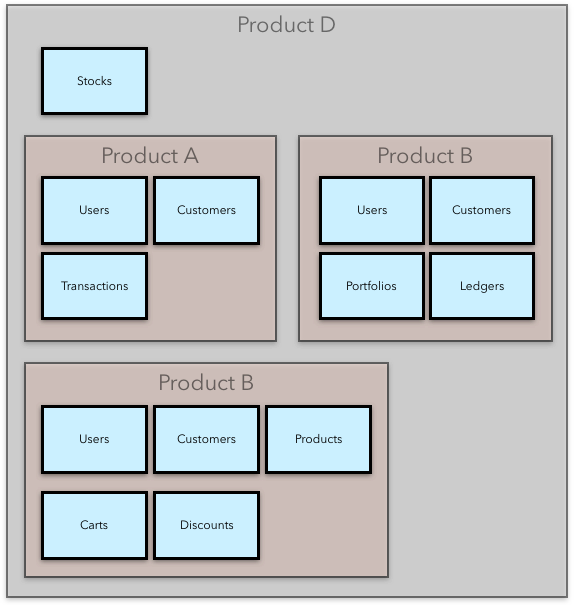

- Growth through Acquisition

- Growth without Consolidation

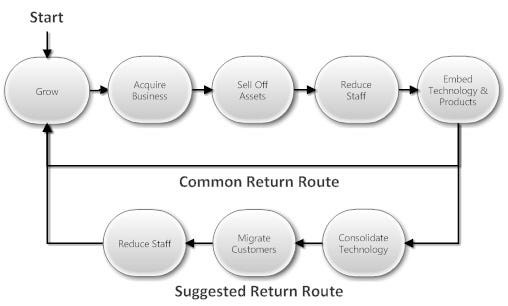

- The Acquisition / Consolidation Cycle

- Neglecting to Consolidate

- Frankenstein's Monster Systems

- Analysis Paralysis

- CapEx & OpEx

- Innovation v Standardisation

- Non-Repudiation

- Spikes & Double Investment

- New (!=) Is Not Always Better

- “Some” Tech Debt is Healthy

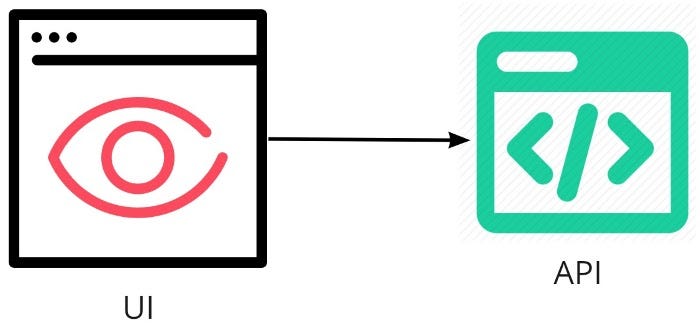

- Consumer-Driven APIs

- MVP Is More Than Functional

- There's No Such Thing As No Consequences

- Why Test?

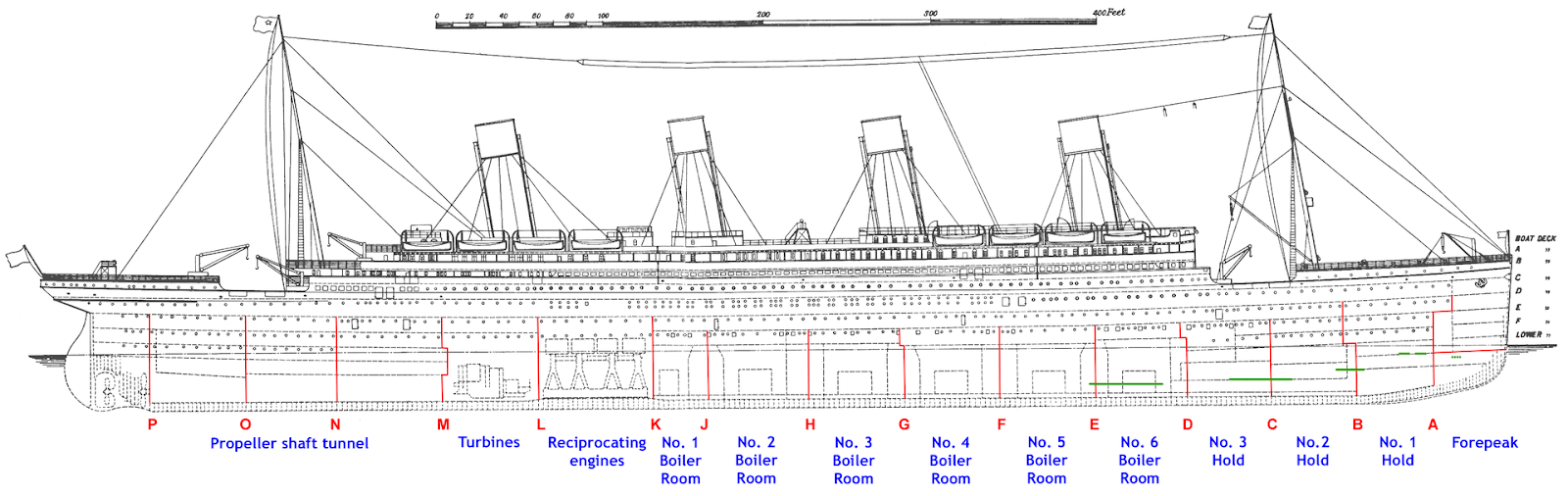

- Bulkheads

- The Paradox of Choice

- Twelve Factor Applications

- Automation Also Creates Chalenges

- Declarative v Imperative

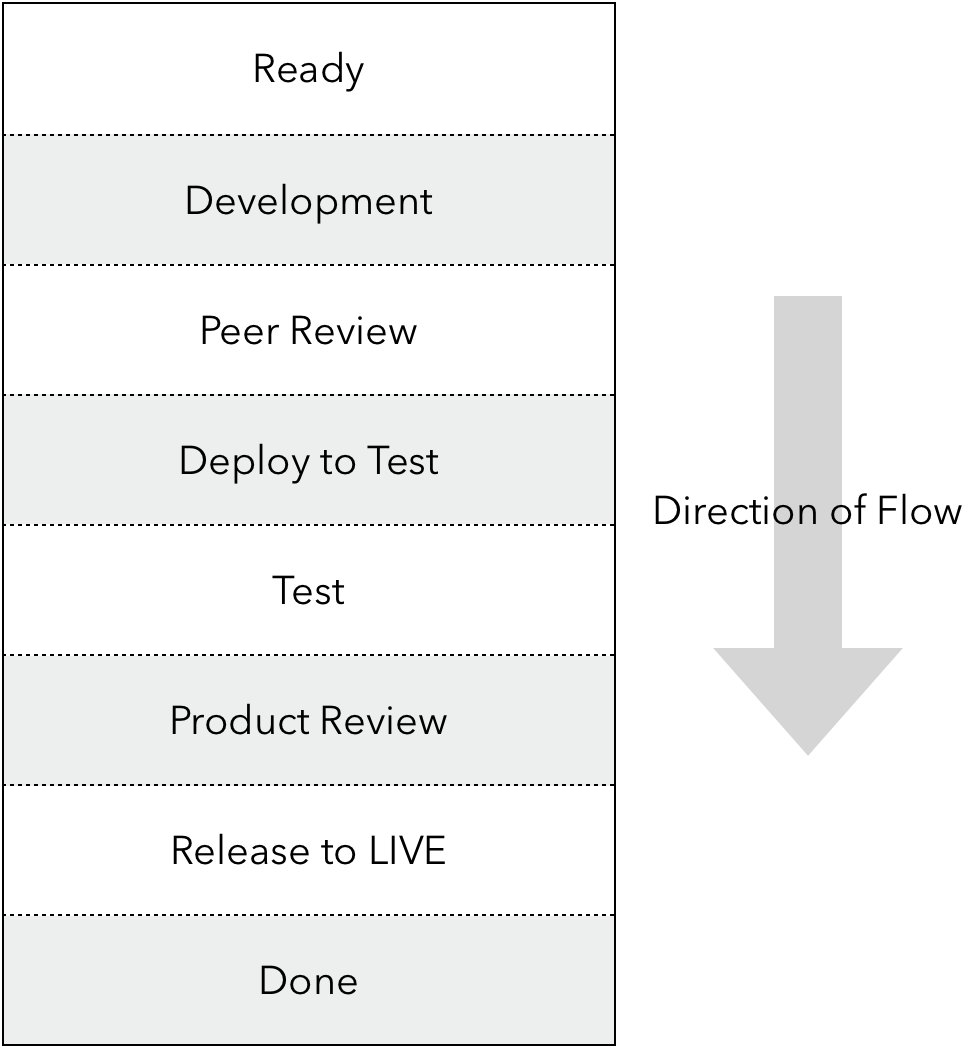

- Work Item Delivery Flow

- Shared Context

- Direct-to-Database

- The Sensible Reuse Principle (Or Glass Slipper Reuse)

- "Debt" Accrues By Not Sharing Context

- Divergence v Conformance

- Work-In-Progress (WIP)

- "Customer-Centric"

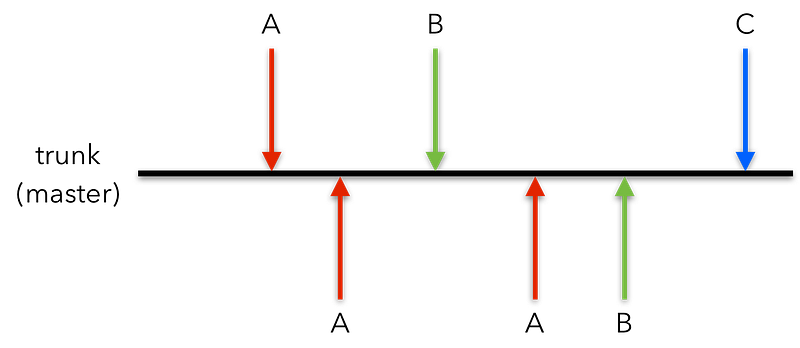

- Branching Strategies

- Lift-and-Shift

- Test-Driven Development (TDD)

- Declarative v Imperative Leadership

- Theory of Constraints - Constraint Exploitation

- Agile & Waterfall Methodologies

- Agile & Less Iterative Activities

- Duplication

- Reliability Through Data Duplication

- Waste Management & Transferral

- Behaviour-Driven Development (BDD)

- BDD Benefits - Common Language

- Single Point of Failure

- Endless Expediting

- Project Bias

- Effective Over Efficient

- Multi-Factor Authentication (MFA) & Two-Factor Authentication (2FA)

- DORA Metrics

- KPIS

- Immediate v Eventual Consistency

- (Business) Continuity

- Upgrade Procrastination

- Blast Radius v Contagion

- Contagion

- Principle of Least Privilege

- Infrastructure As Code (IaC)

- Blast Radius

- Runtime & Change Resilience

- The Principle of Locality

- Reaction

- Evolution's Pecking Order

- Late Integration

- Expediting

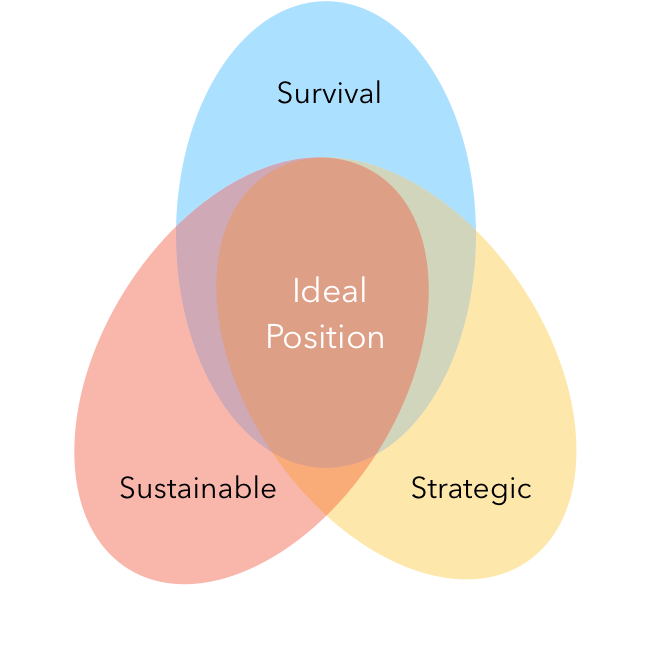

- The Three S's - Survival, Sustainability & Strategy

- Inappropriate Foundations

- Silos

- Minimal Divergence

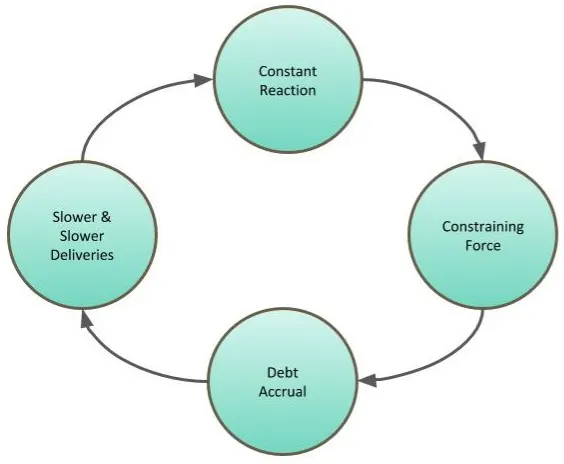

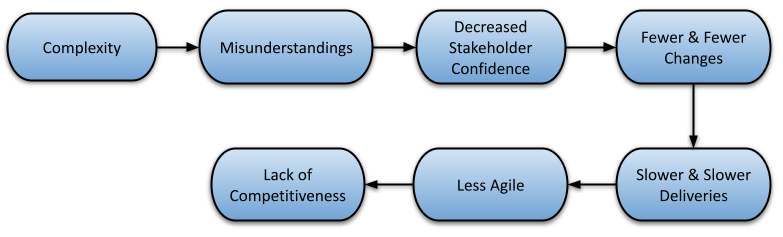

- The Cycle Of Discontent

- Context Switching

- Creeping Normalcy

- Economies Of Scale

- Unsustainable Growth

- Consolidation

- Roadmaps

- Strategy

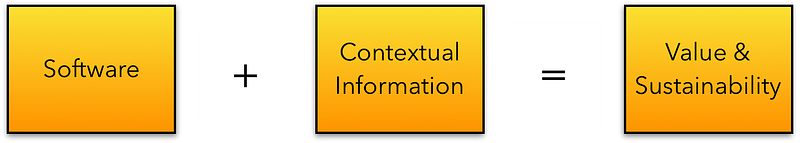

WHAT IS SOFTWARE?

Let’s begin with a more fundamental, philosophical question. What is software?

My interpretation? Software is but a specific realisation of an idea.

In his Theory of Forms, Plato concluded: ‘... "Ideas" or "Forms", are the non-physical essences of all things, of which objects and matter in the physical world are merely imitations.’ [1]

So, an idea may have many different forms (or interpretations), of which a specific software implementation is just one. There can be many other interpretations, such as different software interpretations, a more physical manifestation (e.g. hardware), or a business interpretation (one that doesn’t necessarily involve technology). Consider digital transformations for example. They typically replace one interpretation (existing business workflows) with another (a digital, software, interpretation), but it’s often the same idea realised differently.

The fixation on interpretation over idea is problematic as it creates an interpretation attachment, causing us to:

- Forget that an interpretation is just one (changeable, malleable) view of an overarching idea.

- Lose sight of the idea, in favour of the interpretation. We're so busy looking at how our interpretation is affected by change (which comes in many forms) that we preclude the possibility that something newer or more radical has been discovered, (more or less) neutralising your interpretation. Of course this view is understandable - no-one wants to tell a business who may have invested millions that its interpretation (solution) is about to become redundant.

- Build (unsustainable) solutions from an “bottom-up” approach. I’ve seen this approach push people to favour a reuse strategy (for the sake of it) rather than due to logical decision making or common sense (Sensible Reuse Principle).

- Be polluted by concepts from the existing interpretation. e.g. the terminology, entity naming, structures, the modelling of relationships, flow, and user interactions may not be appropriate for the new interpretation.

- (Potentially) Lose competitiveness. We continue to build upon a (now) flawed interpretation.

- Think in the shorter-term (realisation), rather than longer-term (ideas).

So what’s my point? We should show caution in how we view value. Value isn’t solely about a specific software interpretation, it’s about the idea and its practical interpretation. Of course, I’m not proposing that we neglect the interpretation, only that we adjust our views to better incorporate the idea, promoting it to first-class citizen status.

THE RISE OF THE FINTECH

The last decade has given rise to many new Fintech (financial technology) businesses. The news is full of their success stories, as they start to take market share from (and outmanoeuvre) the established players. Why is this?

They’re able to interpret the same idea differently, typically in a way which is more appealing to customers than what’s already on the market. Because there’s nothing already in place at point of inception (both in terms of a solution, or in terms of the pollution of a specific realisation), they’re able to work top-down (from idea to interpretation to implementation), building out their realisation based upon modern thinking (social, technological, delivery practices, devices, data-driven decision-making, machine learning, consumer-driven, integrability, accessibility), whilst many established businesses continue to use a bottom-up (realisation-focused) approach, munging together new concepts into their existing estate.

All of this leads to a serious problem for established businesses if innovation and progress are constrained by its existing realisation, and Loss Aversion is at hand. Whilst they could certainly attempt to retrofit modern practices into aging solutions, it doesn’t resolve the key issue - the realisation is (now) substandard, and requires a sea change to re-realise the idea (from the top down) using modern thinking.

INFLUENCES

Market pressures, innovations and inventions, modern practices and thinking, trends, better collaboration, knowledge, and experience all affect how an idea is interpreted, and can cause us to reinterpret it in a new, distinct, or disruptive way.

Customers pay for the idea and the interpretation, but in the end it's the idea they want. They’re tied to the idea, not necessarily to the interpretation. If they find an alternative (better) interpretation elsewhere, then they’ll take their custom there. Alternatively, you may alter (evolve) your own interpretation, or change it entirely and so long as it still aligns with the customer’s interpretation, still retain their custom.

FURTHER CONSIDERATIONS

- [1] - Plato’s Theory of Forms - https://en.m.wikipedia.org/wiki/Theory_of_forms

- Innovation Triad

- Loss Aversion

- Sensible Reuse Principle

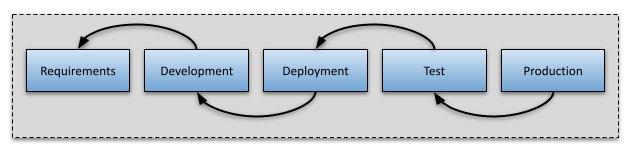

THE ATOMIC DEPLOYMENT STRATEGY

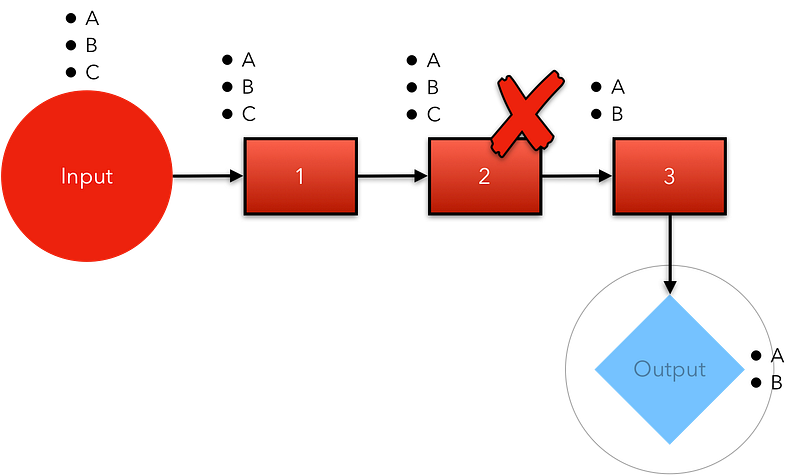

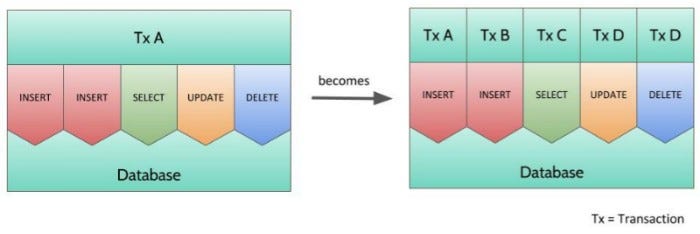

I use the term “atomic deployment strategy” to describe a common deployment approach, commonly aligned with the Monolith - where all components must be deployed, regardless of their need. It is an all-or-nothing deployment strategy.

The standard Point-to-Point (P2P) architecture typical in a Monolith suggests a tight-coupling (Coupling) between all software components/systems in its ecosystem. This has several knock-on effects, including (a) making the extrication of specific services/domains from their dependencies difficult, and as a side-effect (b) forcing deployments to include all components, even when only one component is required (or has changed), and regardless of the necessity.

This approach suffers from the following challenges:

- Release and Operational pain – Software Engineers must build/deploy components that are unrelated to the problem being solved. The Ops team expend effort deploying and managing software that is never used (or changed); In others ways, less so due to less moving parts.

- Releases are large, complicated affairs and thus incentivise a Lengthy Release Cycle mindset, slowing change and inviting Entropy.

- Highly inefficient - an unsurety over what’s changed leads us to retesting everything (even though we know large areas haven’t changed).

- Siloing. Atomic Deployment Strategy leads to Lengthy Release Cycle, which leads to longer flows, which naturally leads to the creation of little specialised silos.

FURTHER CONSIDERATIONS

LENGTHY RELEASE CYCLES

Lengthy (e.g. quarterly or monthly) release cycles can be tremendously damaging to a business, its employees, and its customers.

Lengthy Release Cycles often promote excruciating, siloed steps, involving many days of waiting, regular rework, unnecessarily long and complex deployments, and the forced acceptance (testing) of a large number of changes in a relatively short time frame. They also suggest that anything at the end of the release cycle is particularly at risk of expediting, for the sake of a promised release date.

CONWAY’S LAW AND RELEASES

Conway's Law states that: "organizations which design systems ... are constrained to produce designs which are copies of the communication structures of these organizations." [wikipedia]

So, if a system architecture mimics how the organisation communicates, then why wouldn’t its delivery flow also mimic it? i.e. in a siloed organisation, where inter-department communication is limited, and any decision requires lots of lead time, then we’ll also find similar issues with the release cycle (siloed, with lots of waits).

Lengthy Release Cycles can cause the following issues:

- The siloing of teams.

- Communication issues.

- Lack of group ownership.

- Ping-pong releases.

- Mistrust.

- Poor Productivity.

- Poor Repeatability.

- Cherry-Picking, and thus expediting.

- Poor quality.

Let’s view them.

SILOING OF TEAMS

The longer the flow, the easier it is to fit unnecessary steps into a flow, creating little pockets of resistance to fast change.

Silo’ing can cause the following issues:

- A lack of transparency, collaboration, ownership, and accountability.

- Quality is not introduced early enough.

- Rework is a common practice.

Most of the succeeding points are caused by Silo’ing.

COMMUNICATION ISSUES

Silos are the natural enemy of good communication. Work that is undertaken in a silo has (to my mind) a higher propensity to be flawed, simply because of numbers. (As a generalisation) the more brains on a problem, sooner, the higher the quality of that solution.

Consider the natural tendency for siloed teams to work on software in isolation, typically neglecting to involve any Operations or Security stakeholder input (that would slow things down, wouldn’t it?). I’ve often seen cases where the Operations team either won’t deploy the feature, or are duressed into deploying it (against their will and better sense of judgement), causing the accrual of Technical Debt.

Often, it’s because the developer has assumed something (Assumptions) that competes with an operational expectation (e.g. until recently, that’s been stability, although now it might be nearer Resilience), and hasn't sufficiently communicated with them due to the organisation’s siloed nature.

This lack of good communication, alongside Lengthy Release Cycles causes misunderstandings, such as knowing which features are deployed in which environments (see Twelve Factor Apps - Minimise Divergence). E.g. the developer thinks that a bug fix is in Release X on environment Z, whilst in fact, it never got that far.

FEATURE HUNTS

I’ve listened to lengthy conversations around “Feature Hunts”, until someone finally provides evidence (typically by testing it) of what feature is where. It’s mainly due to Lengthy Release Cycles. Feature Hunts are a big time waster for everyone involved.

Poor communications, caused by the siloed mindset, are one reason for the increasing popularity of Cross-Functional Teams.

LACK OF OWNERSHIP

“I can’t do that, only [Jeff/Lisa/insert name here...] knows how to do that. We’ll have to wait for them.” Ring any bells?

That response is pretty common in siloed organisations suffering from Lengthy Release Cycles. It stems from a lack of group ownership and cross-pollination of skills and knowledge that you’d typically get in a Cross-Functional Team.

Why? I think it’s a mix of skills availability and context. For instance, just because someone in Operations has the skills to solve a particular problem, doesn’t mean they have the context to make the right change.

As a technologist, I admit to finding context much harder to understand than technology. Whilst I can pick up most technologies in a relatively short time, applying those skills and techniques in the right domain context is far more challenging (and one reason why I’d argue that consultants should rarely have the final say in a solution, unless there is parity between parties). If you don’t have context, you can’t own it, and if you can’t own it, you can’t help. Alas, we must await Jeff.

Pair/Mob Programming, or Cross-Functional Teams are some counters to this problem.

PING-PONG RELEASES

The scale of change embedded within each release often results in one (or more) major/critical bugs being identified, often late in the cycle (e.g. in system, acceptance/exploratory testing). These bugs must be fixed, causing lengthy “ping-pong” releases between development, build and release, deployment, and several forms of (formal) testing, causing lots of unnecessary waiting and rework.

TRUST

Technology books often focus on mechanisms over people (but sometimes the solution needs something more people-focused). Yet one of the cultural concerns over Lengthy Release Cycles is trust.

Lengthy Release Cycles promote silos, and individuals who are siloed may feel isolated, and neglect opportunities to interact with people within other silos. This is more than a shame, it’s a travesty. People who don’t know one another may have no means to build relationships, and thus trust. And without trust, we face an uphill battle to deliver meaningful change in a short time.

TRUST & CAMARADERIE

The camaraderie that stems from a well-knit, integral team of like-minded individuals shouldn’t be overlooked. It’s an extremely powerful tool to build highly performant, collaborative, antifragile teams.

Much of a team’s strength comes through the accrual of trust. E.g. Bill trusts Dave and Lisa as they’ve worked well together for years. They understand one another’s strengths (and weaknesses), and often complement them to build a stronger foundation. Cross-Functional Teams also build a shared learning platform that reduces single-points-of-failure, thus building trust. Consider the mutual trust and respect that the astronauts built up on the lunar landings, including how each one must learn how to perform the other’s job (a Redundancy Check).

Yet siloed teams don’t get the same opportunities. Whilst people may build trust within the silo, they can’t (necessarily) trust what comes before or after their own little island, creating a Us versus Them mentality.

Colleagues who don’t/can’t truly trust one another may live in a world of stop-start communications (e.g. “I don’t trust them, so I’m going to ensure I know exactly what they’ve done before I let it pass”; a form of micromanagement), and where duplication of work is predominant (e.g. “so what if they’ve tested it, I don’t trust they did it right, so I’ll do it again myself”). Mistrust hampers our willingness/ability to Shift-Left, and thus slows Productivity.

PRODUCTIVITY

Long Release Cycles exist (in a large part) due to difficult environment provisioning. Even in the cases where provisioning is quick, we often still find they’re not a carbon copy of the production environment. This has efficiency ramifications.

For instance, without an efficient provisioning mechanism, we invite systemic problems to creep into the work of development and testing staff, simply because they lack any means of fast feedback; thus, we limit people’s confidence in their own work because they can’t guarantee it will function as intended (i.e. no Safety Net).

Creating the right mechanisms to successfully and efficiently provision environments quickly takes time and money (which is why some organisations never get around to doing it; they’re always “too busy building functionality”). So, some organisations attempt to remedy this by providing a shared environment. But this approach is also flawed. For example, who owns the environment, keeps it functioning, and ensures its accuracy? If no one owns it, no one will support it. The result is stale software/data that doesn’t reflect the production setup. Again, this is hampered by Lengthy Release Cycles and poor provisioning.

RELEASE MANAGEMENT TROUBLES

I’ve seen some interesting approaches to release management through the years (including verifying readiness).

At one organisation, I witnessed a great deal of ping-pong releases, where the entire software stack was deployed, configured and tested, only to find that some feature or configuration had been missed, thus forcing the cleansing and reprovision of the environment, rebuilding the code, redeployment and reconfiguration of the application (which took a couple of hours each time), recording all changes (it was hoped) in an “instruction” manual, and then retesting it all again. This vicious cycle might occur multiple times.

Once the testers gave the thumbs up, the environment was dropped, all the steps in the newly formed “instruction” manual re-executed, then all the testing repeated to prove the instructions were indeed correct! What a massive waste of time and money.

LENGTHY RELEASE CYCLES & CHERRY PICKING

Lengthy Release Cycles may also promote poor practices such as Cherry Picking (a form of Expediting). Cherry Picking is (typically) where someone with little appreciation for the technical difficulties, identifies features/bug fixes they think the business needs and discards the remainder for later release (we hope). Cherry Picking raises the following concerns:

- The picker(s) may regularly favour functionality over non-functional needs (i.e. Functional Myopicism).

- It promotes messy branching and merging activities, where the engineer must identify what’s been picked, discard the remainder, then merge what’s left back for release. This is painful, error-prone, and pretty thankless.

REPEATABILITY

Lengthy Release Cycles make most forms of change difficult (Change Friction). Thus, when change is required (which happens often in dynamic organisations), it incentivises the circumvention of established processes (i.e. Expediting) in an effort to right a wrong; e.g. the effort to manually hack a change into a production environment seems quicker than following the established process (Short-Term Glory v Long-Term Success).

Whilst this approach seems quicker, it actually increases risk in the following areas:

- The change exacerbates the problem, resulting in a bigger problem.

- The change remains unrecorded (or incorrectly recorded), and requires a manual intervention inconsistent with normal practice (i.e. a notorious practice for introducing faults). Tracking the original change is difficult, so we may only be able to infer what changed.

IMPACT ON QUALITY

Lengthy Release Cycles can cause quality issues, simply because of timing. Lengthy Release Cycles tend to increase wait time, hamper collaboration, and don’t lend themselves well to fast feedback (or fail fast). Consequently, there is less time to rectify issues; i.e. less time to increase quality, without incurring cost and delivery time penalties. The question then becomes more contentious; do we release now, because we’ve promised the market something but knowing there are quality issues (which might affect customers), or hold off for another month/quarter to resolve quality issues but potentially suffer reputational damage, and let down our customers in other ways?

Performance (e.g. load) testing is a good example where Lengthy Release Cycles can impact quality. Performance tests (e.g. load, soak) are undertaken towards the end of a release cycle, once everything is built, combined, coordinated, and deployed to a production-like environment. This takes time (and is a lot more involved than you might imagine), and may (see previous points) be difficult to provision (i.e. time and money).

Tasks (such as performance tests) which are not undertaken till towards the cycle end (neglected entirely, or only performed half-heartedly), makes reacting to any identified problems difficult. In the case of performance testing, you may be forced to release software with known scale/performance concerns, leading to poor quality, and incurring Technical Debt. And Lengthy Release Cycles likely means you’ll often find yourself down this same path, where performance testing is never (or rarely) done due to time pressures. Unfortunately, we’re only pushing the problem further down the line, where it will be worse.

Quality is again at risk. And bear in mind that quality can be subjective (Quality is Subjective).

FURTHER CONSIDERATIONS

- Change Friction.

- Expediting.

- Safety Net.

- Shift-Left.

- Cross-Functional Teams.

- Twelve Factor Apps.

- Technical Debt.

- Quality is Subjective.

TOOLS & TECHNOLOGIES DO NOT REPLACE THINKING

We live (and work) in a highly complex, interconnected world. Complex interrelations abound, and every decision we make has both positive results, and negative consequences.

Yet, humans love simplicity, having a natural tendency to focus on positive outcomes, whilst neglecting negative consequences. This oversimplification promotes the chimeric notion that every problem has a silver-bullet solution.

My point? Tools, frameworks, design patterns, technologies, and even books (like this one), are all tools to support thinking; they are not replacements for it.

REAL-WORLD EXAMPLE - THE IMPORTANCE OF THINKING

To support my point around thinking, consider the doctor/patient relationship, and specifically the procedures involved in the diagnosis and treatment of a patient.

Below is a quotation from a medical article. I’ve bolded the words of particular relevance.

“The diagnostic process is a complex transition process that begins with the patient's individual illness history and culminates in a result that can be categorized. A patient consulting the doctor about his symptoms starts an intricate process that may label him, classify his illness, indicate certain specific treatments in preference to others and put him in a prognostic category. The outcome of the process is regarded as important for effective treatment, by both patient and doctor.” [1]

Let’s pause a moment to consider it.

As a precondition to the medical assessment, the doctor familiarises themselves with the patient’s medical history. The assessment takes the form of a familiar protocol, where the doctor asks the patient about their ailment, listens to the patients description/assessment, then begins a dialogue, probing the patient (at appropriate times) to gather more detailed, or accurate, information about the symptoms (i.e. to identify a root cause). Understanding the history (i.e. contextual information) is important here (there’s often an association between past problems and the current ailment), as it may lead to a revelation that will enable the doctor to classify the illness.

Note that at this stage, whilst the doctor has (hopefully) classified the illness, there’s not a single mention of a treatment plan. Only once the doctor has undertaken sufficient due-diligence, and has a keen understanding of the cause (including potentially testing that hypothesis), will they then diagnose and offer a treatment plan. The doctor now begins the next stage; indicate certain specific treatments in preference to others.

At the treatment stage the doctor uses their knowledge, expertise, and judgement, to formulate the best treatment plan based upon their given constraints. Let’s pause a moment to reflect on this.

Now consider the following. Why do doctors consider your age, fitness level, cholesterol, known allergies, genetic familial issues, in addition to accounting for your medical history etc, prior to identifying a treatment plan? Because they must work within the constraints of your Complex System; i.e. how your system reacts to a treatment plan may differ to how my system will react.

However, doctors must also work within the realms of another Complex System; they may be constrained (or influenced) by external influences like time, treatment cost (like budgetary costs; e.g. expensive treatment plans may be disfavoured, even when they’re known to offer more promising results), or (in some cases) unorthodox new treatments (such as in the treatment of a potentially terminal disease; albeit this is a bit of a caveat emptor). We’re talking about the intersection of two complex systems: one for the patient, and one for external parties.

Let me reiterate my last point. No treatment plan is recommended until after the doctor has considered a number of key factors and constraints, and made a balanced decision based on all available evidence.

Returning to the technology domain, I’m sorry to say, I often see a very different approach to how technology treatment plans are undertaken. Whilst I’m not saying it’s true of everyone, my experience suggests that many technologists spend far too little time diagnosing the problem, and often have already formulated a treatment plan before understanding the context to apply it to, or whether it will really work (and this may be subjective too!). This is a form of Bias. To rephrase, I rarely see technologists consider all of the constraints, often only considering the first-level ramifications, but ignorant to second and third-level consequences, or formulating a treatment plan based on an accurate diagnosis of the problem.

The reason for this behaviour is harder to qualify. Is the cost of medical failure much greater (maybe there’s little opportunity to rectify a medical failing - the patient is already affected), or more obvious, than the failure of a technological decision, thus less time is spent diagnosing technology? Possibly, but I suppose that depends on the context. Maybe a medical faux pa is recognised sooner (e.g. the impact on the patient is more immediate)? Are there more rigorous validations in the practicing of medicine than in technology? I’m less sure about that one. Do we know more about the human body than technology? No, I’d label both as Complex Systems...

I suspect there’s a more obvious answer, known as Affect Heuristic.

“The affect heuristic is a heuristic, a mental shortcut that allows people to make decisions and solve problems quickly and efficiently, in which current emotion—fear, pleasure, surprise, etc.—influences decisions. In other words, it is a type of heuristic in which emotional response, or "affect" in psychological terms, plays a lead role.” [2]

“Finucane, Alhakami, Slovic and Johnson theorized in 2000 that a good feeling towards a situation (i.e., positive affect) would lead to a lower risk perception and a higher benefit perception, even when this is logically not warranted for that situation. This implies that a strong emotional response to a word or other stimulus might alter a person's judgment. He or she might make different decisions based on the same set of facts and might thus make an illogical decision. Overall, the affect heuristic is of influence in nearly every decision-making arena.” [3]

We’re often driven by our emotions towards a specific technology or methodology, which may play a lead role in our decision making. I've seen this time-and-again; from an unhealthily averse response to certain vendors, regardless of the quality of their offering, to an inappropriately positive outlook towards specific cloud technologies incompatible with the business’ aspirations, or timelines. It sometimes leads to technologists attempting to fit a business around a technology constraint, rather than the converse (Tail Wagging the Dog).

BALANCED DECISION-MAKING OVER TOOLS & TECHNOLOGY

A good deal of this book attempts to demonstrate the complex interrelations within our industry. My advice is simple. Rather than attempting to solve a problem through the introduction of a new tool or technology, I (at least initially) emphasise the importance of better understanding these complex interrelations, helping you to make more informed and balanced decisions on technology/tooling choices.

BRING OUT YOUR DEAD

The technology landscape is littered with the broken remnants of once lauded tools and technologies that nowadays cause raised eyebrows and sheepish grins.

Some enterprises now face major challenges, through the heavy investment in a (now irrelevant) technology. The problem is twofold:

Whilst hindsight is a wonderful thing, I believe that with the right mindset and knowledge, it’s possible to foresee many (but not all) obstacles on the road in enough time to avoid them, or pivot, simply by applying more balance to the decision making process.

- Transitioning to a new technology is technically challenging.

- The existing technologies and practices are so ingrained in the organisation’s culture (through Inculcation Bias) that there’s little opportunity to reverse the decision.

There’s no such thing as a free lunch. “Good” outcomes and “Bad” consequences are so entwined that it’s impossible to separate one from the other, and extricate only the good. Consider the following cases:

- SOA & ESBs. As a nascent practice, SOA was lauded as a technological revolution. It certainly had merits, such as the better alignment of business and technology, and service-focused solutions. It also helped to pave the way for XML to be treated as the defacto data transfer mechanism. Yet nowadays SOA (generally) has derogatory connotations. As do ESBs (Enterprise Service Buses), popularised by the rise of SOA, and now demonised as incohesive, single-points-of-failure.

- Employing Rapid Application Development (RAD) principles and tools may increase initial development velocity, but they may also increase Technical Debt (e.g. exposing entities as DTOs, or advocating a direct-to-database integration mindset, inculcating a bottom-up mentality to API and UI design, and thus resulting in solutions made for developers, not users).

- Many cloud/vendor technologies are fantastic, but some are highly proprietary, and can tightly couple you to a specific vendor (Honeypot Coupling).

- “All problems in computer science can be solved by another level of indirection.” [David Wheeler]. “Any performance problem can be solved by removing a layer of indirection”. [Unknown].

- Embedding business logic in SQL procedures (typically) hardens code from attack, and suggests improved performance (at least latency, not necessarily scale), but it likely also increases vendor coupling, reduces Portability, and introduces (affordable) talent sourcing difficulties, thereby hampering your business’ ability to scale.

- SQL joins across disparate domain tables likely increases performance, and scalability but only to a point. It mayalso reduce Evolvability, (horizontal) Scalability, and potentially reduces Security (increases the area to secure; Principle of Least Privilege cannot be applied).

- Returning a persisted entity (rather than a DTO) from an API promotes Productivity through Reuse, but reduces Evolvability, and may introduce security vulnerabilities (exposing too much).

- Microservices has many redeeming qualities, but they also increase manageability costs, may nudge you down a (previously untraveled) Eventual Consistency model, and (if not carefully managed) can cause Technology Sprawl.

- Solutions built around a PAAS reduce many complexities (through abstraction), and likely increase development/release velocity. Yet they’re more opinionated (than IAAS for instance), and also tend to reduce Flexibility, by constraining the number of ways to solve a problem.

MARKETING MAGPIES

Beware of Marketing Magpies; individuals who wax lyrical on new tools and technologies, based mainly around the (potentially biased) opinions of others. They may be missing the balanced judgement necessary for strategic decisions.

KNOWN QUANTITY V NEW TECH

Never hold onto something simply because it’s a Known Quantity, and never modernise just because it’s new and shiny. Change takes time and patience, and should always be based upon a business need or motivation.

Will we be demonising Microservices and Serverless in decades to come? Possibly. So let’s finish on a more uplifting note.

The best tool at your disposal is not some new tool, technology, platform, or methodology; it’s a diverse team with complementary skills and experience, with a precise understanding of the problem to solve, a good knowledge of foundations and principles, coupled with a deep understanding of the complex interrelations that exist between business and technology, and sound, balanced decision-making which remains unbiased (and sometimes undeterred) by the constant noise and buzz that encompasses our industry.

FURTHER CONSIDERATIONS

- Inculcation Bias.

- Marketing Magpies.

- Honeypot Coupling.

- PAAS.

- Technology Sprawl.

- Microservices.

- Technical Debt.

FURTHER READING

- [1] - https://academic.oup.com/fampra/article/18/3/243/531614

- [2] [3] - https://en.m.wikipedia.org/wiki/Affect_heuristic

LOSS AVERSION

“In preparing for battle I have always found that plans are useless, but planning is indispensable.” - Dwight D. Eisenhower

Coupling should be, but often isn’t, considered alongside Loss Aversion (i.e. how averse your business is to the loss of a service, or feature). Owners of systems with a tight-coupling to integral services or features, may suffer great financial hardship if those services become unavailable (e.g. whether in the temporal capacity, or something more final, such as partner bankruptcy). Astute businesses identify key services or features they are averse to losing, and either plan for that failure, or deploy countermeasures (by building slack) into the system.

Netflix provide the archetype from a systems perspective, practicing several key aspects of fault tolerance (in their Microservices architecture) to counter Loss Aversion:

- They proactively identify failing services, stop/throttle requests to them, and may terminate defective ones.

- They apply fallback procedures for underperforming services; e.g. they may present static recommendation content if the personalised customer response isn’t received in a reasonable time-frame.

- They intentionally inject faults, or additional latency, in a controlled manner, into their system to learn from it. Whilst this may seem counterintuitive, it actually increases their resiliency.

Consider the following scenario around Loss Aversion.

Let’s say I’m starting a new business venture to provide baking advice and recipes. To market my business, I need the following things:

- A domain name representative of my branding. Let’s call it blithebaking.com, costing me $5 per month.

- A website, to advertise my services, offer advice, recipes etc.

- Business cards. I use these as part of my branding exercise, to hand out to potential customers. Printed on each card are my contact details and website address (i.e. Domain Name).

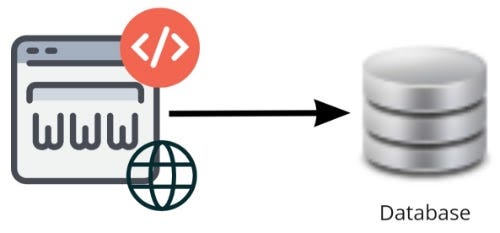

The coupling might take this form.

Domain Name Coupling

Now whilst this represents a very simple case of Coupling, there’s already a few potential failures here. In this case, I’ll focus on the domain name.

Let’s say I’m remiss, and fail to renew my domain name on its renewal date. Several scenarios can play out:

- I’m comfortable with the loss (i.e. I’m not averse to the loss, accept it, and move on). In this case I’m loosely coupled to this outcome and can easily recover.

- I’m uncomfortable with the loss, but remedial action is available (i.e. I’m moderately averse to the loss). Whilst in this case, I’m tightly-coupled to the outcome, I have recovery options (let’s say I manage to renew the domain name).

- I’m uncomfortable with the loss and there’s no remedial action available (i.e. I’m highly averse to the loss).

Let’s say option 3 occurs. I’m remiss, and lose my domain name to a competitor (it’s a popular domain name!). That competitor links their own website to the blithebaking.com domain, which either confuses all my existing custom, or routes them all to my competitor. I’ve drawn up a table of potential outcomes below.

| Scenario | Domain Name Costs (monthly) * | Business Cards Costs (one off) | Website Costs | Number of Customers (sales avg. $50) | Overall Potential Cost | (My) Level of Aversion |

|---|---|---|---|---|---|---|

| 1 | $5 | $50 (100 cards) | $0. Built it myself. | ~10 | $610 ($50 + $500) | Low |

| 2 | $5 | $50 (100 cards) | $0. Built it myself. | ~500 | ~$25K ($50 + $25,000) | Medium to High |

| 3 | $5 | $10K (100,000 cards) | $0. Built it myself. | ~10 | ~$10K ($10,000 + $500) | Medium to High |

| 4 | $5 | $10K (100,000 cards) | $0. Built it myself. | ~1000 | ~60K ($10,000 + 50,000) | High |

| 5 | $5 | $10K (100,000 cards) | 2K per change. Third party managed. | ~1000 | ~62K ($10,000 + $2,000 + $50,000) | High |

| * The domain name costs aren’t included in the overall potential costs; they highlight the disparity in how a tiny outgoing may relate to the Aversion costs it affects. | ||||||

You can see how quickly the combination of aversions can wreak havoc. The key concepts are:

- How tightly I’ve coupled myself to depend on something else (whether it’s a business card, or custom).

- How costly (to me) the loss of that dependency is (this need not be monetary).

Scenario 1 is the best in terms of low coupling/dependence. I’ve spent very little on business cards, and I have limited custom at this stage. I might grumble, but I can live with this outcome. Scenario 2 has cost me dearly due to the significant number of customers I had. Scenario 3 is an interesting one. Whilst I have limited custom, my somewhat unorthodox approach of purchasing 100,000 business cards as an up-front investment (a form of stock), prior to validating my business model, has done me a disservice, inflicting a form of self-inflicted Entropy upon myself.

Scenarios 4 & 5 show the worst cases, where my Loss Aversion is at its highest. In Scenario 5 I demonstrate that for the sake of only $5 a month, I’ve inflicted immediate costs in the region of $62,000. But there’s also the unseen, insidious costs to consider here; e.g. note that I haven’t considered the longer-term branding implications. Has it actually cost me hundreds of thousands of dollars?

How the number of customers affects our Loss Aversion falls into the scale category. Large-scale failings concern me more, because of the large disparity between (say) 10 customers, and 1000 customers.

LOSS AVERSION MAY BE SUBJECTIVE

One individual’s perception of Loss Aversion may differ to another, introducing an additional degree of complexity. For instance, whilst I might consider a $15K loss unacceptable, another - with stronger recovery capabilities - may not.

TIME CRITICALITY & LOSS AVERSION

Time criticality (the permanence of the failure) is another consideration.

Temporal failure may be sufficiently disruptive to put your business at risk, and you might want to consider:

- What is an unacceptable duration of disruption to your business? Depending upon the situation, it might be seconds, or even days.

- Timing. When did the failure occur? E.g. if my sales website fails at 3am one cold Sunday winter morning, I’m probably less concerned than it occurring at 6pm on Black Friday. Alternatively, if a large proportion of my revenue comes through events (e.g. a sports event), then the timing of a failure is crucial.

What’s interesting about my earlier example was that the outcome was - given enough time and foresight - utterly controllable. Most of the problems I found myself in were due to my inability to react, which I’d inflicted upon myself.

Whilst there’s no golden rules around Loss Aversion, that doesn’t suggest we shouldn’t plan; especially when each scenario is unique, complex, and responses may be subjective. The outcome of any decisions made here should feed into discussions around Coupling.

FURTHER CONSIDERATIONS

- Coupling.

- Complex System.

- Entropy.

- Microservices.

- https://www.sitepoint.com/help-ive-lost-domain-name

LOWEST COMMON DENOMINATOR

Lowest Common Denominator - if correctly used - can be a powerful tool. In a sense, it promotes Uniformity, which is a powerful Productivity enabler.

Consider the following example. For many years (and even today), one of the biggest problems facing integration protocols was the lack of widespread support for a single approach across the major vendors. Achieving a significant quorum was difficult as each vendor was either already heavily invested in an existing protocol, or was promoting their own. Standards existed but they were many pockets of resistance.

For instance, for many years Microsoft supported COM and DCOM, whilst Sun/Oracle promoted RMI (based upon Corba) for much of the “enterprise edition” integrations. Whilst both protocols are highly regarded, they often influenced the direction of the implementation technology; e.g. there was a vicious cycle, where opting to implement one solution in Java promoted the RMI protocol, which in turn influenced all further solution implementation choices to be Java (regardless of whether it was the right tool for the job).

As new technologies, such as XML (and later JSON) emerged, we began to see a nascent form of (implicit) standardisation (through uptake, rather than necessarily vendor-driven). Web Services settled upon string-based data transfer structures that were highly flexible, hierarchically structured, human readable, and could easily represent most business concepts, all over HTTP. It allowed the implementation technology to be decoupled from the contract (or API interface), enabling us to separate how we communicate with software services, and how behaviours/rules are implemented within it (Separation of Concerns). As long as you could communicate in string-form over HTTP, you could integrate; i.e. it became the Lowest Common Denominator.

We’re now seeing this Lowest Common Denominator used across highly-distributed polyglot systems (e.g. Microservices) as the default communication mechanism.

MICROSERVICES & LOWEST COMMON DENOMINATOR

One of Microservices key benefits is its ability to support highly distributed, heterogeneous systems. It achieves this, in large part, through the use of the Lowest Common Denominator principle to communicate between software services.

LOWERING THE GAP

Interoperability is a key design feature of the .NET platform. It lowered the gap, by providing a common engine to leverage any managed (running under the CLR) and unmanaged code (written as C++ components, or COM, ActiveX) to communicate. The benefits include:

- Greater choice of implementation technology, inculcating a “Best Tool for the Job” mindset.

- The ability to source a greater pool of talent - if you can’t get C~ talent, you might source some VB.net.

- Extended sharing and reuse capabilities; e.g. reuse an existing investment, such as using a VB.net library in a C# application.

Note that whilst Lower Representational Gap draws upon the many benefits of Uniformity, one drawback may be innovation; i.e. new ideas often come from unique sources; less readily from sources that share many common attributes within an established order.

FURTHER CONSIDERATIONS

TABLE STAKES

In gambling parlance, to play a hand at the table, you must first meet the minimal requirement; i.e. you must match/exceed the table stake.

In business, Table Stakes are the features, pricing, or capabilities, a customer expects of every product in that class; i.e. it is the Lowest Common Denominator. In many cases, Table Stakes’ features are the core, generally uninteresting aspects of a product, so integral that they’re rarely discussed in detail during a sales negotiation (you shouldn’t be at the negotiation table without it).

Whilst Table Stakes normally relate directly to the product, they need not. For instance, a customer may demand regular distribution through a technique such as Continuous Delivery, or a cooperative and inclusive culture more akin to a partnership.

INTERNAL & EXTERNAL FLOW

Good Flow is an important characteristic of any successful production line, and thus your ability to deliver regularly, accurately, and efficiently. Yet it seems that many businesses fall foul of what I term Internal Flow Myopicism; i.e. they only consider their own internal flow when considering their delivery pipeline - and this may not represent the whole picture.

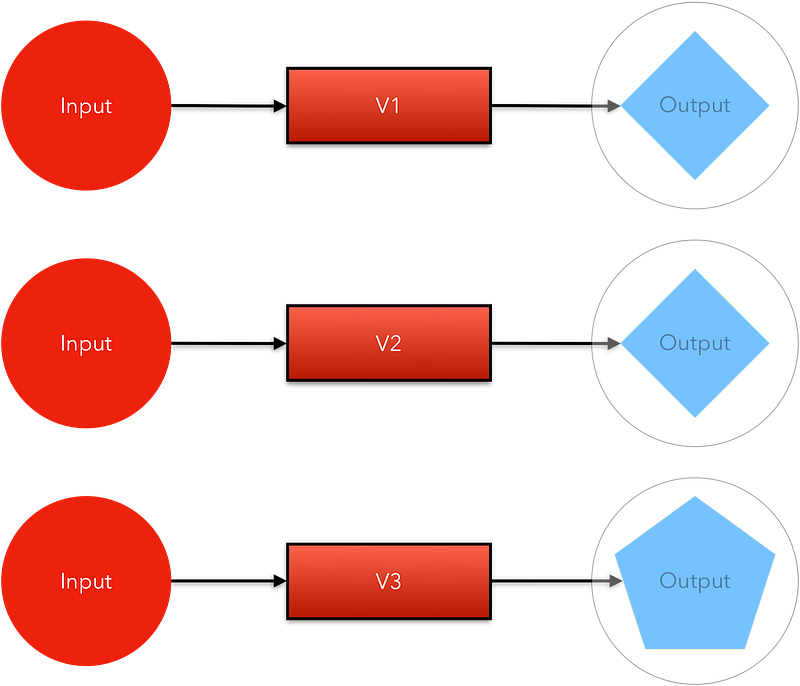

The figure below shows an example of flow within a (software) delivery pipeline. Let’s assume in this case that it’s a software supplier providing a platform to customers to build products upon. In this case, the assembly line has only five sequential stages (S1 to S5).

Flow

The “constraint” (i.e. the slowest process in the flow, or bottleneck) is, in this case, stage 3 (tagged with an egg-timer symbol; it’s also the smallest) in our five-stage process. No matter how fast the rest of the system is, throughput is dictated by this constraint. Inventory sits on the Buffer (see Drum-Buffer-Rope), waiting to feed the constraint.

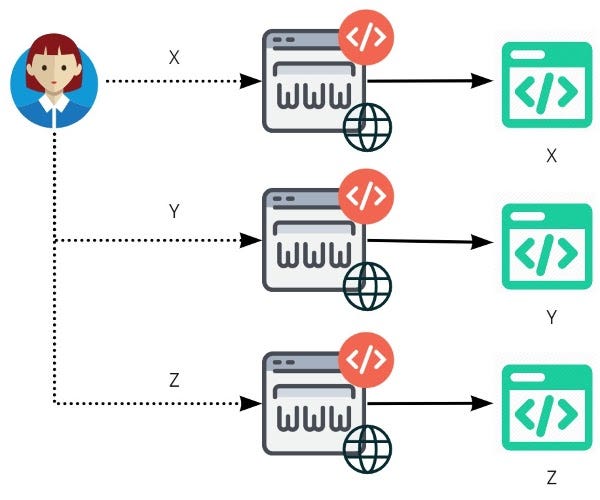

What isn’t always immediately obvious from the above diagram (and something that is easy to overlook) is that the entire flow (from inception until real use by an end user) is typically far more expansive than just the internal flow. For instance, let’s say a supplier provides you a service (such as a software platform), which their customers (i.e. you) build upon to create their own product, which they - in turn - sell to their own customers. The figure below shows who’s involved in that chain.

Customer - User

Technically, from a suppliers perspective, the supplier’s customers are also intimately linked to the flow and should not (at least from the customer’s perspective) be considered in isolation; yet they were never represented on the original (supplier) diagram. IF Value is indeed associated with both what YOU provide AND what your SUPPLIERS provide, then this is an important point.

If we were to consider Drum-Buffer-Rope to represent both the supplier and the external customer, we would likely find that the drum beats to the much slower rhythm of a specific customer (the slowest part in the chain); not the velocity of the supplier, nor the fastest customer, not even of the second slowest consumer. Let’s see that now.

Entire Flow

The Supplier pushes it’s wares out to three customers; A, B, and C. Customer A moves quickly and can easily integrate those supplier changes whenever they arrive. However, Customers B and C move slower (B in this case being the slowest) and can't integrate those supplier changes so quickly. This, therefore, is the theoretical constraint (I say theoretical as it doesn’t happen like this in practice).

Of course, this picture is somewhat skewed by reality. None of the parties necessarily knows one other, or their velocity. And neither - in most cases - is the supplier aware of them. Customers are only cognisant of the supplier velocity, and their own velocity, nothing more.

But humour me for a bit longer. It’s all academic after all. If - as I have inferred - we find that the constraint sits with a specific customer (B in our case), yet the drum actually beats at the supplier’s speed, then we find that all of the inventory (the Buffer in Drum-Buffer-Rope) builds up ahead of Customer B (much like in the tale of The Magic Porridge Pot [1], where the porridge keeps flowing until the whole town is filled with it), and to a lesser extent, in front of the other slow customers (C).

You might question the fairness of this situation; why can’t the supplier move at my speed? So allow me to present you with an analogy. Imagine yourself in ancient Greece; specifically Athens, during the time of Socrates (circa 470-399 bc, if you’re really interested). Before you stands the great man, surrounded by his avid students and followers, all deeply engaged in one of his famous discourses. Let’s also assume you understand ancient Greek. The dialogue moves at a furious pace, back-and-forth between teacher and students, and you quickly find yourself unable to follow the main thrust of the argument.

Rising from your seat, you interrupt Socrates mid-flow, explain your lack of clarity, and suggest the group adjust their verbal discourse to a tempo more suited to your mental faculties. Would the great man, or indeed his followers, appreciate your (regular) interruption, and be willing to sacrifice everyone’s learning and enjoyment (it would probably frustrate a few), to slowly recount every minutiae, solely for your comprehension? Or might you be shown the proverbial door? My money is on the second option. So why should a software vendor (Socrates in this analogy) behave differently?

Of course, there is an alternative tactic, which fits well into our analogy. Rather than interrupting the flow, and facing indignation and alienation, you try to hide your ignorance; returning each day to hear the great man speak, reclining in your seat, nodding in appreciation at appropriate intervals, but not once comprehending the argument. What’s occurring here is your own form of personal (learning) debt accrual; at some critical juncture you’ll suffer a personal catastrophe (one day Socrates turns to you and asks you to argue your point of view - of which you have none readily available), and may even be laughed out of Athens (maybe the Spartans will be more accommodating?).

Let’s now return to the software world, and see if we can fit it with our analogy. If we find that the software our business depends upon moves at an uncomfortably fast pace, we can:

- Make our views known, and hope someone listens. However, unless they’ve a (very) good reason, that supplier probably won’t slow for you (and maybe won’t even notice you). The drum beats to the supplier’s needs, not yours, not some other consumer.

- Ignore (and procrastinate over) the problem. This amounts to (virtual) inventory accruing in front

of your business (which, being a virtual offering, is difficult to see).

If you don’t keep up you accrue technical debt (affecting Agility, amongst other things),

and reduce the value you can provide to your customers. You can ignore it for a while,

but eventually it will cause:

- Embarrassment.

- A competitive disadvantage.

- Frustrated customers.

- Integration issues due to the progressively larger queue of upgrade requests.

- Stay ahead of the game. This amounts to regular dental flossing; it might not be enjoyable, but its regularity promotes good health and minimises major incidents.

FURTHER CONSIDERATIONS

DRUM, BUFFER, ROPE

Good Flow is an important characteristic of any successful production line. Drum-Buffer-Rope (DBR) - popularised in the Theory of Constraints (ToC) - is a heuristic to visualise flow and constraint management.

The figure below shows a basic example of flow within a (software) delivery pipeline.

Software Delivery Pipeline

In this case, the assembly line has only five sequential stages (S1 to S5). We find that stage 3 (tagged with an egg-timer symbol) is our system “constraint” (the slowest step in the process).

Now that we’ve identified this constraint we can represent it using Drum-Buffer-Rope. See the figure below.

Software Delivery Pipeline with Drum-Buffer-Rope

In this model the Drum represents the capacity of the “constraint” (i.e. the slowest process in the flow, or bottleneck); in this case, stage 3 in our five-stage process. No matter how fast the rest of the system is, throughput is dictated by this constraint.

WAR DRUMS

For centuries, drums were used by the military for battlefield communication, to signal an increase or decrease in tempo (such as during a march), or to signal coordinated manoeuvres.

Inventory sits on the Buffer, waiting to feed the constraint. The Rope can be pulled to increase flow to the constraint; i.e. ensuring the constraint is never starved (which would effectively cut the entire system’s throughput; a unit of time lost to the constraint is a unit lost for the overall system).

FURTHER CONSIDERATIONS

VALUE IDENTIFICATION

Value should be a measurement of the whole, not the part; not solely what you can offer, but an amalgam of what you and your supply-chain can offer your customers.

Perceived Customer Value

Whilst customers may not always explicitly state it (Functional Myopicism), they expect certain qualities in the software and systems that they use (and purchase); such as stability, Security, accessibility, Performance, and Scalability. Customers who directly foot the bill for your service probably also appreciate efficient (and cost-effective) software construction and delivery practices.

TABLE STAKES

When viewed, these system qualities are often seen as Table Stakes, and may be glossed over during sales discussions. However, that doesn’t make them irrelevant.

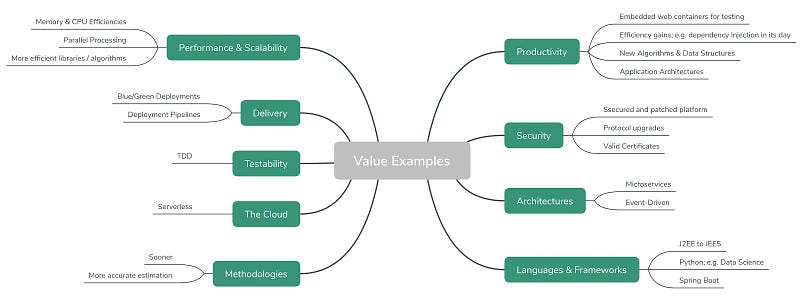

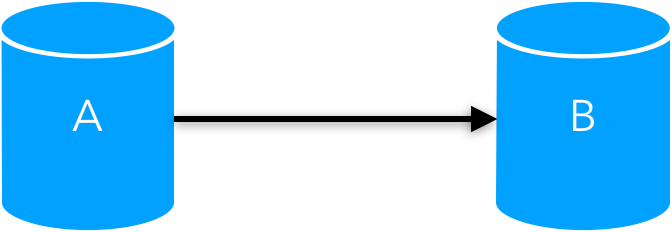

Most businesses rely heavily upon the software platforms and services of others; and as a business, we inherit traits and qualities from those suppliers (e.g. platform stability, or instability); yet we can’t necessarily Control any of these aspects themselves. And if customers value the whole, not the part, then logical deduction suggests that these inherited traits also hold customer value. The figure below shows some examples of inherited traits (value) that suppliers may offer you and your customers.

Value Examples

Some might question the merit of these qualities, so let me present you with some examples based upon my experiences.

EXPERIENCES

EMBEDDED WEB SERVERS/CONTAINERS

Web servers/containers are used to host software and serve out web requests. Historically, they have been treated as entirely independent entities, embedded within the deployment and runtime software delivery phase, rather than the construction phase; however, those lines are now being blurred.

Embedding web containers into my day-to-day engineering practices had profound benefits on my software development habits and productivity over my original working practices. Bringing development, testing, and deployment activities closer together enabled me to do more of what I had typically invested less effort into (not through choice as much as through necessity), and to do so sooner.

For instance, prior to the switch, these were the steps I would typically follow:

- Write the code.

- Build and package the code.

- Stop the web container.

- Copy the runtime artefact.

- Navigate to the correct folder in the web container, and paste in the artefact.

- Start up the container.

- Wait for it to start.

- Execute runtime acceptance tests.

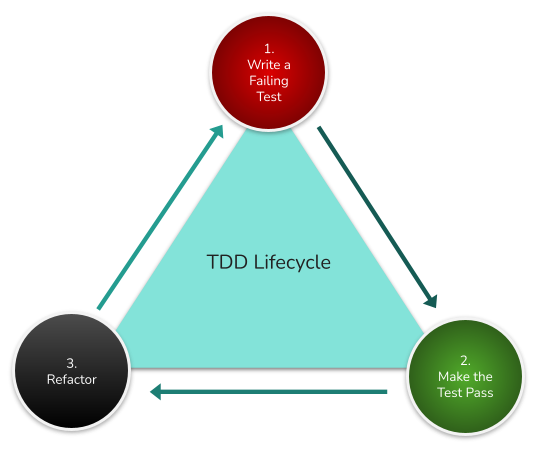

Whilst I performed some form of incremental development involving deployment and runtime test phases, it was numbing and laborious, and rife with start/stop/navigate/wait activities. Embedded web containers changed all that, and also allowed me to embrace TDD practices.

I estimate these practices improved my delivery performance by around 25%, enabling me to deliver functionality (of greater robustness), sooner, through more rigorous testing.

MAVEN

Whilst Apache Ant was a significant step forward over its predecessors (e.g. “make files”), it was - for me - Apache Maven that was the real trailblazer. Maven is a build automation and dependency management tool that uses an elegant, easy-to-follow syntax, sensible conventions (e.g. a standardised location for source code and unit tests), has fantastic dependency management (a key problem to minimise duplication and “versioning hell”), and strong plugin support (see my point about embedded web containers). The end result? Increased Productivity, Uniformity, and (release) Reliability.

MOB PROGRAMMING

Whilst initially sceptical of this approach (a group - or mob - work on the same work item together for an extended period, until complete), I soon found it to be a great way to align teams around a domain and/or a problem, gain new skills, collaborate, build trust and acceptance, grow in confidence, and increase business scalability and resilience (having a pool of people with sufficient expertise to solve similar problems increases flexibility and enables the more reliable sequencing of project management activities).

DISTRIBUTED ARCHITECTURE

The introduction of a distributed (Microservices) application architecture enabled me to innovate (use a range of different technologies to solve a problem), isolate change (increase Productivity) and therefore reduce risk, support evolution, and embed TDD practices into my day-to-day work.

THE CLOUD

The Cloud has had a significant impact on many technology-oriented businesses. Need I say more?

LINKS AND ENABLERS

Most of these technologies/techniques have close associations or interrelations; and one often becomes a direct enabler to the next. For instance, in a previous role, I couldn’t gain the benefits from embedded web containers (or Maven), until we broke the monolithic architecture into smaller "Microservices". Once that approach became available, I could more readily apply a TDD mindset to many problems, resulting in better quality code and swifter future change. That TDD-driven mindset supported a marked increase in automated test coverage, which subsequently promoted continuous practices, like CI/CD. Once that was in place, I could look at Canary Releases etc etc.

My point is that there are almost always second and third-order effects to any decision, and you can’t necessarily know what the downstream impact of introducing one idea/technology will be. As I described, the introduction of one innovation may lead to many others, leading to a flood of innovation, and cultural improvements across the business.

PERCEIVED VALUE

Surely some, if not every one of these innovations has value? So, why are they given a second-class status within so many organisations? I can think of several reasons:

- Features are - to put it bluntly - more interesting to most people than non-functional qualities, and therefore drive more interesting discussions.

- Customers infer many of these qualities as Table Stakes, so they may be glossed over and easily accepted in sales discussions. This is a double-edged sword - it allows sales discussions to be steered by the key drivers (e.g. functionality), but it doesn’t necessarily promote these as important qualities in the minds of leading internal executives. Not asking about something, and not caring about it are two different things.

- Many of these traits aren’t easily contextualised (Value Contextualisation).

- Customers don’t appreciate the ramifications of the absence of a system trait (to my last point about contextualising) until it’s too late. A failure in any one quality can cause severe embarrassment, reputational harm, or even a business’ demise (consider the reputational harm done by businesses suffering from a data breaches). I’d place a bet that most of the executives within the organisations that have suffered a significant security breach are now deeply aware (contextualised) of failings in the Security quality.

WHAT’S THE MINIMUM?

Good ROI is mainly about doing the minimum to satisfy Table Stakes, whilst investing the remainder on creating diverse functionality that excites customers. You want prospective customers to leave with the perception of a high quality product (which it hopefully is), and balance effort (and therefore ROI) by doing just enough Table Stakes to be successful. But how do you measure what's the minimum? It's rather subjective.

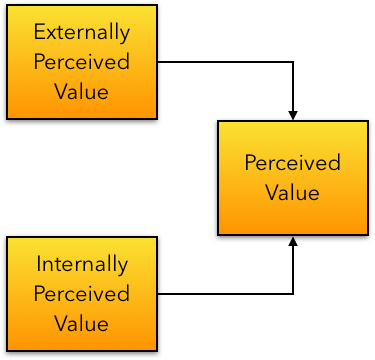

We can perceive value from two alternate angles:

- What external parties (customers) perceive.

- What the internal business - offering the service - perceives.

See the figure below.

Perceived Value

The external and internal parties perception of value are rarely identical, and can often be radically different. There’s no hard-and-fast rules in how different stakeholders perceive value. For instance, whilst some customers may perceive value lies with Functionality, Reliability, Usability, (and possibly) Security, internal stakeholders may perceive value lies in Functionality, Reliability, Scalability, Security, and Productivity. Much of these views comes down to our ability to Contextualise, yet perception may also shift over time, as people gain new learnings, or by the stimulus of some tumultuous event, causing us to reassess our previous beliefs.

We might visualise the problem as two distinct sets of perceived value, intersecting where the two parties are in agreement. See the figure below.

Two Value Sets

For instance, if both parties viewed Security as being of prime importance, then that quality would lie within the intersection, and should therefore be accorded an appropriate amount of energy from both parties. Ideally, there would be a large intersection (a commonality) between the two, representing a close alignment in goals and virtues between the two parties (you and your customers); such as in the figure below.

Large intersection means greater alignment

To my mind, this scenario better represents a partnership between aligned parties, rather than the typical hierarchical customer-supplier model that’s been a mainstay of trade for centuries. In this partnership both parties are deeply invested in building the best product or service; not because it benefits the one party only, but because it benefits everyone: 1. your business, to build a world-class product to sell widely, and 2. the customer, to allow them to reap the biggest benefits from that product.

As Adam Smith put it:

"It is not from the benevolence of the butcher, the brewer, or the baker that we expect our dinner, but from their regard to their own interest." [1]

"INTERNAL VALUE? - WHY SHOULD I CARE? I DON'T PAY FOR THAT"

External customers may be of the opinion that they don’t pay for internal value. They’re paying for functionality, not some seemingly vague notion called Maintainability, Scalability, or some other “ility”.

Whilst I understand that viewpoint, it seems rather myopic, and - to my mind - not entirely valid. In one way or another (whether in its entirety, or through some SAAS-based subscription model), external customers pay a share for the product or service that is delivered. And ALL software has production and delivery costs. And what about innovation?

If the supplier is slow (because they have inefficient construction or delivery practices), the external customer “pays” in the following ways:

There’s also something to be said around Brand Reputation. As a customer, you should be able to ask the tough questions about scalability, resilience, security, productivity etc. Misinterpret these and you’ll pay for them too; whether in fines, lost revenue, share price, or simply embarrassment. Don’t believe me? Do a quick search on some of the big organisations who’ve suffered a major security breach, or the airlines that have suffered system availability/resilience issues, and analyse the outcome.

- They’re investing in the time for that business to do things other than produce functionality, or (for instance) the further stabilisation of the platform.

- They’re not getting innovation quickly enough. Consider this a bit longer. I’ll wait... Innovation is key to the existence of many businesses; without it, many would have shrunk into insignificance. And if your competitors (using another supplier) can out-innovate you, then surely that represents a problem?

SUMMARY

My point? Different parties perceive value differently. The greater the discrepancy, the greater the chance of that partnership (eventually) failing. Some modern businesses have dismissed the rather one-dimensional, and deeply hierarchical, customer-provider business model, to favour one of a collaborative partnership, by aligning on what’s truly valuable (the qualities intersection) and learning from one another, to build long-term relationships of mutual benefit.

We dismiss the value that (upstream or downstream) suppliers can provide from our regular productization practices at our own peril; they offer benefit internally and externally; platform upgrades should be given a first-class status alongside internal product improvements.

However, to appreciate value, we must also be able to contextualise it; the subject of the next section (Value Contextualisation).

FURTHER CONSIDERATIONS

- [1] - The Wealth of Nations - Adam Smith

- Flow

- Table Stakes

- TDD

- Functional Myopicism

- Control

- Value Contextualisation

VALUE CONTEXTUALISATION

The ability to contextualise value comes from several sources, including:

- Knowledge; e.g. "Everything I’ve read on this subject indicates this is the best way to do it."

- Experience; e.g. "I’ve seen this before; we better resolve it now, or it will bite us later."

- Use the knowledge/experience of others to guide you to make better decisions.

- Experiences still to occur. These are often the stimulus of some tumultuous event, causing us to reassess our beliefs. They shape your future (business) self.

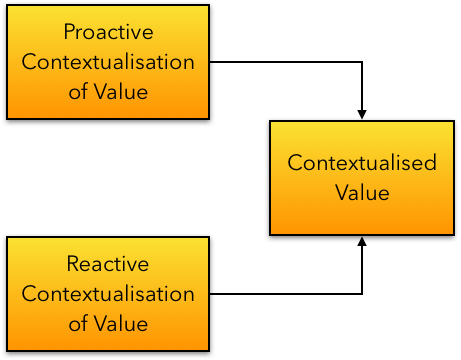

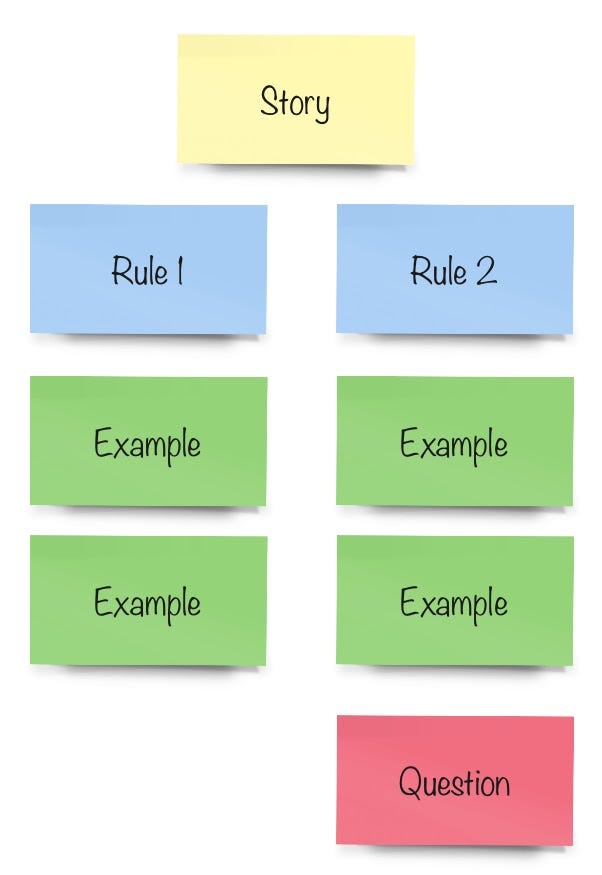

We can categorise this contextualisation as either proactive (enabling forethought - i.e. Prometheus), or reactive (hindsight, or afterthought - i.e. Epimetheus). See below.

Value Contextualisation

Value - therefore - is an amalgam of what customers can proactively contextualise, and what they must retrospectively contextualise (typically, right after a significant system failure).

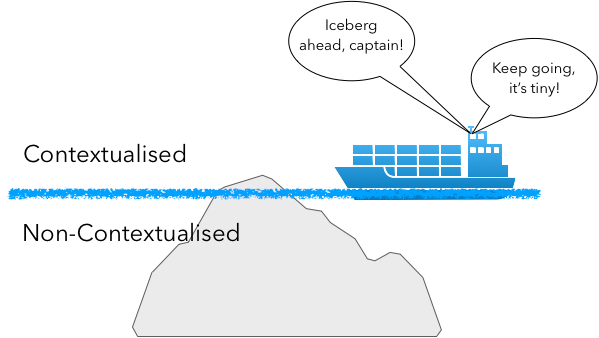

The ability to proactively contextualise value can be a very important ability. See the graphic below.

Failure to sufficiently contextualise leading to disaster

Failing to spot, or - in this case - to contextualise (the crew spotted a problem, they just never afforded it sufficient credence) the unseen, and change course, may result in disaster; i.e. if we build a product or business where little is visible (and known), and much remains invisible (the insidious unknown), we should proceed with caution, and be mindful of icebergs.

Most business customers I meet with see what’s above the water (e.g. functionality), and thus, can contextualise it. Yet, they don’t necessarily see, ask, or are given access to, what lies below the surface. Thus, they can’t contextualise its importance or indeed, its purpose. Business news is rife with examples of systems that weren’t sufficiently contextualised (or given credence) by their owners, forcing them to react (and rather swiftly) after a tumultuous event [1]. But the horse has already bolted.

A CHRISTMAS CAROL

In Charles Dickens’ famous classic novel A Christmas Carol [2], the main character - Eberneezer Scrooge - is portrayed as a spiteful, grasping, misanthropist, with no love for anything other (it seems) than money. You probably you know the story.

Scrooge had lost all sense of his humanity, and became blind to problems of his own making. Prior to the main event, we are treated to various scenes of loathsome rapacity as he turns away men of charity with hurtful words; scathes and mocks his good-natured nephew; loads misery and poverty upon Bob Cratchett and his family, and even dismisses the chained spectre of his once lauded, and now dead business partner, Jacob Marley, warning Scrooge to repent before it is too late (Marley is dismissed as a piece of undigested food). None of these actions are sufficient for Scrooge to contextualise what he truly values, so he is given a hard lesson.

Scrooge is visited by three ghosts on the eve of Christmas; the ghosts of Christmas past, present, and future. Through the course of the night, Scrooge is shown the error of his ways, and his own mortality is laid bare. It slowly dawns on him that he cares for more than money (e.g. his own mortality, how others view him, and his regained love of fellow man). He repents in time, and is able to change his destiny.

Where am I going with this? Well, it took the visitation of the ghosts for Scrooge to contextualise what he truly held valuable; i.e. it took a tumultuous event for him to reassess his values/beliefs, in order to make changes. Fortunately, time was on his side.

Whilst this novel has a fantastical theme, the underlying issue of reactive contextualisation still applies to how some businesses are run. These businesses are ill-prepared for the visitation of some “quality spectre” (whether it be Security, Resilience, Scalability, or regulatory non-compliance), and are forced to reactively contextualise. It takes some tumultuous event to wake them, during which time they’ll probably suffer harm (e.g. reputationally, financially, innovation dampeners).

We can’t change an outcome, yet by proactively contextualising, we can influence both our current position, and our future.

There’s another aspect to consider here too; whilst what’s below the surface may not sink you, it also may not be to your advantage. The metaphor of the graceful swan above water (external customers contextualise this), with duck legs paddling furiously below (the internal business contextualise this) fits well into this model.

You might be paying for a swan, but getting a duck! Aesthetic Condescension is a popular trap to the unwary; slap a new front end on a legacy product, and sell it as something new. The unwary see a flashy new UI and link the entire product (and practices) to modernity, even though it’s just a veneer. Again, there is a contextualisation problem; we’re blinded by beauty and can’t see the ugliness below (or the other idiom; "you can put lipstick on a pig, but it’s still a pig" [3]).

WILLINGNESS TO LEARN

Of course, much of this proactive contextualisation assumes a willingness to learn.

I knew a senior executive who (at least outwardly) seemed entirely unwilling to learn about the key technologies or practices used to build the business’ product suite. Now, I’m not suggesting that that executive should be coding software, but their lack of appreciation for it, and how teams worked, made it hard for them to contextualise, so they couldn’t proactively support the business needs - e.g. to identify, correctly prioritise, and solve key problems on the horizon, prior to them becoming serious impediments. My view is that if you’re in the technology business, you should make an effort to understand technology, at least at a high level.

“Only if we understand, can we care. Only if we care, will we help.” - Jane Goodall

SUMMARY

Contextualising value is not necessarily about resolution; foremost, it's about awareness, and then deciding what - if anything - to do about it. Once we can contextualise problems, we may then progress into risk management.

Value Contextualisation comes in two flavours:

- Proactive (Prometheus).

- Reactive (Epimetheus).

One is about understanding your path and (potentially) changing your future; the other is about dealing with the after effects of an unknown and unexpected future (more of a fatalist mindset). The converse of proaction is reaction. Favour Proaction over Reaction.

Many have failed to sufficiently contextualise, or give credence to a problem, and suffered. Business news is rife with stories of failing systems leaving customers stranded, significant data losses causing eye-watering financial penalties (sometimes into the hundreds of millions of dollars), and key systems failing to scale at inauspicious times, angering customers and inducing financial recompense. Business Reputation is at stake.

FURTHER CONSIDERATIONS

- [1] - https://www.cnet.com/news/biggest-data-breaches-of-2019-same-mistakes-different-year

- [2] - A Christmas Carol - Charles Dickens

- [3] - “Some superficial or cosmetic change to something so that it seems more attractive, appealing, or successful than it really is.” https://idioms.thefreedictionary.com/lipstick+on+a+pig

- Value Identification

- Table Stakes

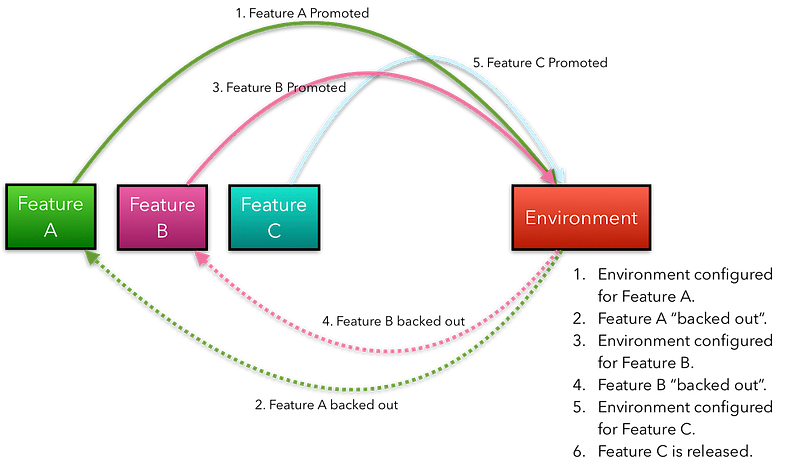

THE PRINCIPLE OF STATUS QUO

Retaining the status quo - meaning the “the existing state or condition” - is important to many businesses.

Whilst many modern books, practices and methodologies place a heavy emphasis on change and innovation at both the technology and cultural levels (i.e. break Cultural Stasis), they tend to neglect to mention the fact that most businesses also depend upon a certain degree of status quo to survive. Innovation tends to be about future success, but stability is about the present situation.

BALANCING FORCE

Sometimes, we are driven so much by what we can achieve, that we forget to ask if we should do it. The Principle of Status Quo suggests that we maintain a modicum of balance between change and stability (Stability v Change).

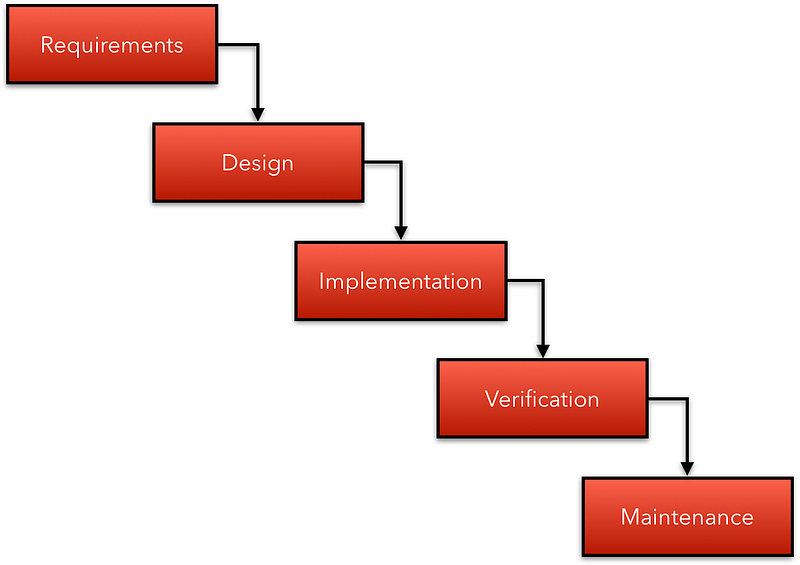

Most businesses, and customers, can’t manage extreme change; it requires deep and sustained cognitive load, and each change carries an inherent risk. Whilst innovation is desirable, it needs to be carefully judged and managed so it doesn’t impact the current perception of stability. No one I know has (successfully) attempted to transition from Waterfall to Agile in one fell swoop, or migrated from on-prem to the Cloud, or shifted from a Monolithic architecture to Microservices. Change occurs incrementally, not as one big bang, and we maintain most of the status quo whilst undertaking that transition.

Consider - for instance - Agile, Blue/Green Deployments, Canary Releases & A/B Testing. Whilst these practices are certainly a vigorous nod towards progression and change, their approach is methodical and also protective of the status quo, with features like small, incremental change (Agile), fast rollback (Blue/Green), and smart routing to minimise impact on more conservative customers (Canary).

FURTHER CONSIDERATIONS

THE CIRCLE OF INFLUENCE

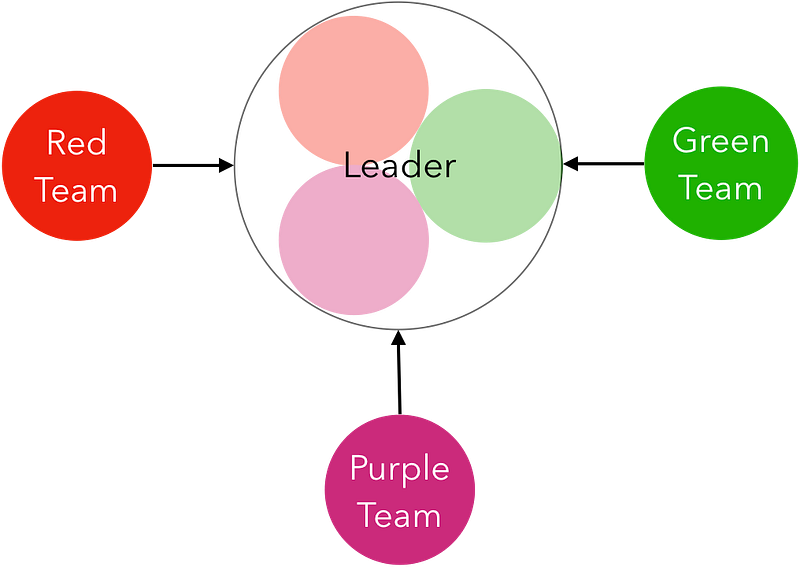

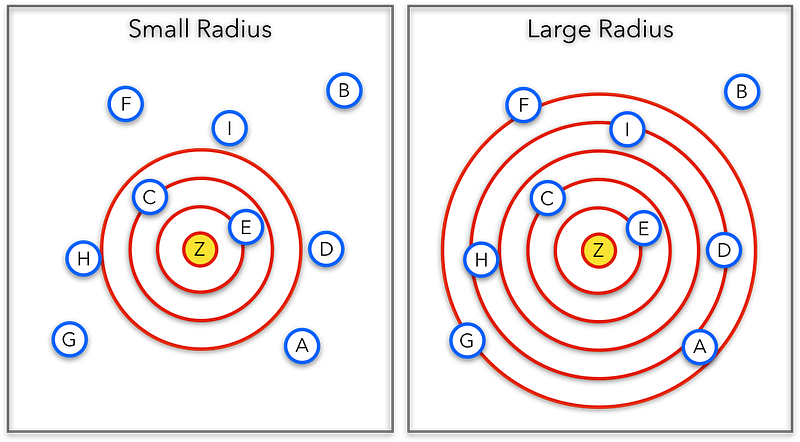

The Circle of Influence is a way to visualise who (and by what degree) change influences. It can be a useful tool for influencing and negotiation. The figure below shows an example.

The Circle of Influence

- Layer 1 - the least influence; provides the least degree of access.

- ...

- Layer 5 - the greatest influence; everyone has access to the feature.

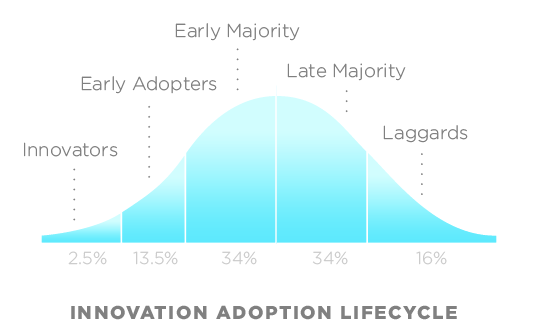

It takes significant effort to convince others of the need to change (whether that change is how we work, functional, or cultural). People have many different reasons to reject change; from a simple bias, to a lack of understanding, or that they (rightly or wrongly) think the change holds no value. Attempting to convince everyone, in a single big bang change, is doomed to failure. See the figure below. [1]

Adoption of Innovation

The graph shows you when change is actioned by different groups. Note it occurs at different times, and has various influencers.

EXAMPLE

At a previous employment I saw an opportunity to make a big difference in the way we built software; yet I didn’t shout loudly for all to hear. It would have been pointless, and may even have hampered the change’s introduction.

I began by influencing my immediate circle (colleagues I worked with on a day-to-day basis), explaining the problem (don’t underestimate the time this takes) I aimed to solve, discussing my proposal with them, and listening to their concerns and improvements, before progressing onto the next stage; a proof of concept (PoC).

This PoC was a success, and gave me and my immediate circle greater confidence that we could expand into the next circle of influence - the wider technology department. Again, there were more discussions, we took improvements on board, extended the PoC, and then took it to the next set of stakeholders (another circle of influence). By the third or fourth concentric circle, we had sufficiently influenced all of the C-level execs to give us the nod to use it for all future work.

If I had approached this big bang, I wouldn’t have found sufficiently strong support to influence everyone. Additionally, the overall solution wouldn’t have gained from the improvements offered by my colleagues.

The solution is to build up concentric rings of influence until you’ve enough motion that there’s no stopping it. But you need to get that stone rolling in the first place; and they can be big.

BIDIRECTIONAL INFLUENCE

Circle of Influence has bidirectional influence. You promote ideas for others to trial, and they agree, disagree, or offer improvements (influence in the opposite direction).